In music creation, rapid prototyping is essential for exploring and refining ideas, yet existing generative tools often fall short when users require both structural control and stylistic flexibility. Prior approaches to stem-to-stem generation can condition on other musical stems but offer limited control over rhythm, while timbre-transfer methods let users specify exact rhythms but cannot condition on musical context. We introduce DARC, a generative drum accompaniment model that conditions on both musical context from other stems and explicit rhythm prompts such as beatboxing or tapping tracks. Using parameter-efficient fine-tuning, we augment STAGE [1], a state-of-the-art drum stem generator, with fine-grained rhythm control while maintaining musical context awareness.

In recent years, numerous works [1-7] have achieved high-quality, musically coherent accompaniment generation. However, these methods often lack fine-grained control over time-varying features. Such control is desirable in musical prototyping, where a creator wishes to quickly evaluate an early musical idea before investing substantial time in it. In this work, we focus on the Tap2Drum task, in which a user records a rhythm prompt, such as a beatboxing or tapping track, and a generative model renders it as drums. State-of-the-art approaches for Tap2Drum focus on timbre transfer, where the user provides a timbre prompt to explicitly specify the desired drum timbre. For instance, [8] requires the user to provide drum audio as the timbre prompt; this can limit the speed of iteration, as different songs require different drumkit sounds, and the user must search for an existing audio sample matching their desired timbre. Other works in music editing [9] provide text control, but it can be difficult to articulate drum timbres using text, and these methods also tend to suffer from timbre leakage [8]. Some works, both in Tap2Drum [10,11] and in accompaniment generation [1,12], offer onset-based rhythm control, but this is too coarse to capture the implied timbre categories of a rhythm prompt.

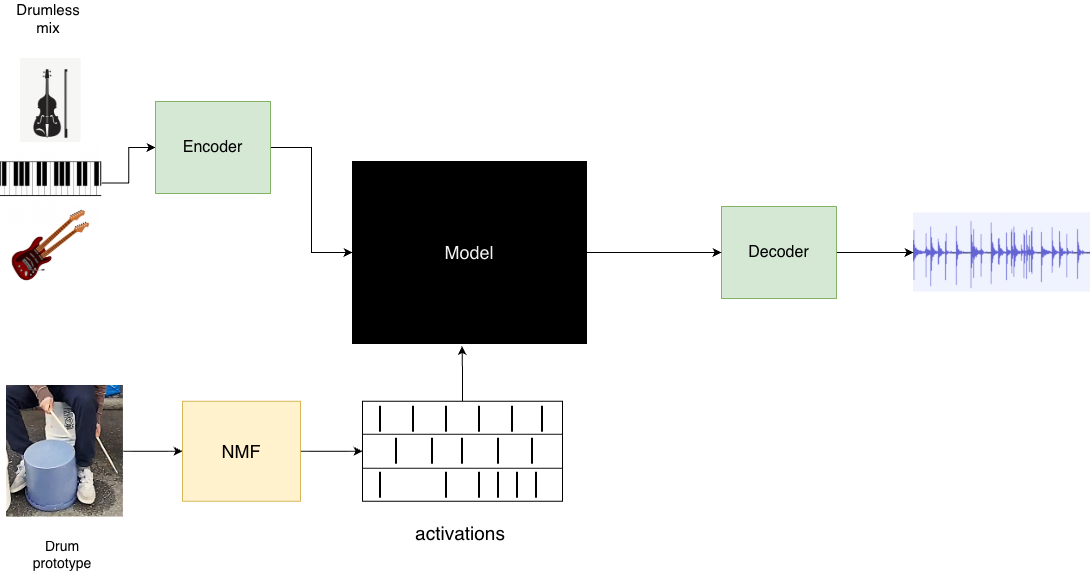

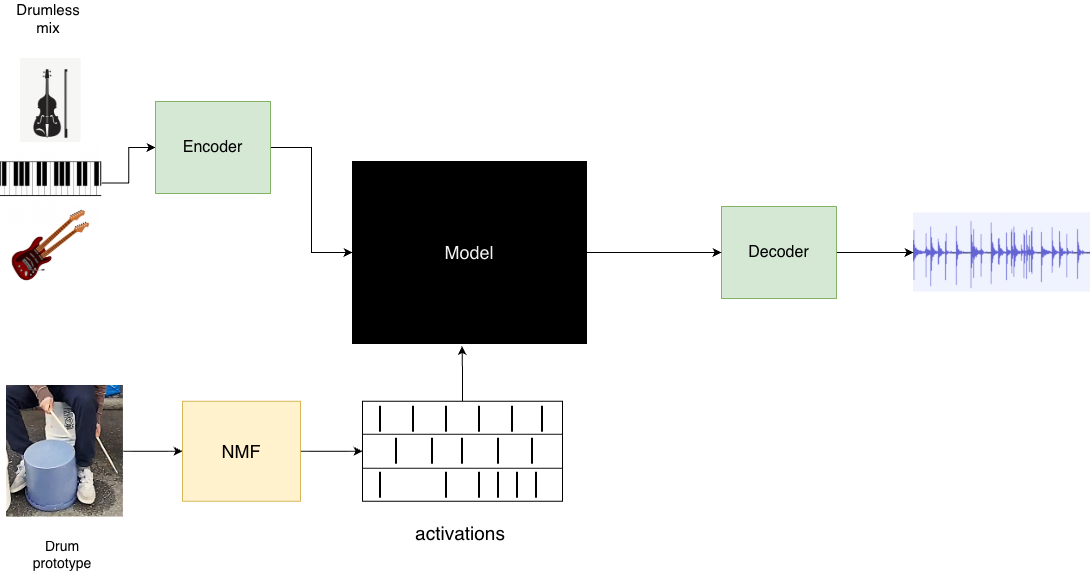

© R. Brosnan. “DARC: Drum accompaniment generation with fine-grained rhythm control”, submitted as a final project for CMU 15-798.

We propose DARC, a drum accompaniment generation model that takes as input musical context and a rhythm prompt. Our rhythm feature representation, based on non-negative matrix factorization (NMF), provides greater granularity than onset-based methods by classifying each onset into a timbre class. DARC is a fine-tuning of STAGE [1], a state-of-the-art drum accompaniment model. Our motivation for inferring timbre from musical context rather than from a timbre prompt is twofold: first, drums are rarely a solo instrument, i.e., the end goal for a drum track is often to accompany a mix; second, removing the requirement for users to provide a timbre prompt can shorten their iteration cycle, enabling them to explore more ideas. For our dataset, we extract drum stems from the FMA dataset [13] using Demucs [14,15]. During fine-tuning, we utilize the parameter-efficient method proposed in [2].
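To make the idea of an NMF-based rhythm representation concrete, the following is a minimal sketch of how a magnitude spectrogram could be turned into (onset time, timbre class) pairs. It is an illustration under stated assumptions, not the implementation used in DARC: the multiplicative-update NMF, the peak-picking rule, the relative threshold, and the names `nmf`, `transcribe_rhythm`, and `synthetic_spectrogram` are all hypothetical choices made for this example, and the input is a synthetic spectrogram rather than real beatboxing audio.

```python
import numpy as np


def nmf(V, n_components, n_iter=500, seed=0, eps=1e-9):
    """Factor a non-negative matrix V (freq x time) as W @ H using
    multiplicative updates for the squared-error objective."""
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], n_components)) + eps
    H = rng.random((n_components, V.shape[1])) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ (H @ H.T) + eps)
    return W, H


def transcribe_rhythm(V, n_classes, frame_rate, rel_threshold=0.3):
    """Return a list of (onset_time_seconds, timbre_class) pairs.

    Assumption: each NMF component stands for one timbre class, and an
    onset is a local peak in the rise of the summed activations.
    """
    W, H = nmf(V, n_classes)
    # Fold each template's norm into its activation so components are comparable.
    H = H * np.linalg.norm(W, axis=0)[:, None]
    total = H.sum(axis=0)
    # Half-wave rectified frame-to-frame increase of total activation.
    novelty = np.maximum(np.diff(np.concatenate(([0.0], total))), 0.0)
    threshold = rel_threshold * novelty.max()
    events = []
    for t in range(len(novelty)):
        left = novelty[t - 1] if t > 0 else 0.0
        right = novelty[t + 1] if t + 1 < len(novelty) else 0.0
        if novelty[t] > threshold and novelty[t] >= left and novelty[t] > right:
            # The class is the component with the largest activation at the onset.
            events.append((t / frame_rate, int(np.argmax(H[:, t]))))
    return events


def synthetic_spectrogram():
    """Toy spectrogram: alternating low-band and high-band hits with decay."""
    n_freq, n_time = 16, 45
    low = np.zeros(n_freq)
    low[0:4] = [1.0, 0.8, 0.5, 0.2]
    high = np.zeros(n_freq)
    high[12:16] = [0.3, 0.6, 0.9, 1.0]
    decay = np.array([1.0, 0.5, 0.25, 0.125])
    V = np.zeros((n_freq, n_time))
    for frame, template in [(5, low), (15, high), (25, low), (35, high)]:
        V[:, frame:frame + 4] += np.outer(template, decay)
    return V


events = transcribe_rhythm(synthetic_spectrogram(), n_classes=2, frame_rate=100.0)
for onset_time, timbre_class in events:
    print(f"onset at {onset_time:.2f} s, class {timbre_class}")
```

NMF component indices are arbitrary, so the class labels carry no meaning on their own (component 0 is not necessarily a kick). What the sketch shows is that, unlike a bare list of onset times, this representation can distinguish hits with different spectral profiles.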

Our contributions are twofold:

• We introduce a generative drum model that can condition on both musical context and specific rhythms, including the timbre class of each onset.

• We evaluate our model on musical coherence with the input mix and on onset and timbre-class adherence to the rhythm prompt, exposing limitations in existing evaluation metrics.

A recent line of work has explored music accompaniment generation [1-3, 5-7, 16], in which a model generates one or more tracks to accompany a given musical mix. Many of these models support text conditioning and are in fact fine-tunings of the text-to-music model MusicGen [17]. While these stem-to-stem generation models can condition on other stems in the mix, they are not designed for fine-grained rhythm control. Some approaches allow for conditioning on onsets [1,12]. However, the rhythm control provided by these approaches is quite loose; the model does not preserve the onsets, but rather uses them as a guide to generate an embellished drum track. In addition, onset timings alone do not capture implied timbre classes, such as whether an onset comes from a kick drum or a snare. Our work seeks to provide tighter rhythm control while preserving timbre classes.

Other work has focused on more specialized aspects of drum generation. For example, [18] generates drum accompaniments in real time, and [19] uses a bidirectional language model to generate drum fills. We leave the adaptation of our methods for real-time or fill generation as future work.

Figure 1. Architecture of the proposed rhythm-conditioned music generation model. Musical context and rhythm prompt are provided as audio inputs. The tokenized musical context is prepended to the input sequence, and the rhythm prompt is transcribed into (onset time, timbre class) pairs using non-negative matrix factorization (NMF). The rhythm embedding is passed through the self-attention layers via jump fine-tuning and adaptive in-attention [2]. The model outputs EnCodec audio tokens that are decoded to the final waveform.

An alternative line of work explores the Tap2Drum task, which takes tapping or beatboxing as input and seeks to generate a drum track with the same rhythm. Tap2Drum was first introduced in [10], which takes onset times as input and generates drums as MIDI. Other work such as TRIA [8] performs timbre transfer, directly converting the rhythm prompt audio to high-fidelity drum audio. In addition to a rhythm prompt, such methods take a timbre prompt in the form of audio, requiring users to present an audio sample with the exact timbre they desire. Further work has explored non-zero-shot timbre transfer
