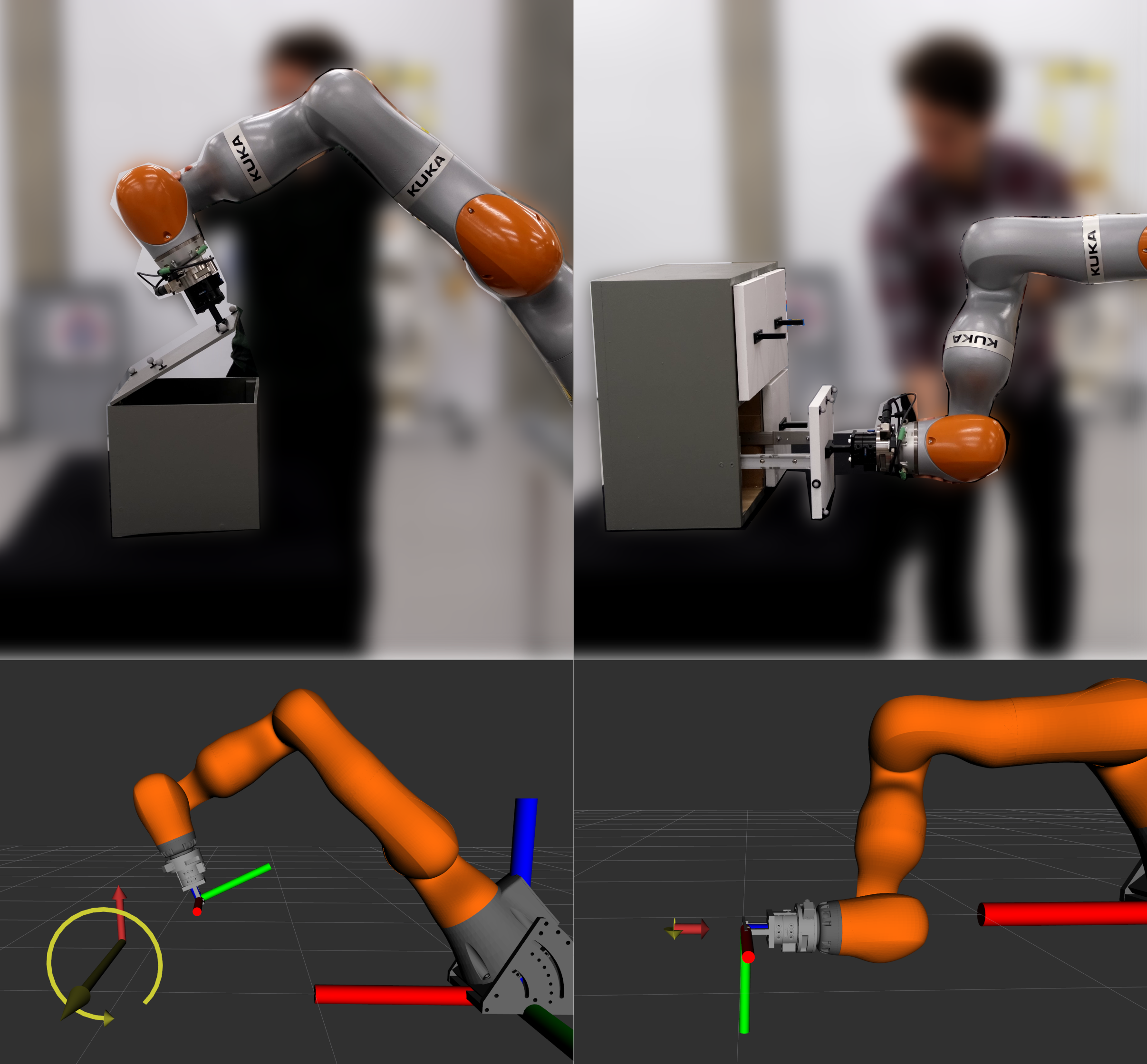

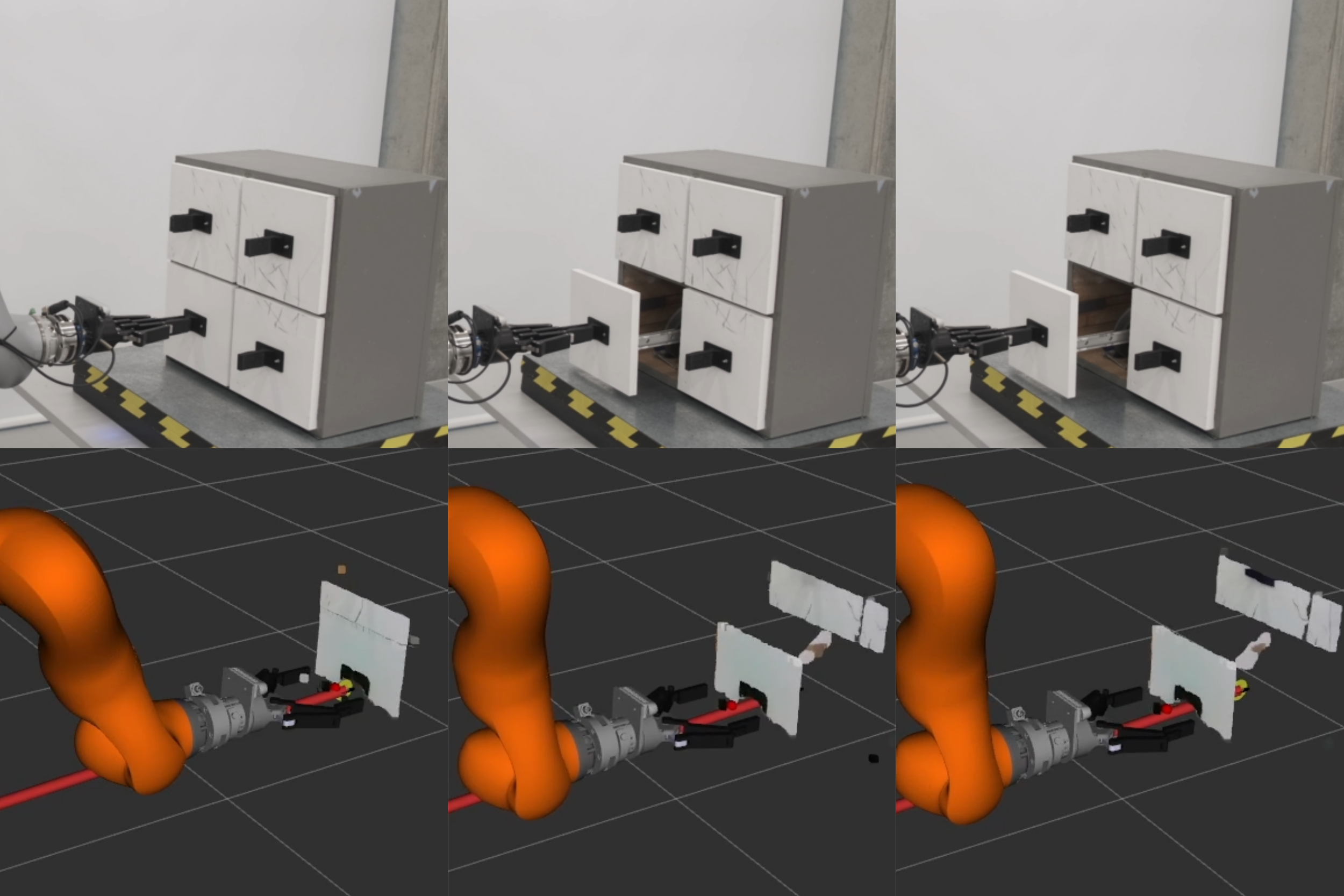

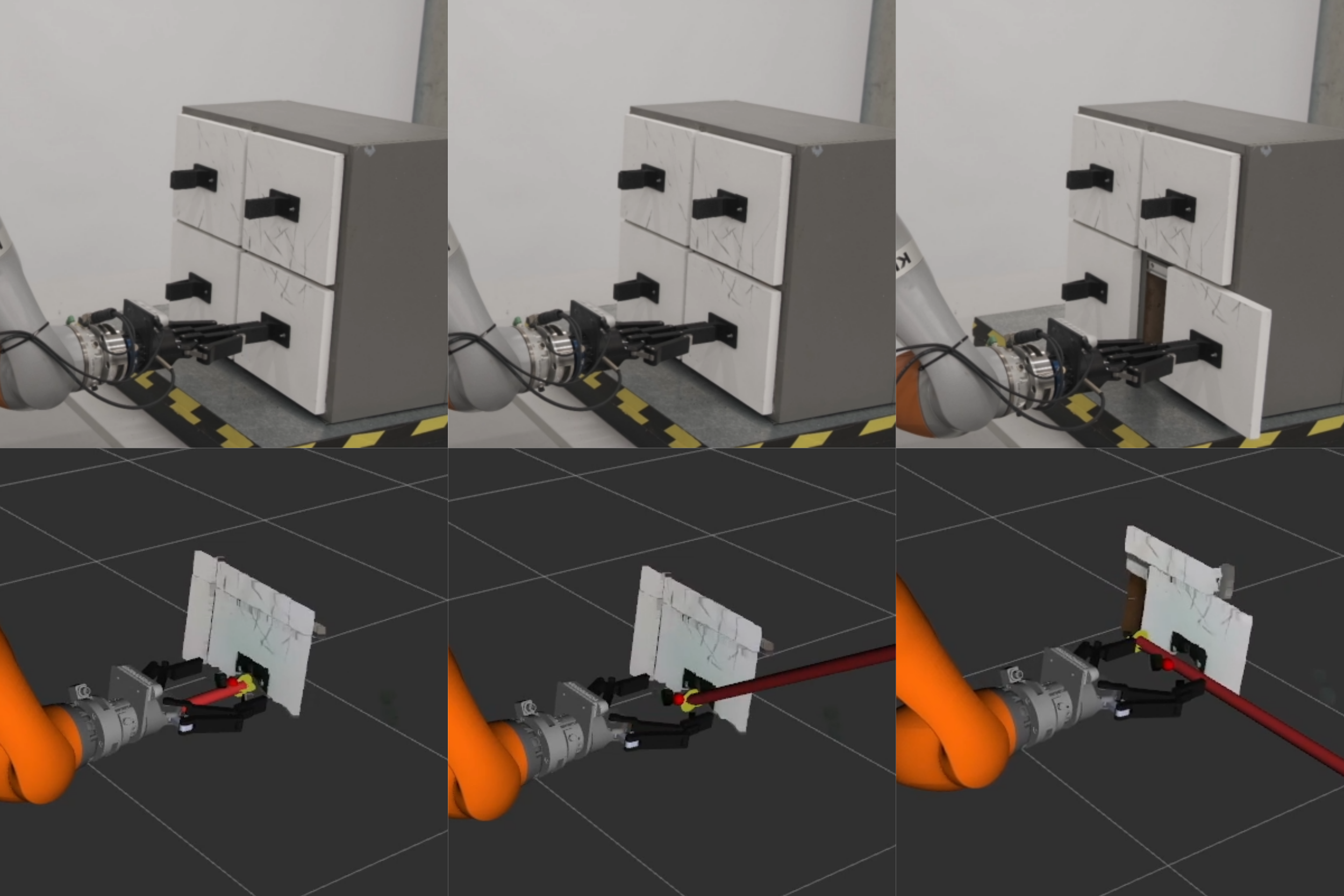

From refrigerators to kitchen drawers, humans interact with articulated objects effortlessly every day while completing household chores. To automate these tasks, service robots must be capable of manipulating arbitrary articulated objects. Recent deep learning methods have been shown to predict valuable priors on the affordance of articulated objects from vision. In contrast, many other works estimate object articulations by observing the articulation motion, but this requires the robot to already be capable of manipulating the object. In this article, we propose a novel approach that combines these methods: a factor graph for online estimation of articulation that fuses learned visual priors and proprioceptive sensing during interaction into an analytical model of articulation based on screw theory. With our method, a robotic system makes an initial prediction of articulation from vision before touching the object, and then quickly updates the estimate from kinematic and force sensing during manipulation. We evaluate our method extensively in both simulation and real-world robotic manipulation experiments. We demonstrate several closed-loop estimation and manipulation experiments in which the robot was capable of opening previously unseen drawers. In real hardware experiments, the robot achieved a 75% success rate for autonomous opening of unknown articulated objects.

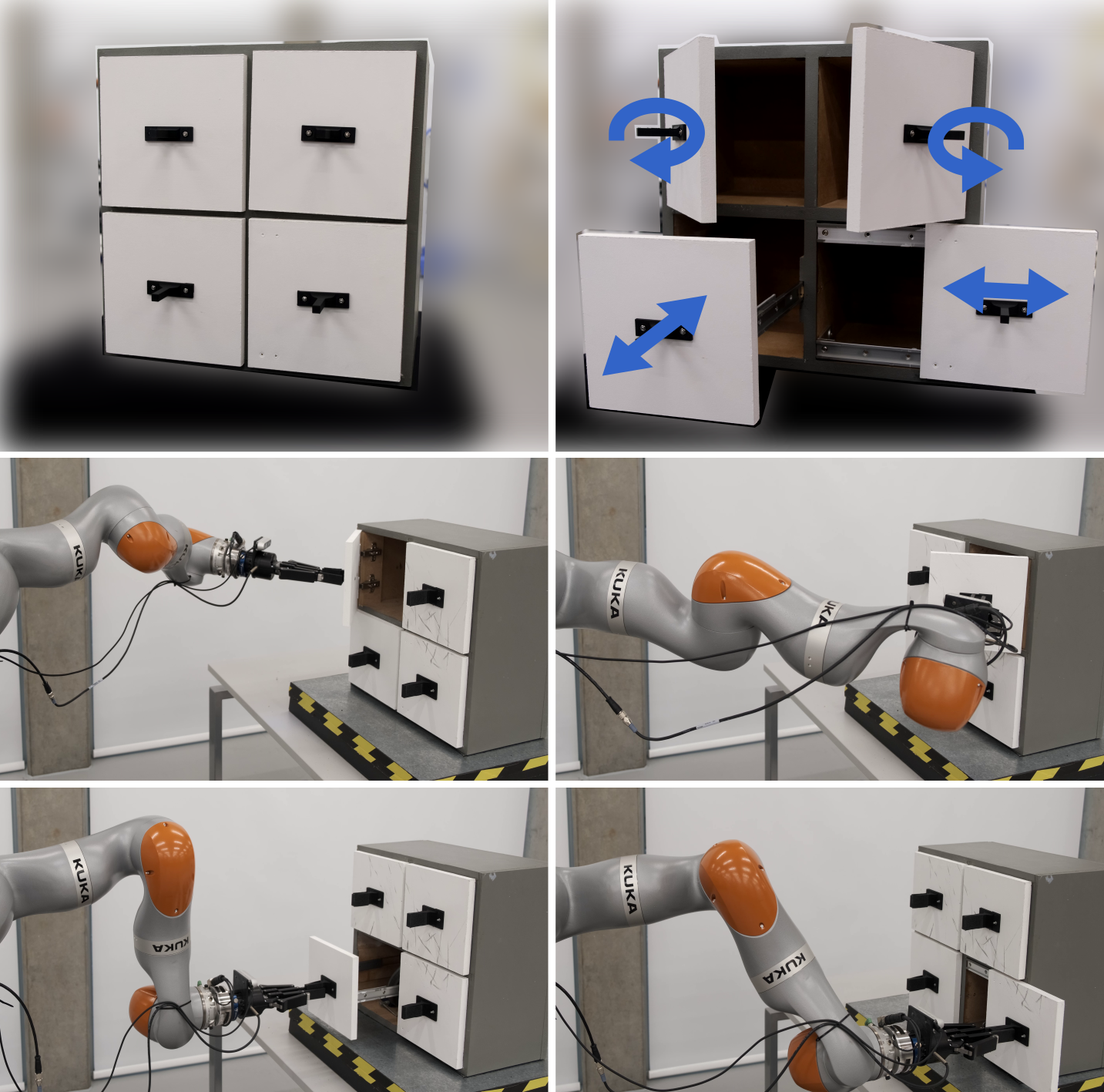

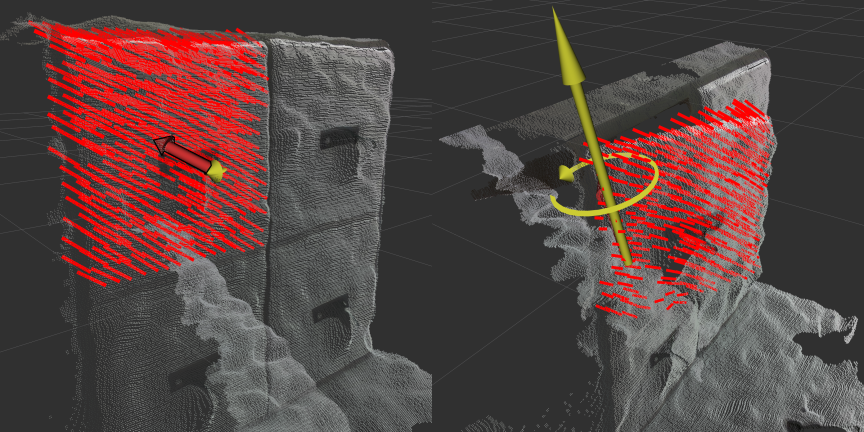

If service robots are to assist humans in performing common tasks such as cooking and cleaning, they must be capable of interacting with and manipulating common articulated objects such as dishwashers, doors, and drawers. To manipulate these objects, a robot needs an understanding of the articulation, either as an analytical model (e.g., a revolute, prismatic, or screw joint) or as a model implicitly learned through a neural network. Many recent works have shown how deep neural networks can predict articulated object affordance using point cloud measurements. To achieve this, common household articulated objects are rendered in simulation with randomized states as training examples. Because most common household objects, such as refrigerator doors, have reliably repeatable articulations, the learned models generalize effectively to real data. However, predicting articulation from visual data alone can often be unreliable.

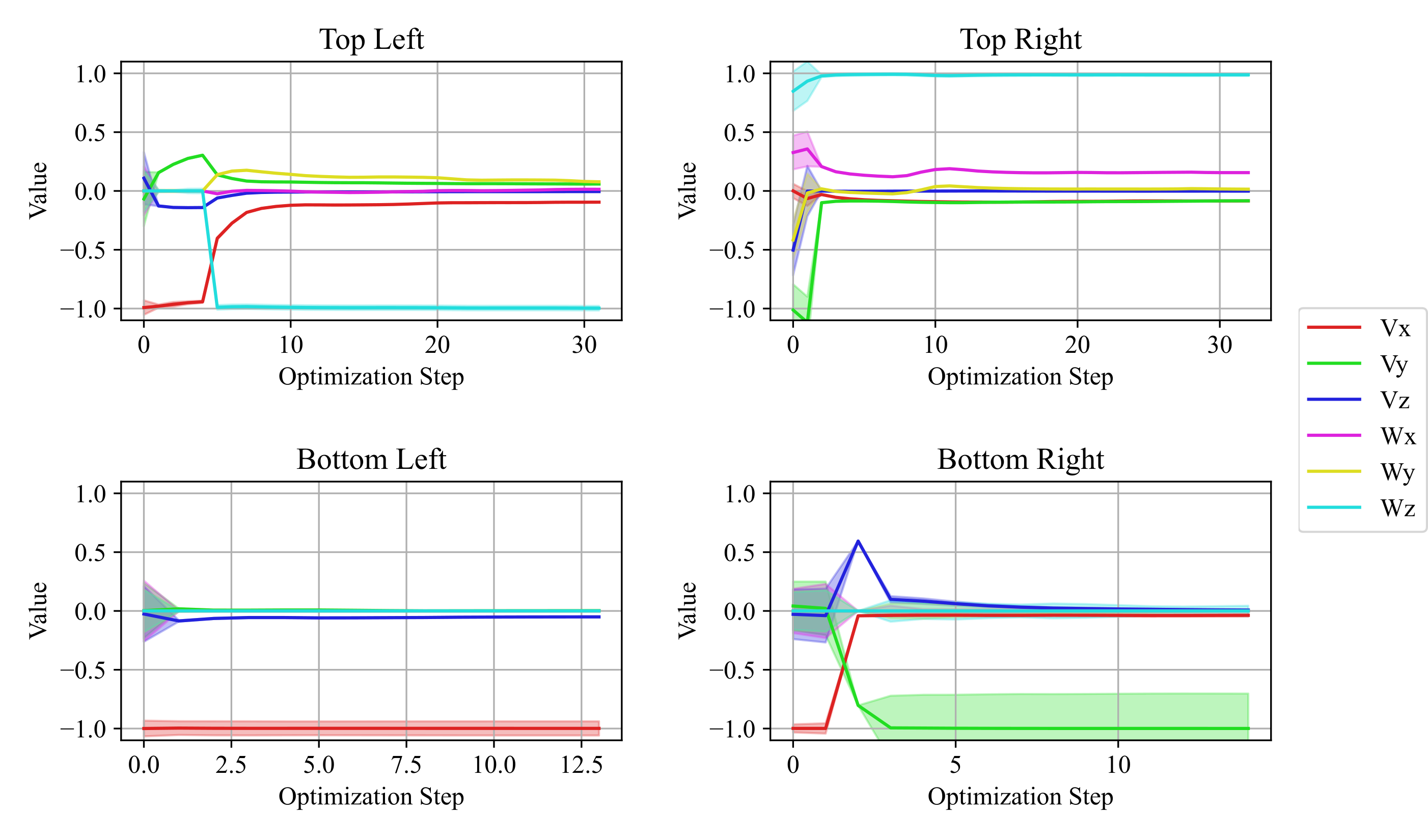

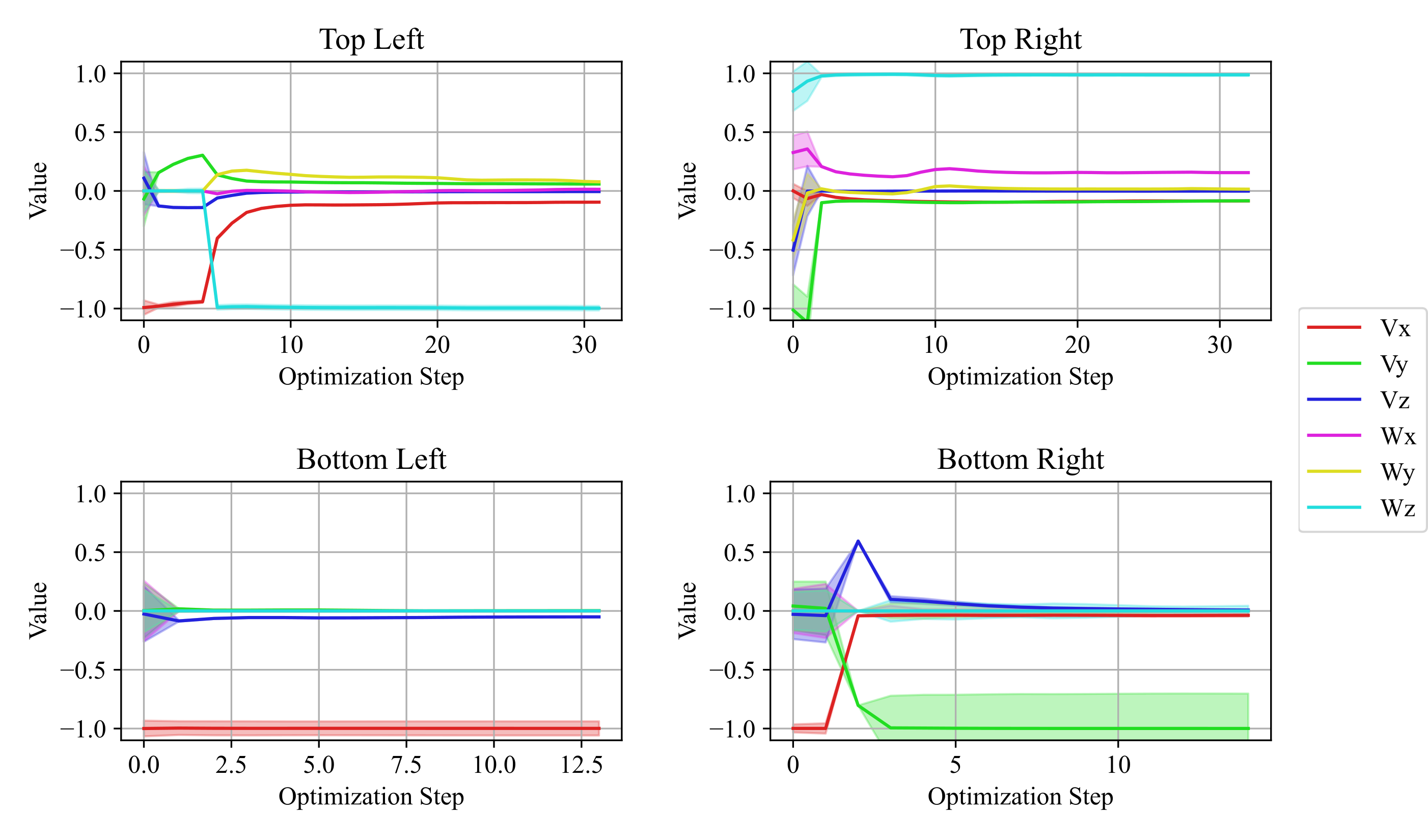

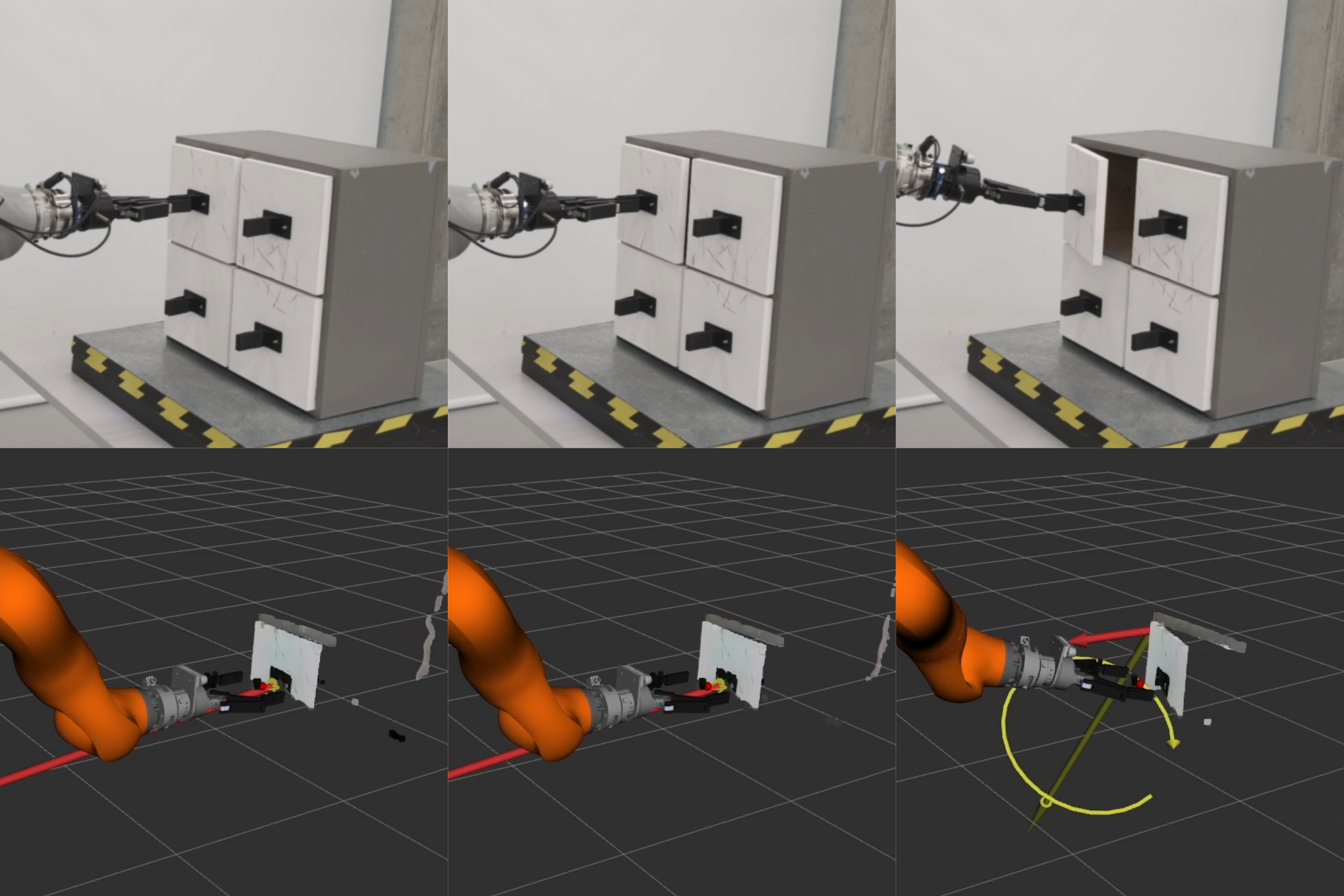

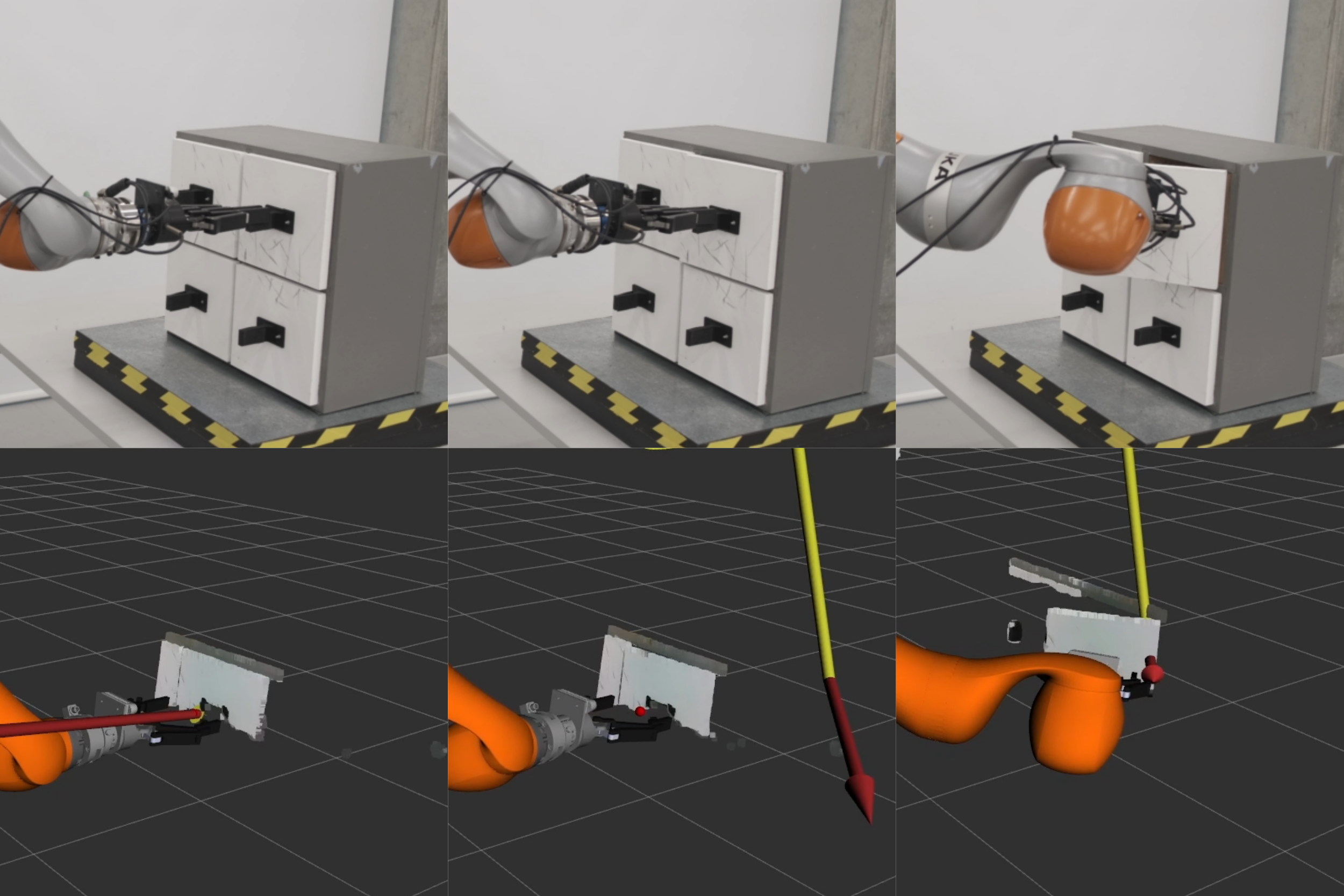

For example, the cabinet in Fig. 1 has four doors which appear identical when closed. It is impossible for humans or robots to reliably predict how each door articulates from vision alone. However, once a person or robot interacts with the doors, they are revealed to open in completely different ways. This is challenging for robotic systems that rely exclusively on vision for understanding articulations and are not capable of updating their articulation estimate online. In this work, we propose a novel method for jointly optimizing visual, force, and kinematic sensing for online estimation of articulated objects.

Another branch of research has focused on probabilistic estimation of articulations. These works have typically used analytical models of articulation and estimate the object articulation by observing the motion of the object during interaction. However, they largely rely on a good initial guess of articulation so that the robot can begin moving the object.
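To make the analytical joint models named earlier (revolute, prismatic, and screw) concrete, the following is a minimal sketch of how each can be written as a rigid-body transform parameterized by an axis. It is an illustration under our own assumptions, not the formulation used in this work: the function names `screw_transform` and `prismatic_transform`, and the parameterization by an axis direction, a point on the axis, and a pitch, are hypothetical choices made for this example.

```python
import numpy as np

def skew(w):
    """Return the 3x3 skew-symmetric matrix of a 3-vector."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def screw_transform(axis, point, theta, pitch=0.0):
    """Homogeneous transform for a rotation of `theta` radians about an axis
    passing through `point`, plus a translation of `pitch * theta` along the
    axis. With pitch = 0 this is a pure revolute joint (hypothetical helper)."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    point = np.asarray(point, dtype=float)
    K = skew(axis)
    # Rodrigues' rotation formula.
    R = np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)
    T = np.eye(4)
    T[:3, :3] = R
    # Rotate about `point` rather than the origin, then slide along the axis.
    T[:3, 3] = point - R @ point + pitch * theta * axis
    return T

def prismatic_transform(axis, distance):
    """Homogeneous transform for a pure translation along `axis`
    (hypothetical helper)."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    T = np.eye(4)
    T[:3, 3] = distance * axis
    return T

# A door hinged on a vertical axis through (1, 0, 0), opened by 90 degrees.
handle = np.array([2.0, 0.0, 0.0, 1.0])
door = screw_transform([0, 0, 1], [1, 0, 0], np.pi / 2)
handle_on_door = door @ handle

# A drawer pulled 0.3 m along the x axis.
drawer = prismatic_transform([1, 0, 0], 0.3)
handle_on_drawer = drawer @ np.array([0.5, 0.0, 0.2, 1.0])
```

In this sketch the revolute joint is the zero-pitch case of the screw transform, while the prismatic joint is handled separately as a pure translation, which is one common way to avoid an infinite pitch.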

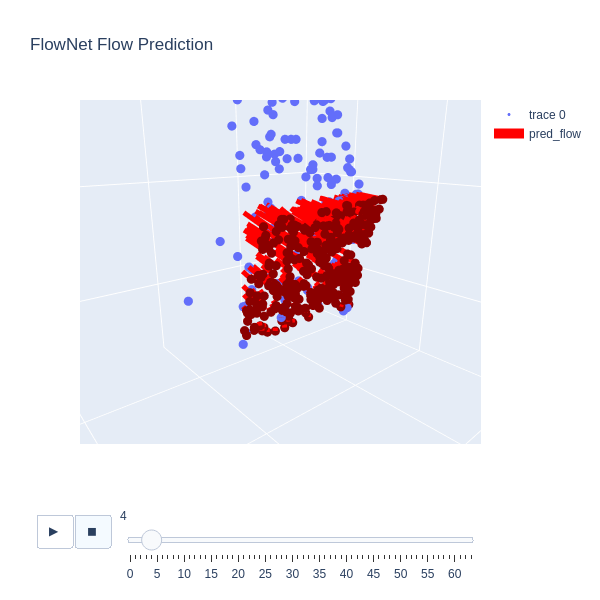

In this work, we significantly improve upon our previous work presented in Buchanan et al. (2024), in which we first investigated estimation of articulated objects. The first improvements are a neural network for affordance prediction that incorporates uncertainty in its predictions and a completely new method of including learned articulation affordances in a factor graph to provide a good initial guess of articulation. We have also incorporated kinematic and force sensing in the factor graph, which updates the estimate online during interaction. The result is a robust multi-modal articulation estimation framework. The contributions of this paper are as follows:

- We propose online estimation of articulation parameters using vision and proprioceptive sensing in a factor graph framework. This improves upon our previous work with a new uncertainty-aware articulation factor, leading to improved robustness in articulation prediction.
- We additionally introduce a new force sensing factor for articulation estimation.
- We demonstrate full system integration with shared autonomy for opening unseen articulated objects.
- We validate our system with extensive real-world experimentation, opening visually ambiguous articulations with the estimation running in a closed loop. We demonstrate improvements over Buchanan et al. (2024) by opening all doors of the cabinet in Fig. 1, which was not previously possible.
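The idea of starting from an uncertain visual prior and refining it with measurements gathered during interaction can be illustrated with a toy example. The sketch below is not the factor graph proposed in this work: it assumes a single unknown prismatic axis, treats the visual prediction and each observed end-effector displacement direction as independent Gaussian observations of that axis, and fuses them in information form. The function name `fuse_axis` and the noise parameters are hypothetical, and renormalizing the result to unit length is an approximation.

```python
import numpy as np

def fuse_axis(prior_axis, prior_sigma, displacements, meas_sigma):
    """Fuse a prior axis direction with observed displacement directions.

    Each source is treated as an isotropic Gaussian observation of the axis
    (an assumption of this sketch), so the estimate is the
    information-weighted mean, renormalized to unit length.
    Returns the fused unit axis and its approximate standard deviation."""
    prior_axis = np.asarray(prior_axis, dtype=float)
    prior_axis = prior_axis / np.linalg.norm(prior_axis)
    info = 1.0 / prior_sigma**2           # accumulated information (1 / variance)
    info_vec = info * prior_axis          # information-weighted sum of directions
    for d in displacements:
        d = np.asarray(d, dtype=float)
        norm = np.linalg.norm(d)
        if norm < 1e-6:
            continue                      # skip displacements too small to give a direction
        info_vec = info_vec + (d / norm) / meas_sigma**2
        info += 1.0 / meas_sigma**2
    mean = info_vec / info
    return mean / np.linalg.norm(mean), 1.0 / np.sqrt(info)

# The visual prior (wrongly) suggests the drawer slides along x with high uncertainty.
# Ten small displacements observed during pulling all point along y.
observed = [[0.0, 0.01, 0.0]] * 10
axis, sigma = fuse_axis([1.0, 0.0, 0.0], 0.5, observed, 0.05)

# Before any interaction, the estimate is simply the prior.
axis_before, sigma_before = fuse_axis([1.0, 0.0, 0.0], 0.5, [], 0.05)
```

With these hypothetical noise values, a handful of confident kinematic observations quickly outweighs an uncertain visual prior, which mirrors the behavior described above: the prior provides a starting direction, and interaction corrects it.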

In this section, we provide a summary of related works on the estimation of articulated objects. This is a challenging problem that has been investigated in many different ways in computer vision and robotics. Because this work is concerned with robotic manipulation of articulated objects, we first cover related works in interactive perception (Bohg et al., 2017), which has a long history of use for estimation and manipulation of articulated objects. Then, we briefly cover the most relevant recent deep learning methods for vision-based articulation prediction. Finally, we discuss some methods that have integrated different systems for robot experiments.

Interactive perception is the principle that robot perception can be significantly facilitated when the robot interacts with its environment to collect information. This has been applied extensively in the estimation of articulated objects: once the robot has grasped an articulated object and started to move it, there are many sources of information from which to infer the articulation parameters. Today, few works use proprioception for estimating articulation due to challenges in identifying an initial grasp point and pulling direction. Jain and Kemp (2010) simplified the problem by assuming a known prior grasp pose and initial opening force vector. This allowed their method to autonomously open several everyday objects, such as cabinets and drawers, using only force and kinematic sensing. They demonstrated that once a robot is physically interacting with an articulated object and is given
