📝 Original Info

Title: RovoDev Code Reviewer: A Large-Scale Online Evaluation of LLM-based Code Review Automation at Atlassian

ArXiv ID: 2601.01129

Date: 2026-01-03

Authors: Kla Tantithamthavorn, Yaotian Zou, Andy Wong, Michael Gupta, Zhe Wang, Mike Buller, Ryan Jiang, Matthew Watson, Minwoo Jeong, Kun Chen, Ming Wu

📝 Abstract

Large Language Model (LLM)-powered code review automation has the potential to transform code review workflows. Despite the advances of LLM-powered code review comment generation approaches, several practical challenges remain for designing enterprise-grade code review automation tools. In particular, this paper aims at answering the practical question: how can we design a review-guided, context-aware, quality-checked code review comment generation without fine-tuning? In this paper, we present RovoDev Code Reviewer, an enterprise-grade LLM-based code review automation tool designed and deployed at scale within Atlassian's development ecosystem with seamless integration into Atlassian's Bitbucket. Through offline, online, and user feedback evaluations over a one-year period, we conclude that RovoDev Code Reviewer is effective in generating code review comments, with 38.70% of comments leading to code resolution (i.e., triggering code changes in subsequent commits); and offers the promise of accelerating feedback cycles (i.e., decreasing the PR cycle time by 30.8%), alleviating reviewer workload (i.e., reducing the number of human-written comments by 35.6%), and improving overall software quality (i.e., finding errors with actionable suggestions).

CCS Concepts • Software and its engineering → Software development techniques.


📄 Full Content

RovoDev Code Reviewer: A Large-Scale Online Evaluation of LLM-based Code Review Automation at Atlassian

Kla Tantithamthavorn, Monash University & Atlassian, Australia

Yaotian Zou, Atlassian, USA

Andy Wong, Atlassian, USA

Michael Gupta, Atlassian, USA

Zhe Wang, Atlassian, Australia

Mike Buller, Atlassian, Australia

Ryan Jiang, Atlassian, Australia

Matthew Watson, Atlassian, Australia

Minwoo Jeong, Atlassian, USA

Kun Chen, Atlassian, USA

Ming Wu, Atlassian, USA

Abstract

Large Language Model (LLM)-powered code review automation has the potential to transform code review workflows. Despite the advances of LLM-powered code review comment generation approaches, several practical challenges remain for designing enterprise-grade code review automation tools. In particular, this paper aims at answering the practical question: how can we design a review-guided, context-aware, quality-checked code review comment generation without fine-tuning? In this paper, we present RovoDev Code Reviewer, an enterprise-grade LLM-based code review automation tool designed and deployed at scale within Atlassian’s development ecosystem with seamless integration into Atlassian’s Bitbucket. Through offline, online, and user feedback evaluations over a one-year period, we conclude that RovoDev Code Reviewer is effective in generating code review comments, with 38.70% of comments leading to code resolution (i.e., triggering code changes in subsequent commits); and offers the promise of accelerating feedback cycles (i.e., decreasing the PR cycle time by 30.8%), alleviating reviewer workload (i.e., reducing the number of human-written comments by 35.6%), and improving overall software quality (i.e., finding errors with actionable suggestions).
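The headline metrics above can be illustrated with a small sketch. It assumes that code resolution is the share of generated comments followed by a code change in a subsequent commit (as the abstract defines it) and that the reported decreases are simple relative differences between a baseline and a treatment value; the exact computation used in the paper is not given in this excerpt. The names `ReviewComment`, `triggered_code_change`, `code_resolution_rate`, and `relative_reduction` are hypothetical, and the data is made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReviewComment:
    """A generated review comment and whether a later commit addressed it."""
    comment_id: str
    triggered_code_change: bool  # hypothetical field name, not from the paper


def code_resolution_rate(comments: list[ReviewComment]) -> float:
    """Share of comments followed by a code change in a subsequent commit."""
    if not comments:
        return 0.0
    resolved = sum(c.triggered_code_change for c in comments)
    return resolved / len(comments)


def relative_reduction(baseline: float, treatment: float) -> float:
    """Relative decrease of `treatment` with respect to `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - treatment) / baseline


# Toy data, not taken from the paper.
comments = [
    ReviewComment("c1", True),
    ReviewComment("c2", False),
    ReviewComment("c3", False),
    ReviewComment("c4", True),
    ReviewComment("c5", False),
]
rate = code_resolution_rate(comments)                          # 2 of 5 comments resolved
reduction = relative_reduction(baseline=10.0, treatment=7.5)   # e.g. cycle time in hours
print(f"code resolution: {rate:.1%}, relative reduction: {reduction:.1%}")
```

Under this reading, a 30.8% decrease in PR cycle time would mean the treatment value is 69.2% of the baseline value.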

CCS Concepts

• Software and its engineering → Software development techniques.

Keywords

Code Review Automation, Review Comment Generation, Online Production, Online Experimentation.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

ICSE-SEIP ’26, Rio de Janeiro, Brazil

© 2026 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-2426-8/2026/04

https://doi.org/10.1145/3786583.3786851

ACM Reference Format:

Kla Tantithamthavorn, Yaotian Zou, Andy Wong, Michael Gupta, Zhe Wang, Mike Buller, Ryan Jiang, Matthew Watson, Minwoo Jeong, Kun Chen, and Ming Wu. 2026. RovoDev Code Reviewer: A Large-Scale Online Evaluation of LLM-based Code Review Automation at Atlassian. In 2026 IEEE/ACM 48th International Conference on Software Engineering (ICSE-SEIP ’26), April 12–18, 2026, Rio de Janeiro, Brazil. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3786583.3786851

1 Introduction

Code review is a cornerstone of modern software engineering [7, 37, 38, 30], serving as a critical quality assurance practice that helps teams identify defects, share knowledge, and maintain high coding standards. However, as software projects grow in size and complexity, manual code review becomes increasingly time-consuming and resource-intensive [43], often leading to bottlenecks in the development process. Automating aspects of code review using advances in Large Language Models (LLMs) [46, 47, 45, 41, 42, 22, 21] could speed up code review processes, particularly code review comment generation, defined as the generative task of producing natural-language review comments for a given code change [22, 14, 20, 32, 48].

Despite these advances, several practical challenges remain for designing enterprise-grade code review automation tools. First, data privacy and security are paramount, especially when processing sensitive customer code and metadata, making it infeasible to fine-tune LLMs on proprietary or user-generated content in many industrial contexts. Second, review guidelines play a critical role in guiding inexperienced reviewers through a code review, yet they remain largely ignored in recent LLM-powered code review comment generation approaches [32]. Third, most retrieval-augmented generation (RAG) approaches [32, 20] rely on rich historical data, which may not be available for newly created or context-limited projects. Finally, LLMs are prone to generating noisy or hallucinated comments that may be vague, non-actionable, or factually incorrect [27, 39], potentially diminishing the utility of automated code review tools.

arXiv:2601.01129v2 [cs.SE] 20 Jan 2026


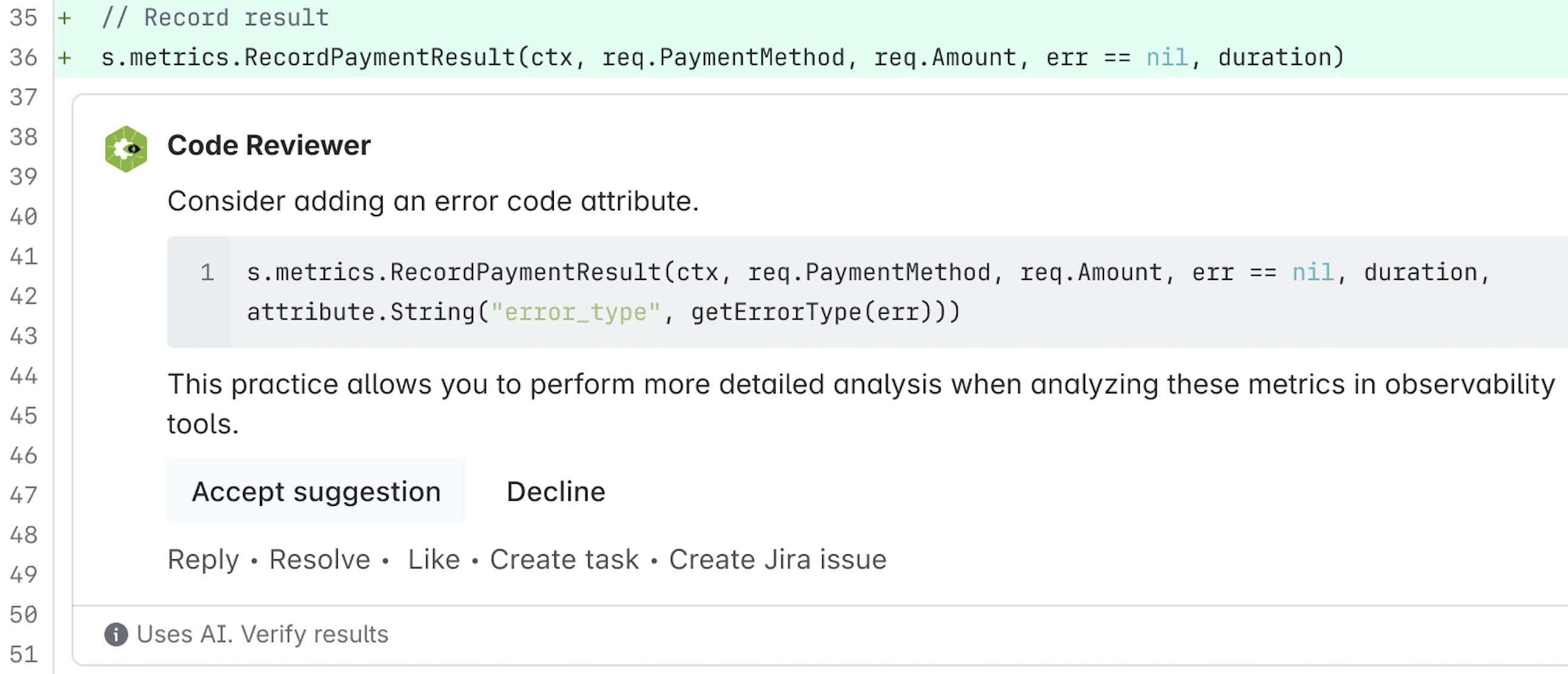

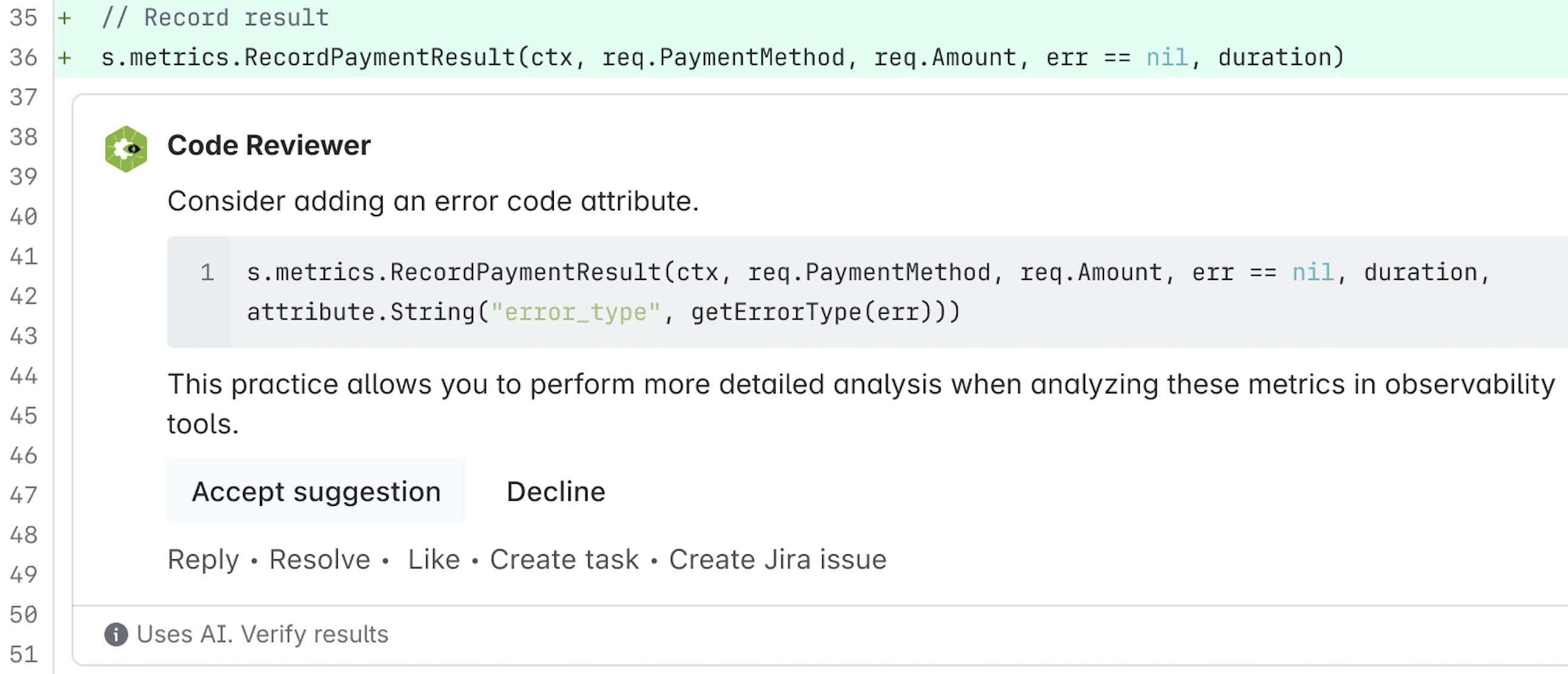

In this paper, we present RovoDev Code Reviewer, a Review-Guided, Quality-Checked Code Review Automation. Our RovoDev Code Reviewer consists of three key components: (1) a zero-shot context-aware review-guided comment generation; (2) a comment quality check on factual correctness to remove noisy comments (e.g., irrelevant, inaccurate, inconsistent, or nonsensical); and (3) a comment quality check on actionability to ensure that the RovoDev-generated comments are most l
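The three components listed above can be pictured as a generate-then-filter pipeline. The following is a minimal sketch under stated assumptions, not the authors' implementation: the `Comment` type, the `review` function, and the callable signatures are hypothetical, and the stand-in checks are trivial heuristics used only so the example runs without a model. This excerpt does not specify how the actual generation or quality checks are implemented.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Comment:
    file_path: str
    line: int
    text: str


# Hypothetical signatures: each stage is injected so the sketch stays model-agnostic.
Generator = Callable[[str, str], list[Comment]]  # (diff, review guidelines) -> candidates
Check = Callable[[str, Comment], bool]           # (diff, comment) -> keep the comment?


def review(diff: str, guidelines: str, generate: Generator,
           is_factually_correct: Check, is_actionable: Check) -> list[Comment]:
    """Generate candidate comments, then apply the two quality checks in order."""
    candidates = generate(diff, guidelines)                             # component (1)
    factual = [c for c in candidates if is_factually_correct(diff, c)]  # component (2)
    return [c for c in factual if is_actionable(diff, c)]               # component (3)


# Stand-in stages for demonstration only; a real system would likely call a model here.
def fake_generate(diff: str, guidelines: str) -> list[Comment]:
    return [
        Comment("app.py", 3, "Handle the None case before calling .strip()."),
        Comment("app.py", 99, "This loop is slow."),  # points outside the diff
        Comment("app.py", 3, "Looks odd."),           # too vague to act on
    ]


def fake_factual(diff: str, c: Comment) -> bool:
    # Toy rule: the referenced line must exist in the diff.
    return c.line <= len(diff.splitlines())


def fake_actionable(diff: str, c: Comment) -> bool:
    # Toy rule: very short comments are treated as non-actionable.
    return len(c.text.split()) >= 5


diff = "def clean(s):\n    # strip whitespace\n    return s.strip()\n"
kept = review(diff, "Prefer explicit None handling.", fake_generate,
              fake_factual, fake_actionable)
for c in kept:
    print(f"{c.file_path}:{c.line}: {c.text}")
```

Passing the stages in as callables keeps the ordering of the checks explicit and lets each one be replaced independently.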

