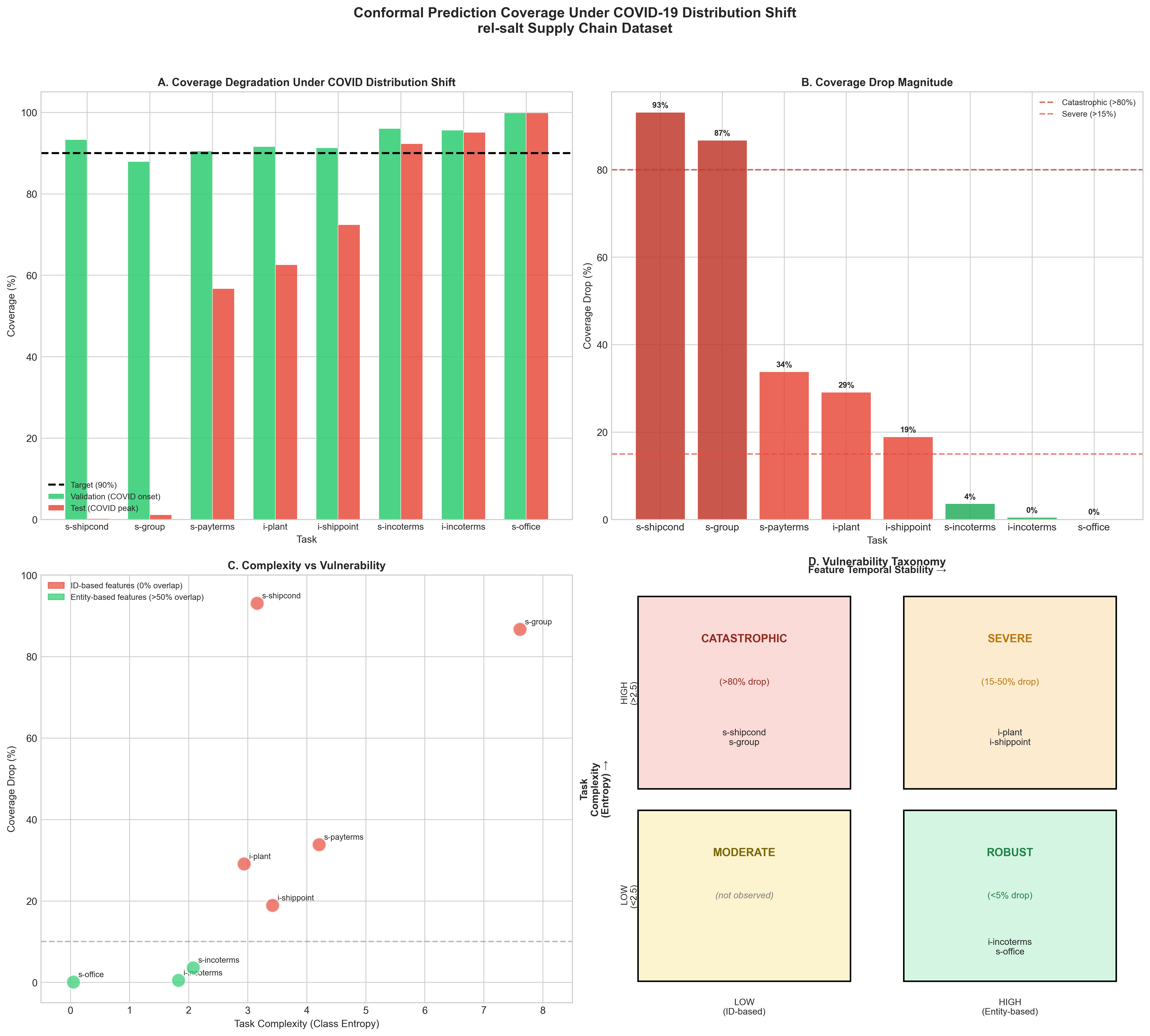

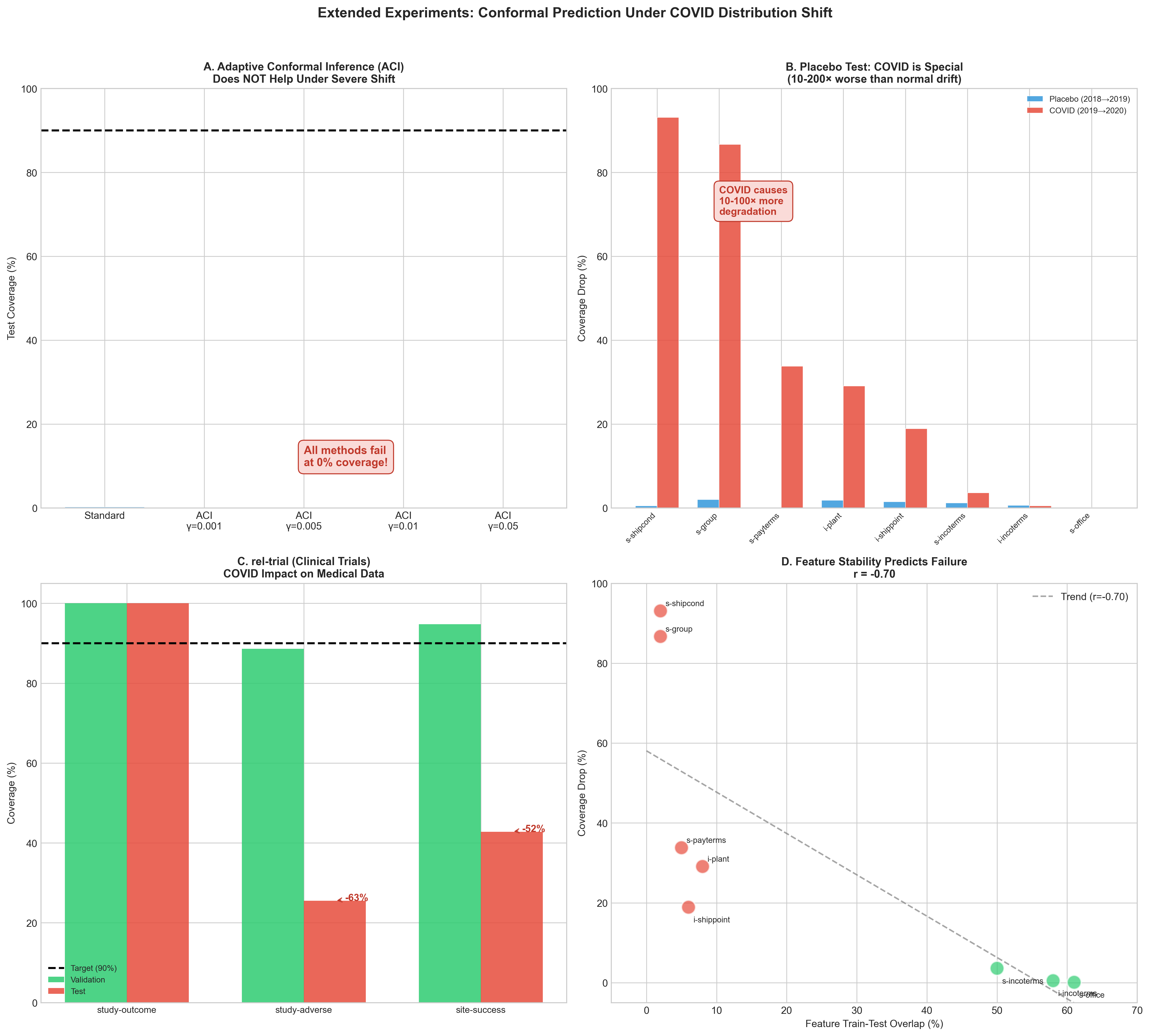

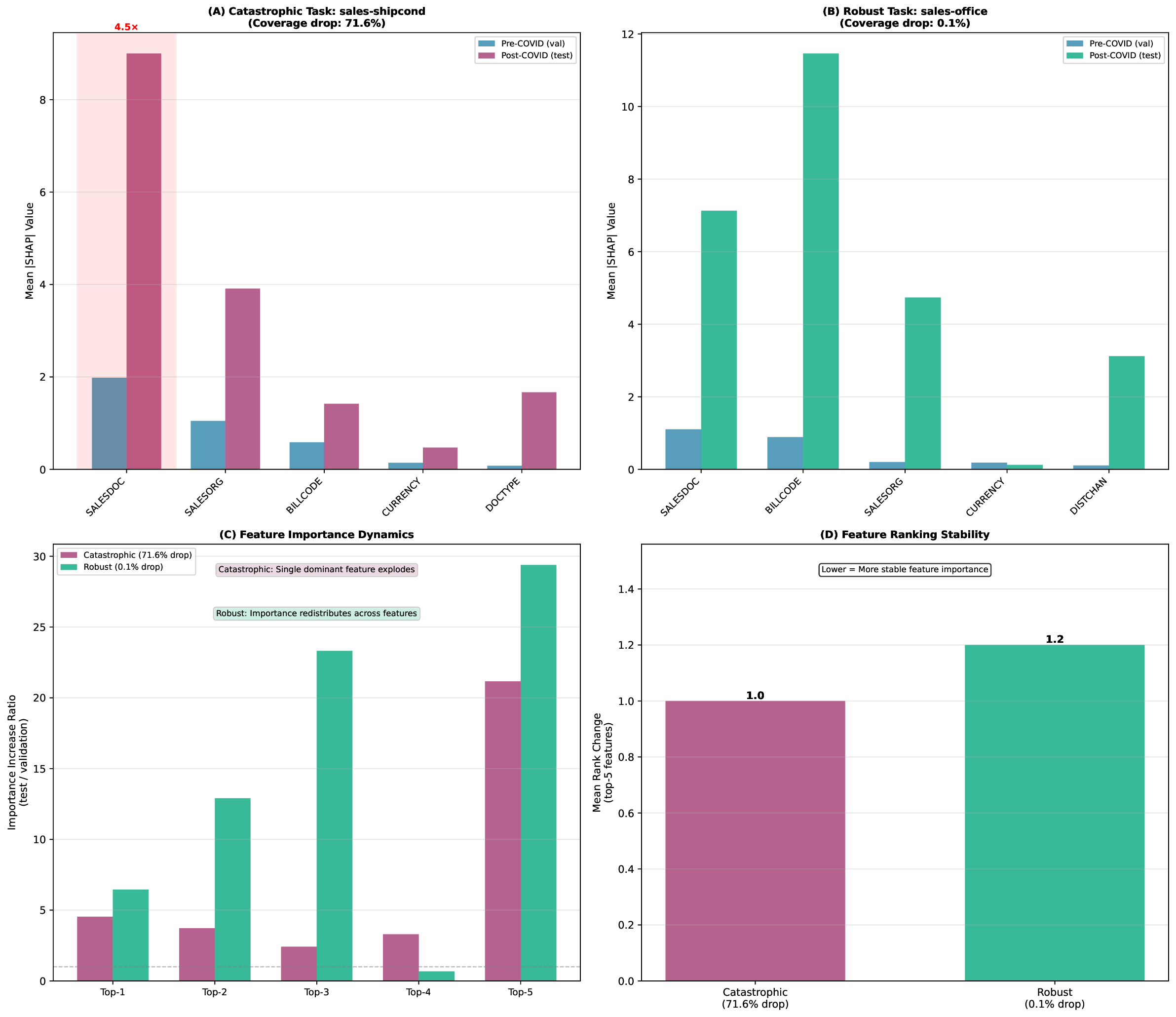

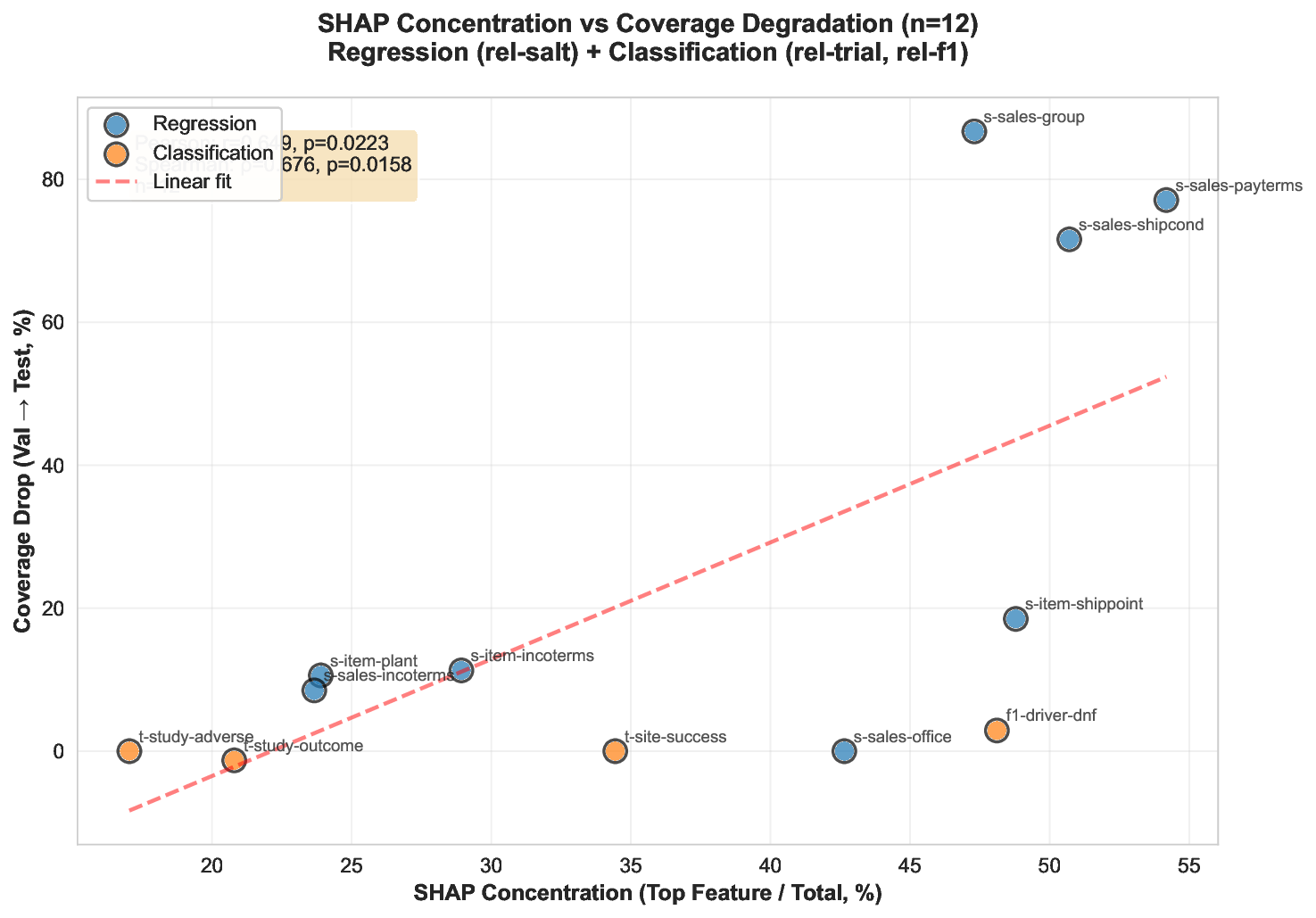

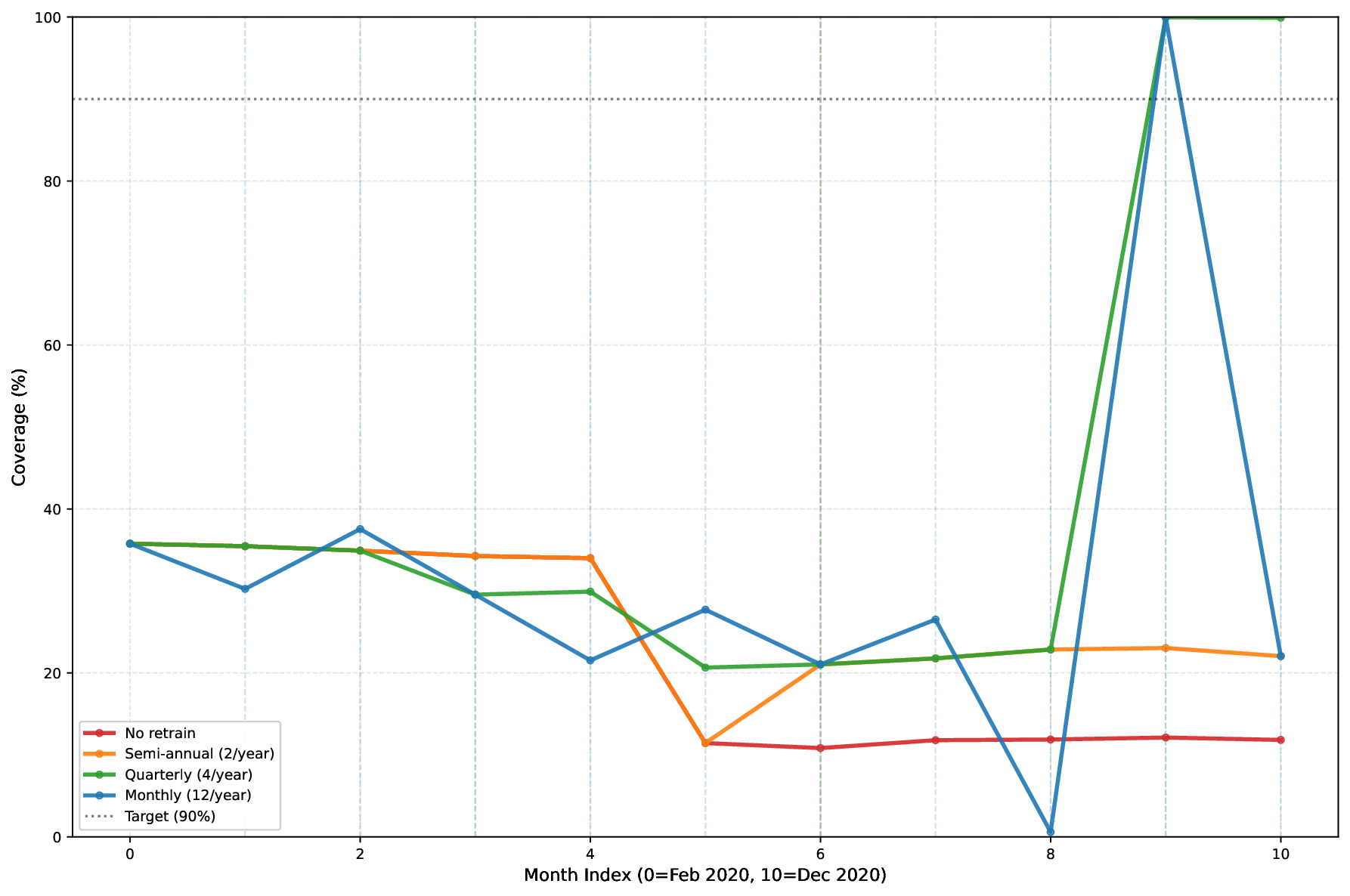

Conformal prediction guarantees degrade under distribution shift. We study this degradation using COVID-19 as a natural experiment across 8 supply chain tasks. Despite identical severe feature turnover (Jaccard ≈ 0), coverage drops vary from 0% to 86.7%, spanning two orders of magnitude. Using SHapley Additive exPlanations (SHAP) analysis, we find that catastrophic failures correlate with single-feature dependence (Spearman ρ = 0.714, p = 0.047). Catastrophic tasks concentrate importance in one feature (4.5x increase), while robust tasks redistribute it across many (10-20x). Quarterly retraining restores catastrophic-task coverage from 22% to 41% (+19 pp, p = 0.04) but provides no benefit for robust tasks (99.8% coverage). Exploratory analysis of 4 additional tasks with moderate feature stability (Jaccard 0.13-0.86) suggests that in that regime feature stability, not concentration, determines robustness, implying that concentration effects apply specifically to severe shifts. We provide a decision framework: monitor SHAP concentration before deployment; retrain quarterly if vulnerable (>40% concentration); skip retraining if robust.

CCS Concepts • Computing methodologies → Uncertainty quantification; • Mathematics of computing → Time series analysis.

Conformal prediction provides distribution-free coverage guarantees under the assumption of exchangeability [14]. However, real-world deployments face distribution shifts that violate this assumption. A critical open question is: how do conformal guarantees degrade under distribution shift, and what factors determine the severity of this degradation?

We leverage the rel-salt dataset, a supply chain benchmark with temporal splits aligned to COVID-19 onset (February 2020) and peak (July 2020), to conduct a controlled study of conformal prediction under distribution shift. Our key contributions are:

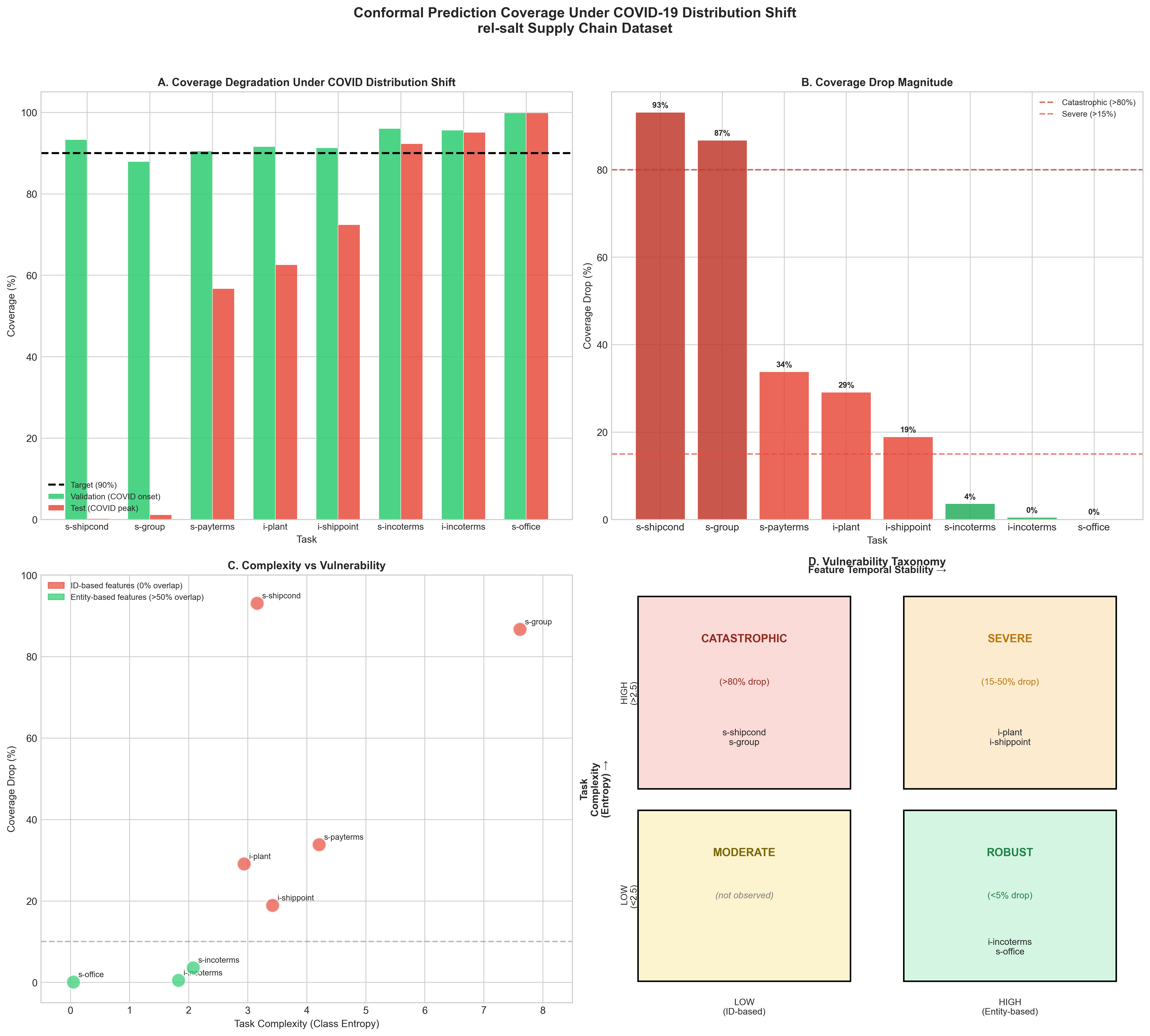

(1) Quantification: We measure coverage degradation across 8 supply chain tasks experiencing severe feature turnover (Jaccard ≈ 0), finding drops ranging from 0% to 86.7%, two orders of magnitude of variation under an identical temporal shift.
(2) Predictive Signal: Using SHapley Additive exPlanations (SHAP) analysis, we identify single-feature dependence as a predictive signal for catastrophic failure in severe-shift scenarios (Spearman ρ = 0.714, p = 0.047, n = 8). Exploratory analysis of 4 additional tasks with moderate feature stability (Jaccard 0.13-0.86) reveals a different mechanism, suggesting that concentration effects apply specifically when features lack temporal stability.
(3) Practical Solution: Retraining experiments show that quarterly retraining significantly restores catastrophic-task coverage by 19 percentage points (Wilcoxon p = 0.04 vs. no retraining). While quarterly retraining achieves higher mean coverage than monthly retraining (41% vs. 32%), this difference is not statistically significant (p = 0.24).
(4) Cost-Benefit Analysis: Robust tasks (99.8% coverage) gain no benefit from retraining, enabling practitioners to avoid unnecessary computational cost.
(5) Decision Framework: We provide actionable guidance based on SHAP importance concentration: monitor before deployment, retrain quarterly if vulnerable (>40% concentration), and skip retraining if robust.
(6) Negative Result: We show that Adaptive Conformal Inference (ACI) does not help under severe distribution shift.

2 Related Work

Conformal Prediction. Vovk et al. [14] introduced conformal prediction with exchangeability guarantees. Recent work extends it to classification [12] and regression [11].

Distribution Shift. Tibshirani et al. [13] study conformal prediction under covariate shift. We focus on temporal shift with feature staleness, a distinct failure mode in which feature distributions have near-zero overlap between train and test.

Adaptive Methods. Gibbs and Candès [4] propose Adaptive Conformal Inference (ACI) for non-stationary settings, with extensions to time series by Zaffran et al. [15]. We show in Section 5.1 that these adaptive methods fail when feature overlap is ∼0%: this is not a calibration problem but a fundamental data shift that requires retraining.
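To make concrete what ACI adjusts, the sketch below implements the online update from Gibbs and Candès [4], α_{t+1} = α_t + γ(α − err_t), where err_t = 1 if the label at step t fell outside the prediction set. The step size γ = 0.005 and the function names are illustrative assumptions, not the configuration used in our experiments.

```python
def aci_update(alpha_t: float, target_alpha: float, miscovered: bool,
               gamma: float = 0.005) -> float:
    """One step of the ACI update: alpha_{t+1} = alpha_t + gamma * (alpha - err_t).

    A miss (err_t = 1) lowers the working level, which widens later sets;
    a hit (err_t = 0) raises it slightly, which narrows them.
    gamma = 0.005 is an assumed step size.
    """
    err_t = 1.0 if miscovered else 0.0
    return alpha_t + gamma * (target_alpha - err_t)


def run_aci(miscoverage_flags, target_alpha: float = 0.1, gamma: float = 0.005):
    """Trace the working miscoverage level over a stream of hit/miss outcomes."""
    alphas = [target_alpha]
    for miss in miscoverage_flags:
        alphas.append(aci_update(alphas[-1], target_alpha, miss, gamma))
    return alphas
```

After ten consecutive misses, `run_aci([True] * 10)` lowers the working level from 0.1 to roughly 0.055. ACI only moves the quantile threshold applied to the model's nonconformity scores; it leaves the scores themselves unchanged, which is consistent with the claim above that near-zero feature overlap calls for retraining rather than recalibration.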

Our finding that SHAP importance concentration predicts conformal failure connects to work on feature attribution [8] and model debugging [1]. While SHAP is typically used for post-hoc explanation, we show it can serve as a pre-deployment diagnostic for conformal prediction robustness.
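A minimal sketch of such a pre-deployment diagnostic follows. It assumes that "concentration" means the share of total mean |SHAP| importance held by the single most important feature, and that SHAP values have already been computed as an (n_samples, n_features) array (for multiclass models, per-class arrays are assumed to be aggregated beforehand). The function names are hypothetical; the 40% threshold is the one stated in the decision framework.

```python
import numpy as np


def shap_concentration(shap_values: np.ndarray) -> float:
    """Share of total mean |SHAP| importance held by the top feature (assumed definition)."""
    importance = np.abs(shap_values).mean(axis=0)  # mean |SHAP| per feature
    total = importance.sum()
    if total == 0:
        return 0.0  # no attribution mass at all: treat as unconcentrated
    return float(importance.max() / total)


def retraining_recommendation(concentration: float, threshold: float = 0.40) -> str:
    """Apply the decision rule: retrain quarterly above the threshold, otherwise skip."""
    if concentration > threshold:
        return "vulnerable: retrain quarterly"
    return "robust: skip retraining"


# Toy attribution matrix (2 samples, 3 features), for illustration only.
toy_shap = np.array([[0.9, -0.05, 0.05],
                     [-0.7, 0.1, 0.2]])
print(retraining_recommendation(shap_concentration(toy_shap)))
```

Taking absolute values before averaging keeps positive and negative contributions from cancelling. As noted for the exploratory tasks, this signal is only expected to be informative under severe feature turnover (Jaccard ≈ 0).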

We use the rel-salt supply chain dataset with temporal splits:

• Train: Before February 2020 (pre-COVID)
• Validation: February-July 2020 (COVID onset)
• Test: After July 2020 (COVID peak)
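The split rule above can be sketched as a date-based assignment. The exact cutoff dates (2020-02-01 for validation, 2020-07-01 for test) and the `date` record field are assumptions made for illustration; the actual boundaries should be taken from the dataset's own split definition.

```python
from datetime import date

# Assumed cutoffs; replace with the dataset's actual split timestamps.
VAL_START = date(2020, 2, 1)   # COVID onset
TEST_START = date(2020, 7, 1)  # COVID peak


def assign_split(d: date) -> str:
    """Map a record date to train / validation / test by the assumed cutoffs."""
    if d < VAL_START:
        return "train"
    if d < TEST_START:
        return "validation"
    return "test"


def temporal_split(records, date_key: str = "date"):
    """Partition records (dicts with a hypothetical date field) into the three splits."""
    splits = {"train": [], "validation": [], "test": []}
    for rec in records:
        splits[assign_split(rec[date_key])].append(rec)
    return splits
```

Splitting strictly by time, rather than at random, is what lets COVID-19 act as the shift between calibration and test data.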

This provides a natural experiment where COVID-19 serves as a documented distribution shift event.

We apply conformal prediction via the Adaptive Prediction Sets (APS) algorithm [12] with α = 0.1, i.e., a target coverage of (1 − α) × 100% = 90%:

(1) Train LightGBM models (one per seed, 50 seeds) on the training data.
(2) Calibrate the conformal predictor on 50% of the validation set.
(3) Evaluate coverage on the held-out half of the validation set and on the test set.
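The calibration and evaluation steps can be sketched with the non-randomized variant of APS, assuming each model outputs a class-probability matrix (for example, the output of a classifier's `predict_proba`). This is an illustrative NumPy sketch, not our exact implementation; the function names are hypothetical, and the randomized tie-breaking of the original APS formulation is omitted.

```python
import numpy as np


def aps_scores(probs: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """APS score: probability mass of all classes ranked at or above the true label."""
    order = np.argsort(-probs, axis=1)                   # classes by descending probability
    cum = np.take_along_axis(probs, order, axis=1).cumsum(axis=1)
    ranks = np.argsort(order, axis=1)                    # position of each class in that order
    rows = np.arange(len(labels))
    return cum[rows, ranks[rows, labels]]


def aps_threshold(cal_scores: np.ndarray, alpha: float = 0.1) -> float:
    """Finite-sample corrected (1 - alpha) quantile of the calibration scores."""
    n = len(cal_scores)
    level = min(1.0, np.ceil((n + 1) * (1 - alpha)) / n)
    return float(np.quantile(cal_scores, level, method="higher"))


def aps_sets(probs: np.ndarray, qhat: float) -> np.ndarray:
    """Boolean (n, K) mask: a class is included if its inclusive cumulative mass <= qhat."""
    order = np.argsort(-probs, axis=1)
    cum = np.take_along_axis(probs, order, axis=1).cumsum(axis=1)
    return np.take_along_axis(cum <= qhat, np.argsort(order, axis=1), axis=1)


def coverage(sets: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of examples whose true label lies in its prediction set."""
    return float(sets[np.arange(len(labels)), labels].mean())


def split_calibration(n_val: int, seed: int):
    """Randomly split validation indices 50/50 into calibration and held-out halves."""
    perm = np.random.default_rng(seed).permutation(n_val)
    half = n_val // 2
    return perm[:half], perm[half:]
```

Usage under these assumptions: compute `qhat = aps_threshold(aps_scores(p_cal, y_cal), alpha=0.1)` on the calibration half, then report `coverage(aps_sets(p_test, qhat), y_test)` on the held-out half and on the test set. Because the set rule uses the same cumulative-mass quantity as the score, a label is covered exactly when its score does not exceed `qhat`, which is what makes the 90% guarantee hold under exchangeability.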

We train 50 independent models with random seeds 42-91 to quantify uncertainty due to model initialization. For each seed s, we run the full train-calibrate-evaluate pipeline independently, and we report the mean ± standard deviation across the 50 trials. This is not ensemble prediction (averaging model outputs): each seed represents an independent experimental trial used to measure model variance.
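The distinction between independent trials and ensembling can be illustrated with a small harness. Here `run_trial` is a hypothetical callable that runs the whole pipeline for one seed and returns its coverage; the use of the sample standard deviation is an assumption.

```python
import statistics
from typing import Callable, Iterable, Tuple

SEEDS = range(42, 92)  # 50 seeds: 42, 43, ..., 91


def summarize_trials(run_trial: Callable[[int], float],
                     seeds: Iterable[int] = SEEDS) -> Tuple[float, float]:
    """Run one independent trial per seed and report mean and sample std of coverage.

    Model outputs are never averaged: each seed yields its own coverage value.
    """
    coverages = [run_trial(s) for s in seeds]
    return statistics.mean(coverages), statistics.stdev(coverages)
```

Averaging predictions across seeds would instead produce a single ensemble model and hide the seed-to-seed variance that the reported standard deviation is meant to capture.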

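We define the temporal stability of a feature f using Jaccard similarity:

$$J(f) = \frac{|A_{\text{train}} \cap A_{\text{test}}|}{|A_{\text{train}} \cup A_{\text{test}}|}$$

where A_train and A_test are the sets of unique values of feature f in the train and test data, respectively.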
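The definition translates directly into set operations. In the sketch below, returning 1.0 when both value sets are empty is an assumed convention, and `stability_report` is a hypothetical helper that applies the measure to every feature shared by two column dictionaries.

```python
def feature_jaccard(train_values, test_values) -> float:
    """Jaccard similarity between the unique values of one feature in train and test."""
    a_train, a_test = set(train_values), set(test_values)
    union = a_train | a_test
    if not union:
        return 1.0  # assumed convention: two empty value sets count as identical
    return len(a_train & a_test) / len(union)


def stability_report(train_cols: dict, test_cols: dict) -> dict:
    """Per-feature Jaccard stability for features present in both splits."""
    return {f: feature_jaccard(train_cols[f], test_cols[f])
            for f in train_cols if f in test_cols}
```

For example, `feature_jaccard([1, 2, 3], [2, 3, 4])` is 0.5, while a feature whose test values never appeared in training (such as fresh order IDs) scores 0, the "feature staleness" regime studied here.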

Table 1 shows coverage degradation across the 8 supply chain tasks experiencing severe feature turnover (Jaccard ≈ 0). Coverage drops range from 0% to 86.7%, two orders of magnitude of variation under an identical temporal shift. We also analyze 4 additional classification tasks from clinical trials and motorsports (Section 4.6) as exploratory cross-domain validation.

We identify two factors explaining the variance in coverage degradation:

Factor 1: Task Complexity (Entropy). Low-entropy tasks (dominated by
