We present MORTAR, a system for autonomously evolving game mechanics for automatic game design. Game mechanics define the rules and interactions that govern gameplay, and designing them manually is a time-consuming and expert-driven process. MORTAR combines a quality-diversity algorithm with a large language model to explore a diverse set of mechanics, which are evaluated by synthesising complete games that incorporate both evolved mechanics and those drawn from an archive. These games are composed through a tree search procedure and scored by their ability to preserve a skill-based ordering over players, that is, whether stronger players consistently outperform weaker ones. We assess each mechanic by its contribution to the skill-based ordering score of the game. We demonstrate that MORTAR produces games that appear diverse and playable, and mechanics that contribute more towards the skill-based ordering score in the game. We perform ablation studies to assess the role of each system component and a user study to evaluate the games based on human feedback.

Procedural content generation (PCG) is a well-studied approach in game design, concerned with the automatic creation of game content such as levels, maps, items and narratives (Shaker et al., 2016; Liu et al., 2021). PCG serves multiple purposes: enabling runtime content generation in games such as roguelikes, providing ideation tools for designers, automating the production of repetitive content, and facilitating research into creativity and design processes. Traditionally, PCG research has focused on structural aspects of games, particularly level or layout generation (Risi & Togelius, 2020), where the goal is to produce environments that are coherent, solvable, and varied.

By contrast, comparatively little attention has been paid to the procedural generation of game mechanics, the underlying rules for interactions that govern gameplay. Yet mechanics play a central role in shaping the player experience, determining not just how players act, but what kinds of strategies and emergent behaviours are possible. Designing mechanics is inherently challenging: unlike levels, which can be evaluated by solvability or novelty, the utility of a mechanic depends on the dynamics it induces within the context of a game. This makes both generation and evaluation significantly harder.

A central premise of this work is that evaluating game mechanics is fundamentally more difficult than evaluating assets or level layouts. Unlike these forms of content, a mechanic cannot be judged in isolation; it only gains meaning through the gameplay it enables. A mechanic that appears novel or complex may still be uninteresting if it does not support skill-based interaction. This insight motivates our approach: effective automation of mechanic design requires not only a generative model, but also a principled way to assess a mechanic’s utility in the context of play.

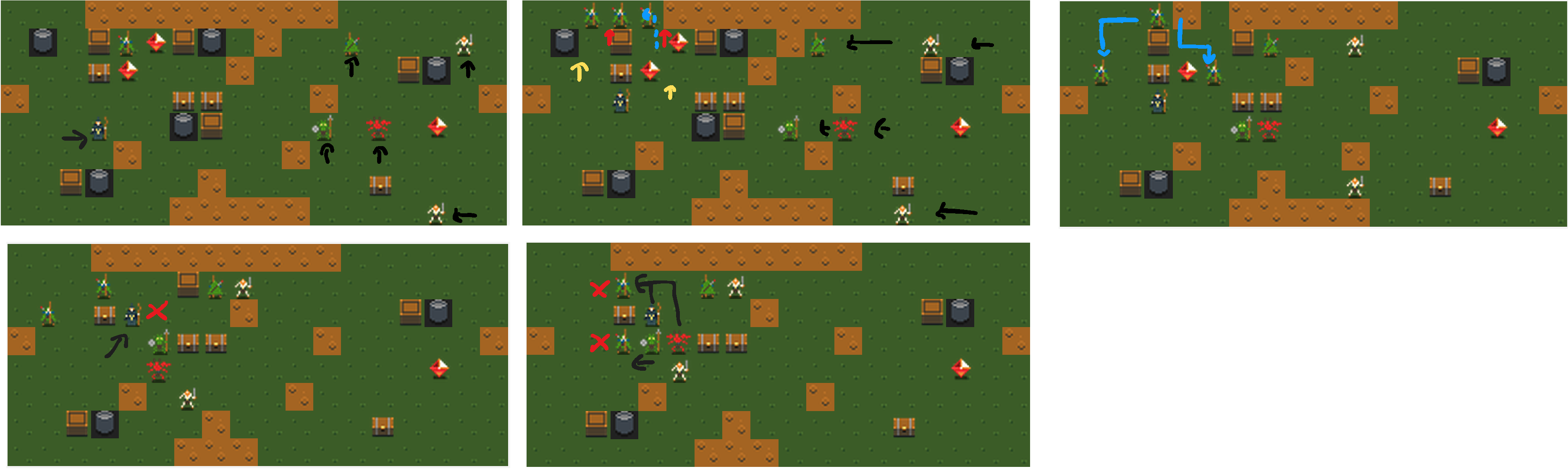

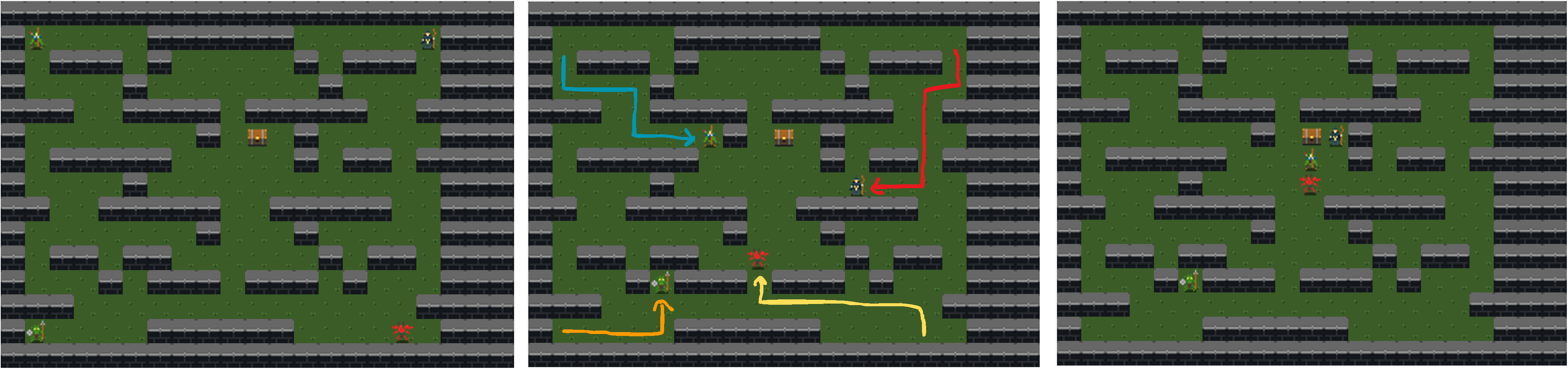

Figure 1: A flow diagram of MORTAR.

We address this challenge by introducing a mechanic-centric framework for automatic game design. The central idea is to evolve mechanics not in isolation, but through their contribution to the quality of full games. Specifically, we evaluate mechanics by constructing complete games around them, and measuring whether the resulting games induce a skill-based ordering over players of different capabilities. This allows us to define a concrete notion of usefulness for a mechanic: its contribution to the overall expressivity and skill gradient of the games in which it appears.
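To make the idea of a skill-based ordering concrete, the sketch below scores a game by how well the observed results of a set of agents agree with their assumed skill ranking. It uses Kendall's tau as the agreement measure, which is an assumption made for illustration; this section does not state which statistic MORTAR uses, and the function name and inputs are hypothetical.

```python
from itertools import combinations


def skill_ordering_score(skill_levels, game_scores):
    """Kendall's tau-a between assumed skill levels and observed game scores.

    Illustrative only: the rank statistic is an assumption, not MORTAR's
    documented measure. Returns a value in [-1, 1], where 1 means every
    stronger agent outscored every weaker one and -1 means the order is
    fully reversed.
    """
    if len(skill_levels) != len(game_scores):
        raise ValueError("one score is required per agent")
    pairs = list(combinations(range(len(skill_levels)), 2))
    if not pairs:
        raise ValueError("at least two agents are required")

    concordant = 0
    discordant = 0
    for i, j in pairs:
        # A pair is concordant when the stronger agent also scored higher.
        product = (skill_levels[i] - skill_levels[j]) * (game_scores[i] - game_scores[j])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
        # Ties in skill or score count as neither.
    return (concordant - discordant) / len(pairs)
```

Under this sketch, a game in which all agents obtain the same score receives 0, which matches the intuition that such a game does not distinguish players by skill.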

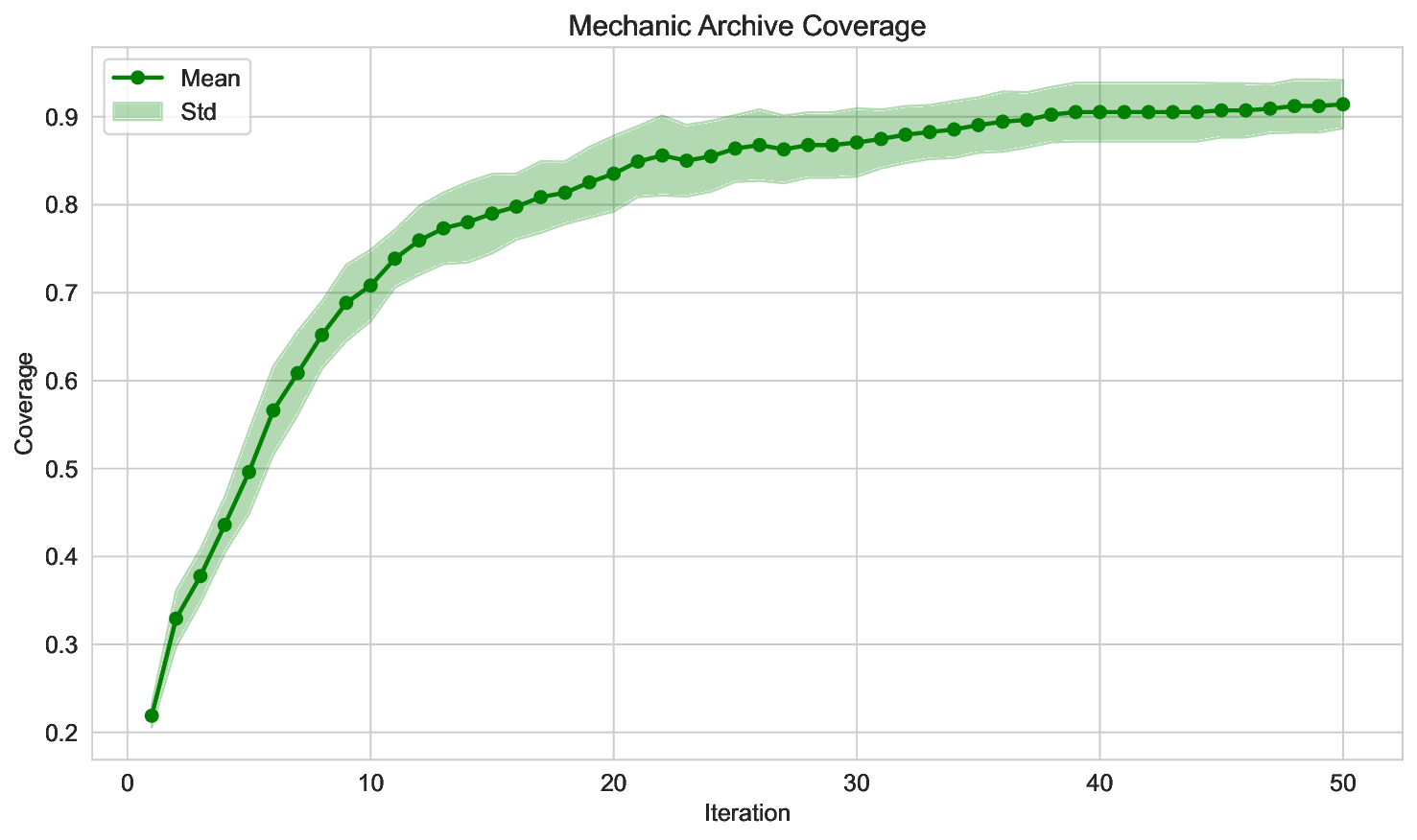

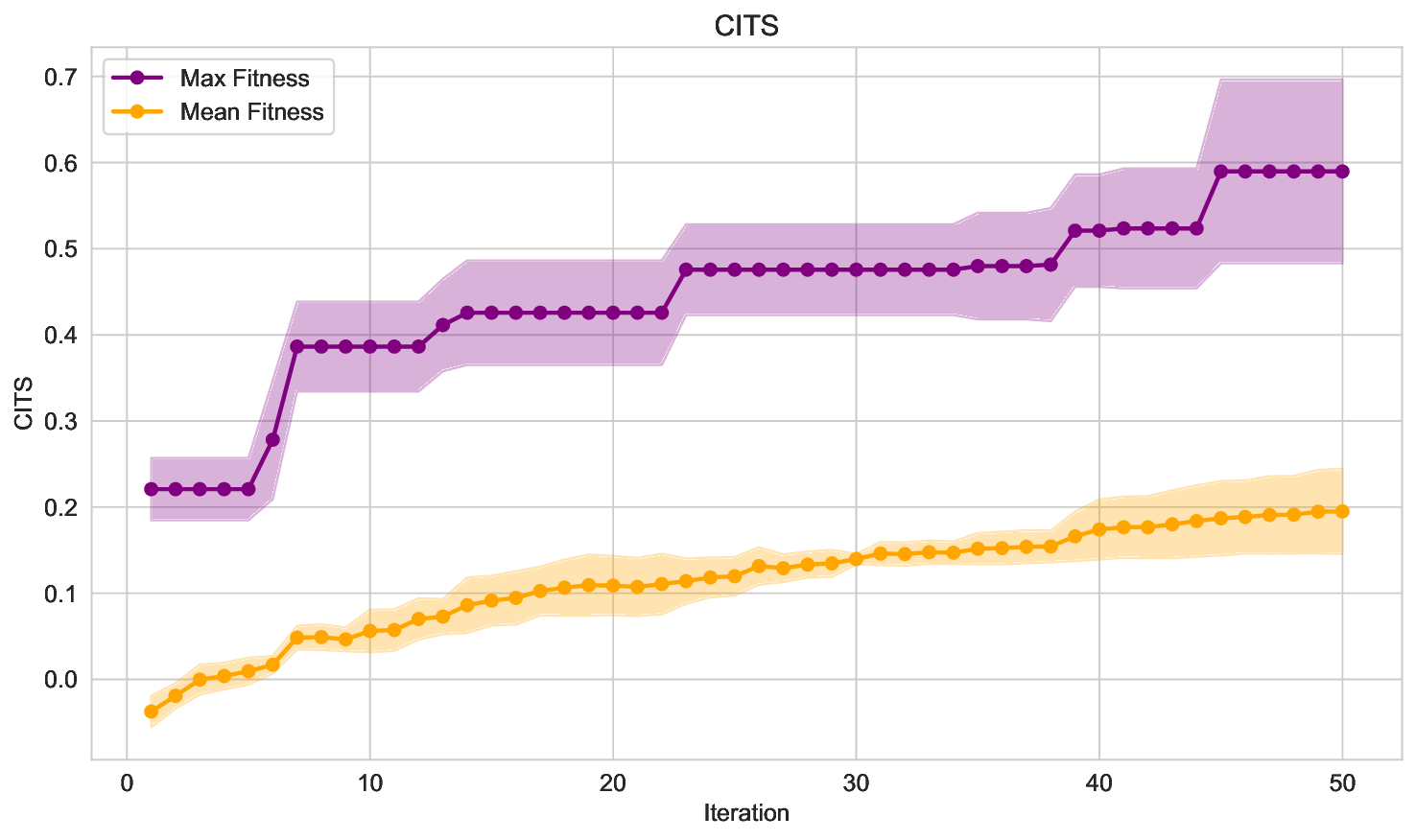

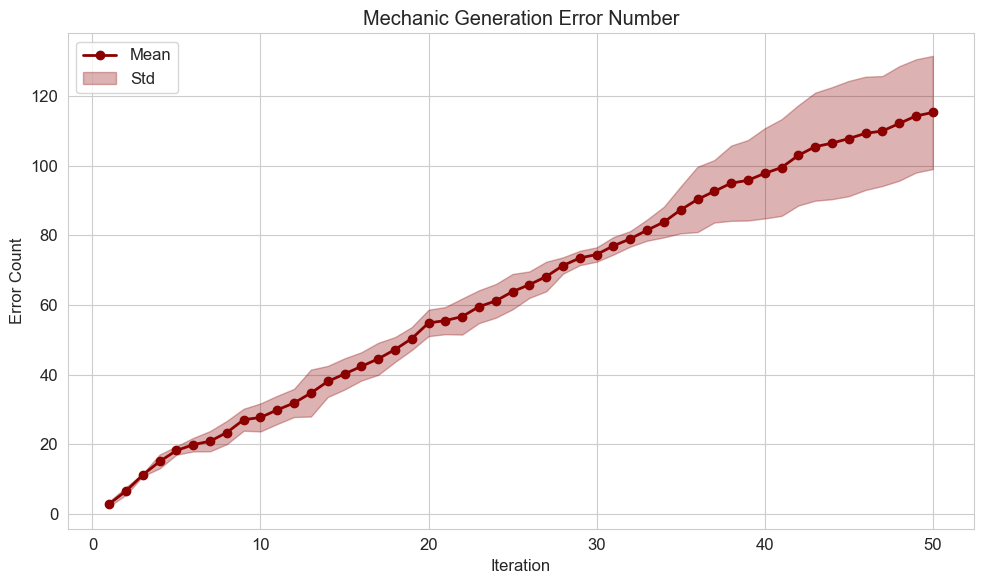

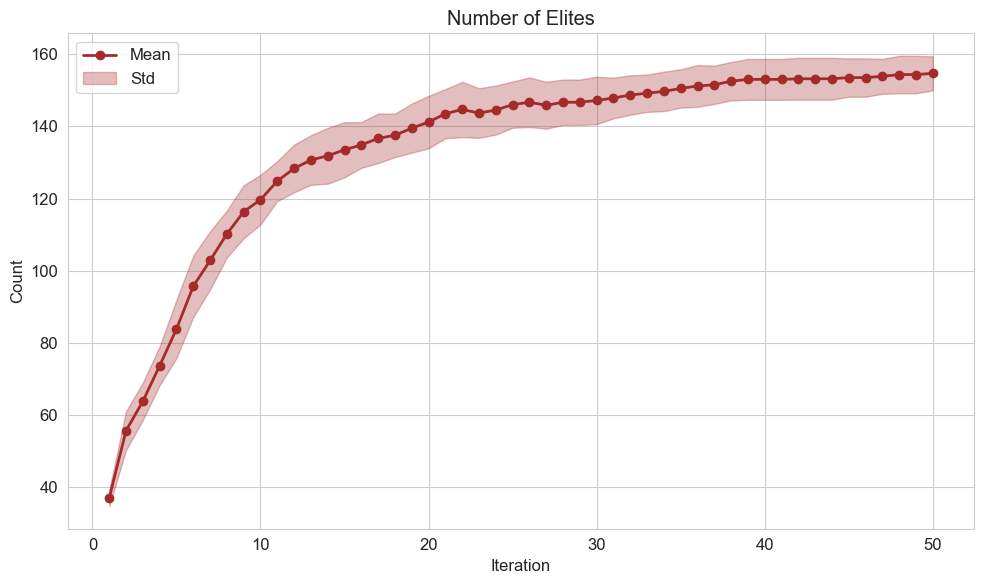

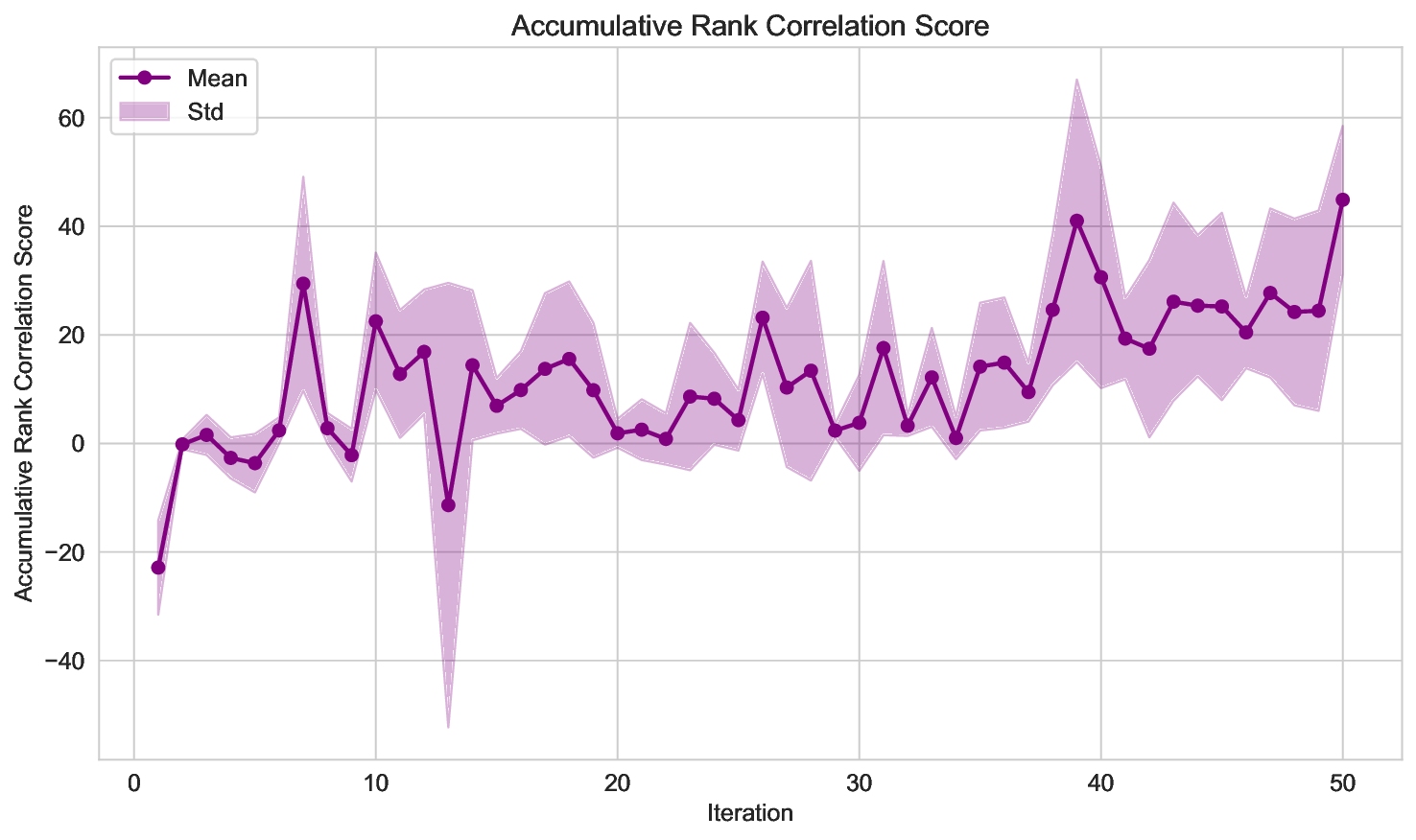

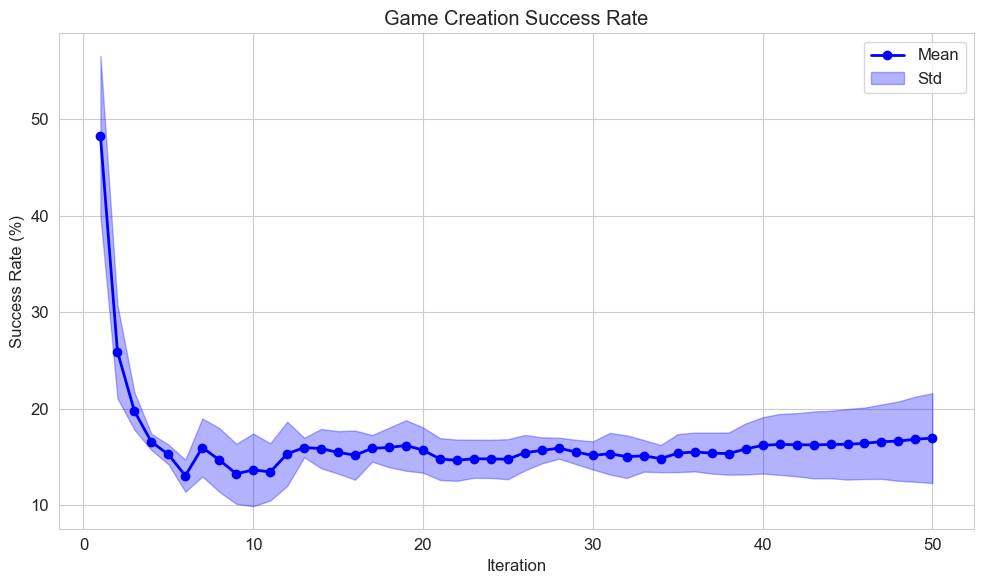

We introduce MORTAR, a system that evolves game mechanics using a quality-diversity algorithm guided by a large language model (LLM). MORTAR maintains a diverse archive of mechanics, represented as code snippets, which are mutated and recombined through LLM-driven evolutionary operators. Each evolved mechanic is evaluated by embedding it into full games constructed via Monte Carlo Tree Search, which incrementally builds games by composing mechanics from the archive. These games are evaluated based on their ability to induce a consistent skill-based ranking over a fixed set of agents. We define a novel fitness measure, which quantifies the contribution of a mechanic to the final game’s skill-based ordering, inspired by Shapley values (Shapley, 2016).
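For readers unfamiliar with Shapley values, the following sketch computes the classical Shapley value of each mechanic in a small set, given a function that scores the game built from any subset of mechanics. It is a textbook formulation shown for intuition, not MORTAR's fitness measure, which is only described here as inspired by Shapley values. The names `shapley_values`, `game_value`, and `toy_value`, the mechanic labels, and the toy scores are all hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(mechanics, game_value):
    """Exact Shapley value of each mechanic under `game_value`.

    `game_value` maps a frozenset of mechanics to a score, for example a
    skill-based ordering score of the game built from that subset.
    The cost is exponential in the number of mechanics, so this is only
    practical for small sets.
    """
    n = len(mechanics)
    values = {}
    for mechanic in mechanics:
        others = [m for m in mechanics if m != mechanic]
        total = 0.0
        for k in range(n):  # k = size of the coalition without `mechanic`
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                without = frozenset(subset)
                marginal = game_value(without | {mechanic}) - game_value(without)
                total += weight * marginal
        values[mechanic] = total
    return values


def toy_value(subset):
    """Hypothetical scores: 'move' and 'shoot' help alone and more together."""
    score = 0.0
    if "move" in subset:
        score += 0.2
    if "shoot" in subset:
        score += 0.3
    if "move" in subset and "shoot" in subset:
        score += 0.4  # synergy between the two mechanics
    return score


contributions = shapley_values(["move", "shoot", "jump"], toy_value)
```

In this toy example the synergy bonus is split evenly between `move` and `shoot`, `jump` receives zero because it never changes the score, and the three contributions sum to the score of the full game.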

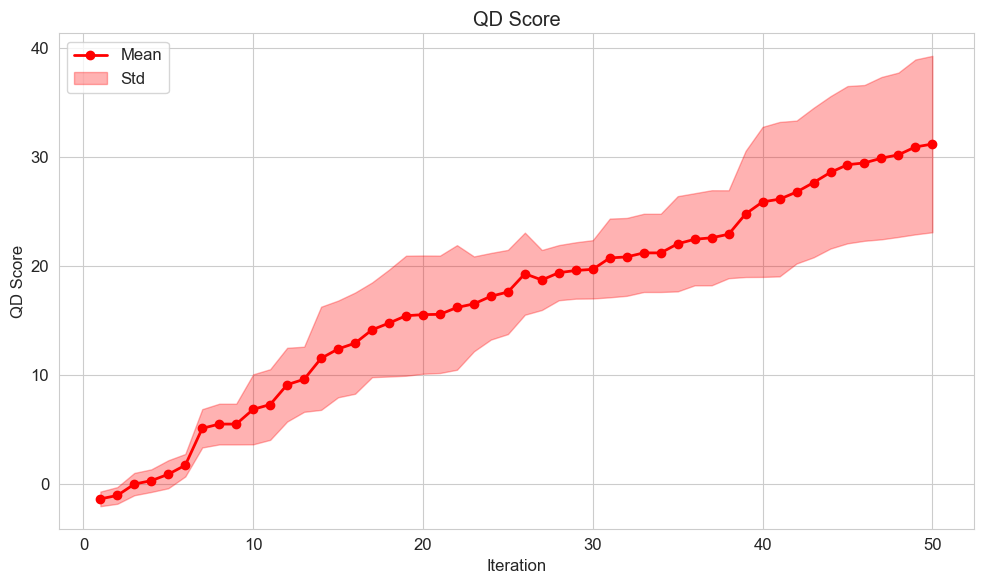

We demonstrate that MORTAR can evolve a diverse set of game mechanics that contribute to the quality and playability of the generated games. The resulting games exhibit coherent structure, varied interaction patterns, and meaningful skill gradients. Through ablations, we show that both the tree-search-based composition and the LLM-driven mutations are critical for generating high-quality mechanics. Our results highlight the potential for using LLMs not only as generators, but as evaluators and collaborators in the game design loop. Compared to existing work on game generation, MORTAR is the first system to apply an LLM-driven quality-diversity method to video game generation, which is a significantly different problem from board game generation; we also show that it is possible to do this in code space without a domain-specific language, and introduce a novel fitness measure.

The system described here is a research prototype for the purposes of understanding how to best generate complementary game mechanics. However, it could also serve as an ideation tool for game designers, suggesting new mechanics and mechanic combinations, perhaps in response to designer input. It is not meant to generate complete games, and aims to empower rather than replace game designers.

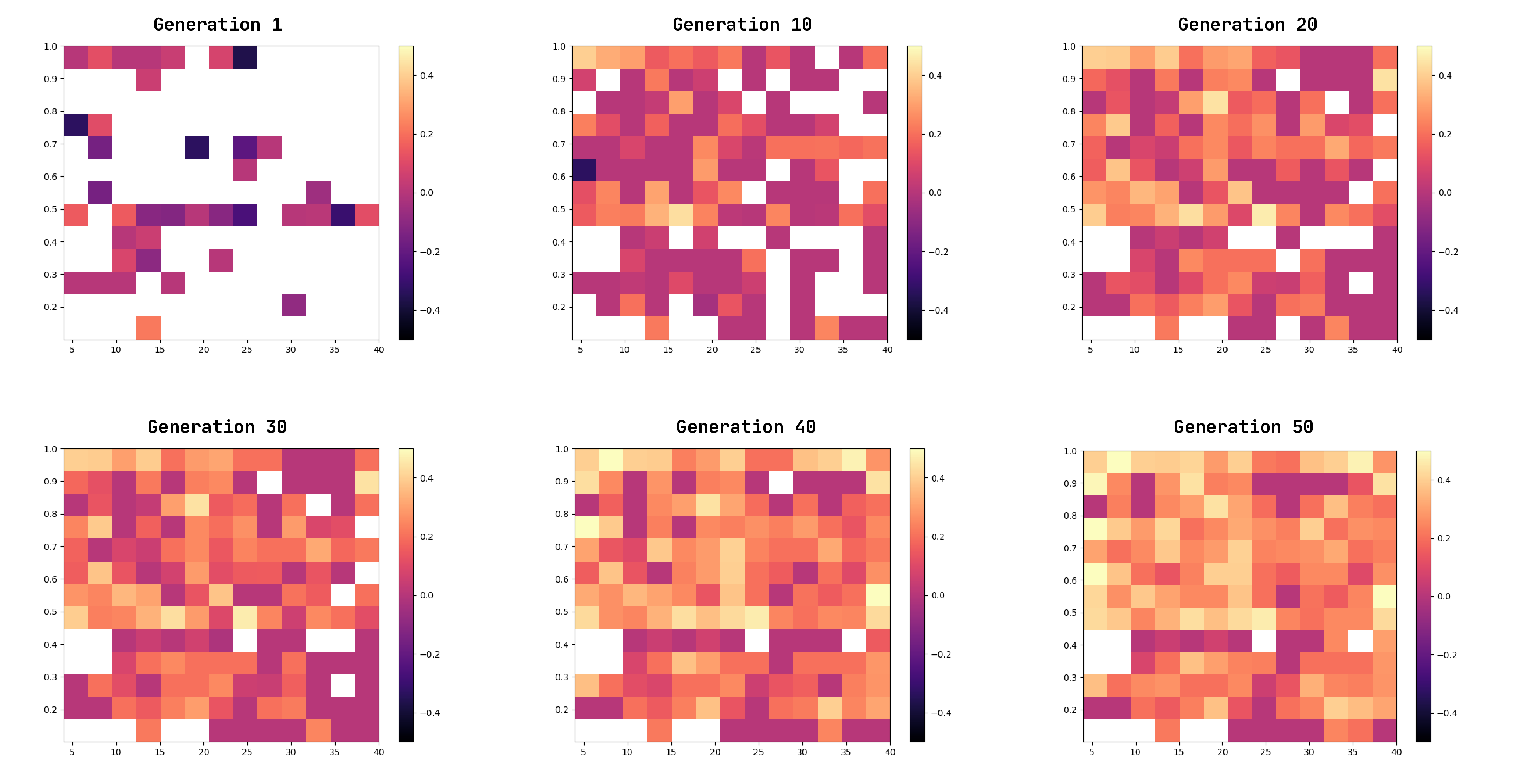

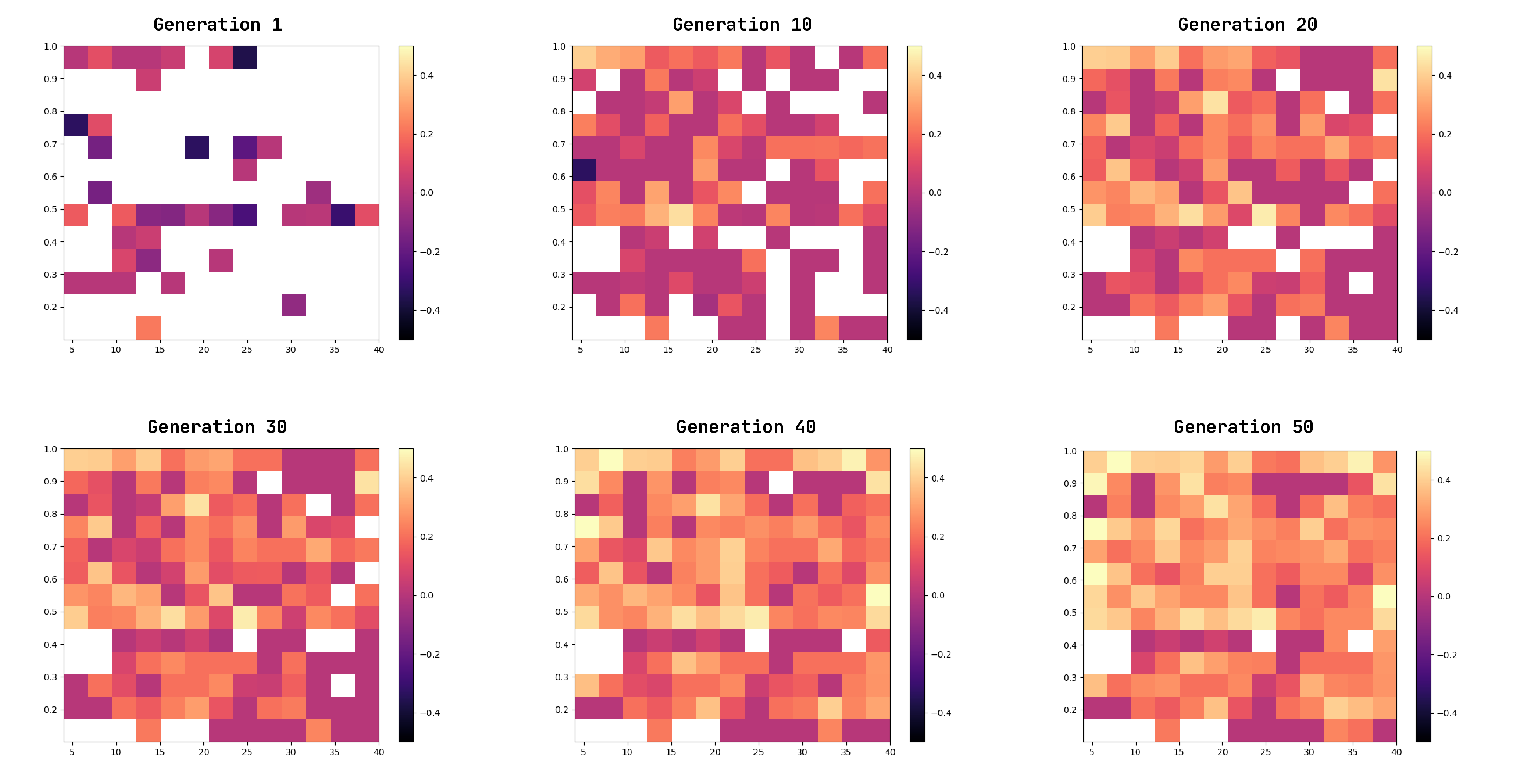

MORTAR employs a Quality-Diversity (QD) algorithm, using a fixed 2D archive (as in MAP-Elites (Mouret & Clune, 2015)) to store and explore diverse game mechanics. We refer to this structure as the Mechanics Archive. Each mechanic is represented as a Python function belonging to a game class, and placed in the archive based on two behavioural descriptors:

- Mechanic Type: A categorical descriptor indicating the gameplay behaviour the mechanic enables. We define 8 m
