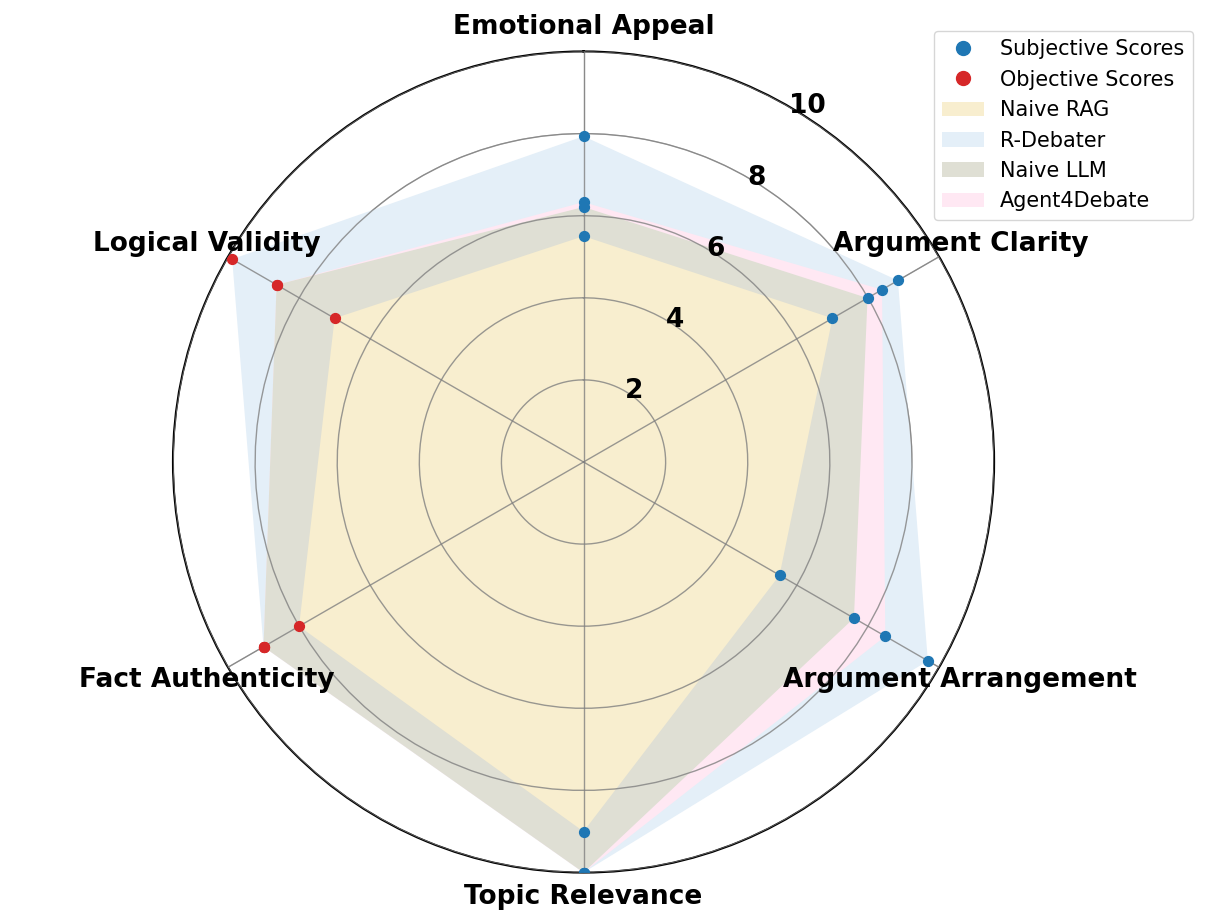

We present R-Debater, an agentic framework for generating multi-turn debates built on argumentative memory. Grounded in rhetoric and memory studies, the system views debate as a process of recalling and adapting prior arguments to maintain stance consistency, respond to opponents, and support claims with evidence. Specifically, R-Debater integrates a debate knowledge base for retrieving case-like evidence and prior debate moves with a role-based agent that composes coherent utterances across turns. We evaluate on standardized ORCHID debates, constructing a 1,000-item retrieval corpus and a held-out set of 32 debates across seven domains. Two tasks are evaluated: next-utterance generation, assessed by InspireScore (subjective, logical, and factual), and adversarial multi-turn simulations, judged by Debatrix (argument, source, language, and overall). Compared with strong LLM baselines, R-Debater achieves higher single-turn and multi-turn scores. Human evaluation with 20 experienced debaters further confirms its consistency and evidence use, showing that combining retrieval grounding with structured planning yields more faithful, stance-aligned, and coherent debates across turns. Code and supplementary materials are available at https://anonymous.4open.science/r/R-debater-E87F/.

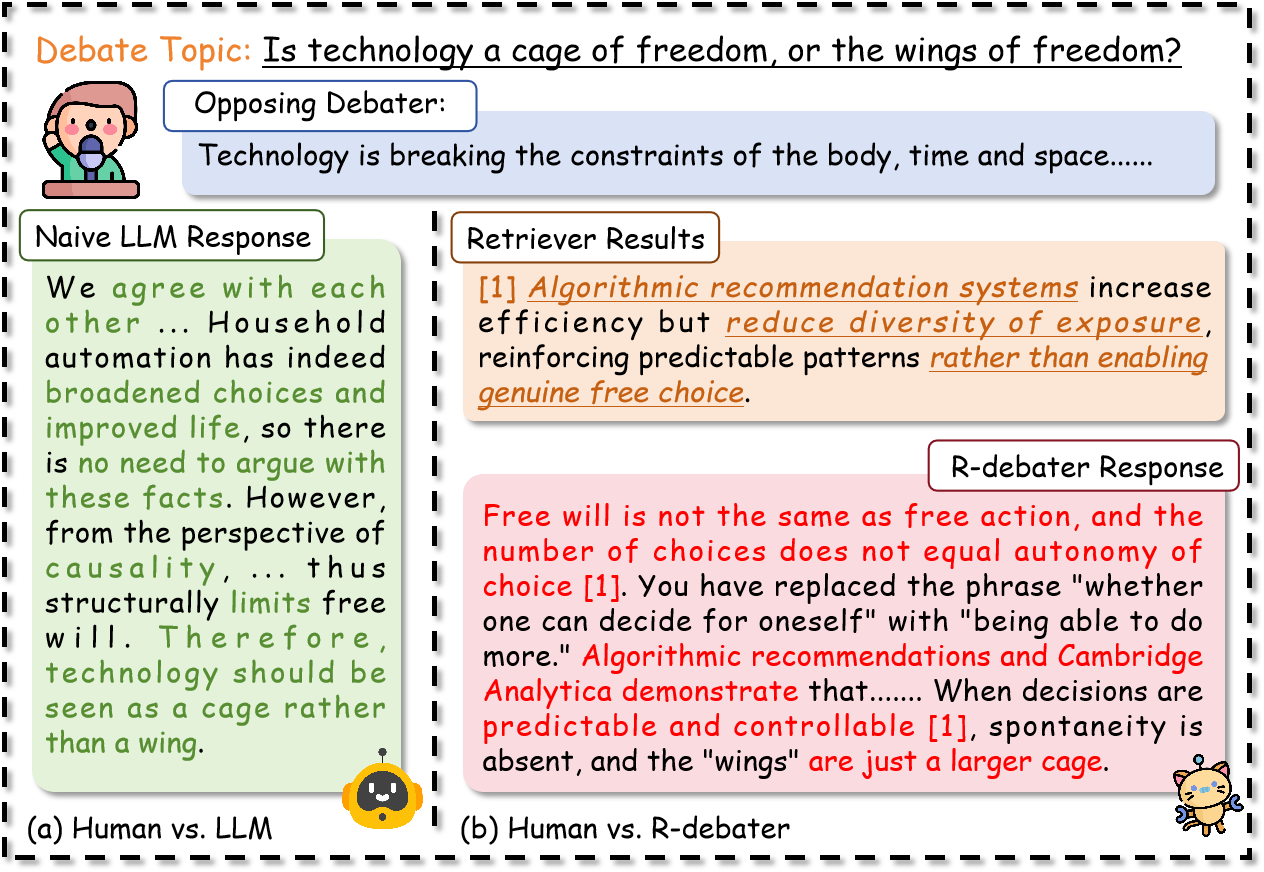

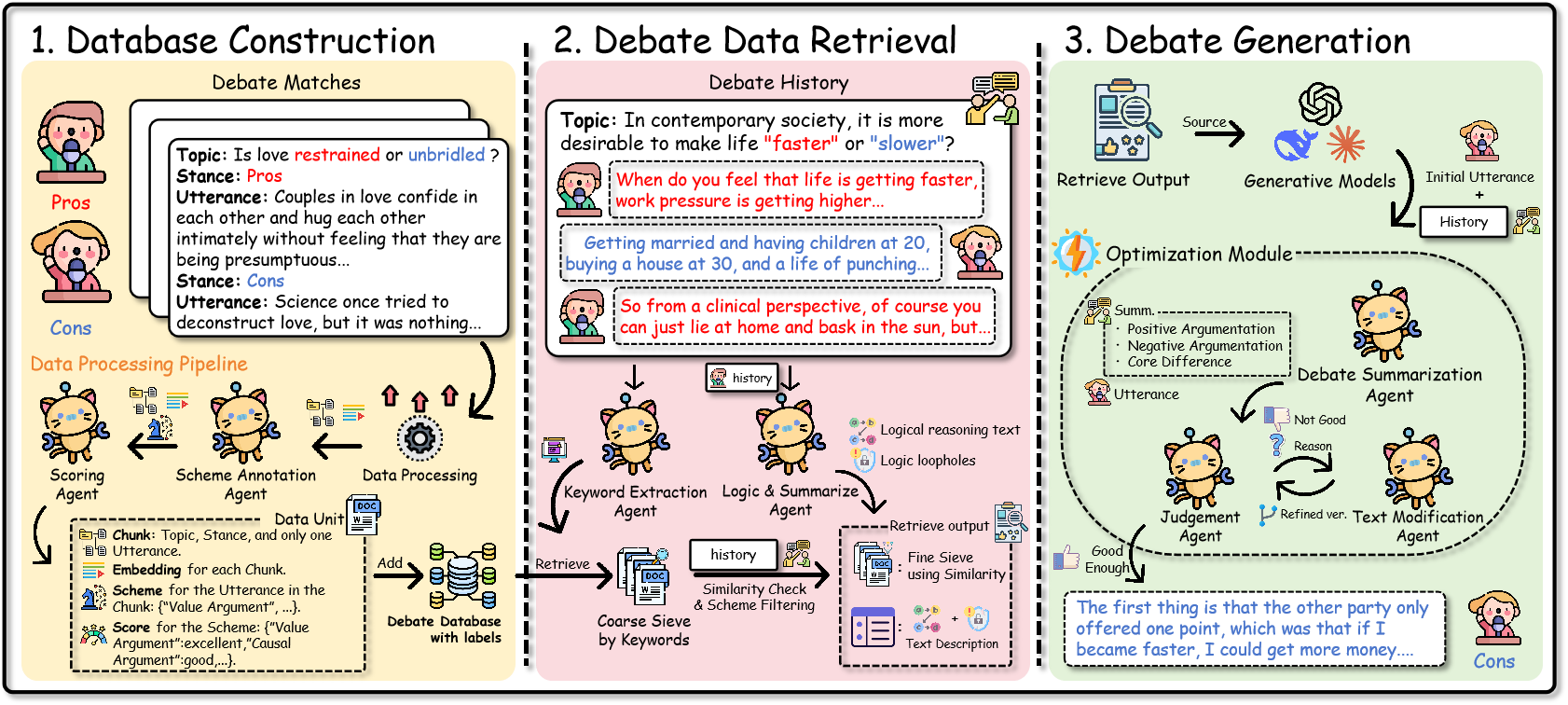

Competitive debate distills public reasoning into a structured, adversarial setting where speakers must plan multi-turn rhetoric, maintain stance, and ground claims in verifiable evidence. Prior work, from end-to-end debating systems (e.g., Project Debater [37]) to a mature literature on argument mining [22], has advanced pipelines for discovering and organizing arguments. Meanwhile, Large Language Models (LLMs) have markedly improved open-ended dialogue and text generation [21,33], but when faced with competitive debate, their outputs often remain fluent yet shallow: they are insufficiently grounded, weak on stance fidelity, and brittle over many turns. Debate generation should therefore focus on the capacities that make argumentation stance-faithful and durable across turns.

Building on this insight, we treat argumentation not only as a computational task but as a cognitive and rhetorical process grounded in human memory and discourse. As Vitale notes, public argumentation operates through a rhetorical-argumentative memory that recycles and reformulates prior persuasive strategies in new situations [44]. Likewise, Aleida Assmann, in her acclaimed theory of cultural memory, conceptualizes collective memory as a dialogic, dynamic reconstruction of past discourses rather than a static archive [4]. These perspectives motivate our central premise: effective debate generation should retrieve and recontextualize argumentative memory, producing statements that are grounded in prior reasoning and robust rhetorical patterns.

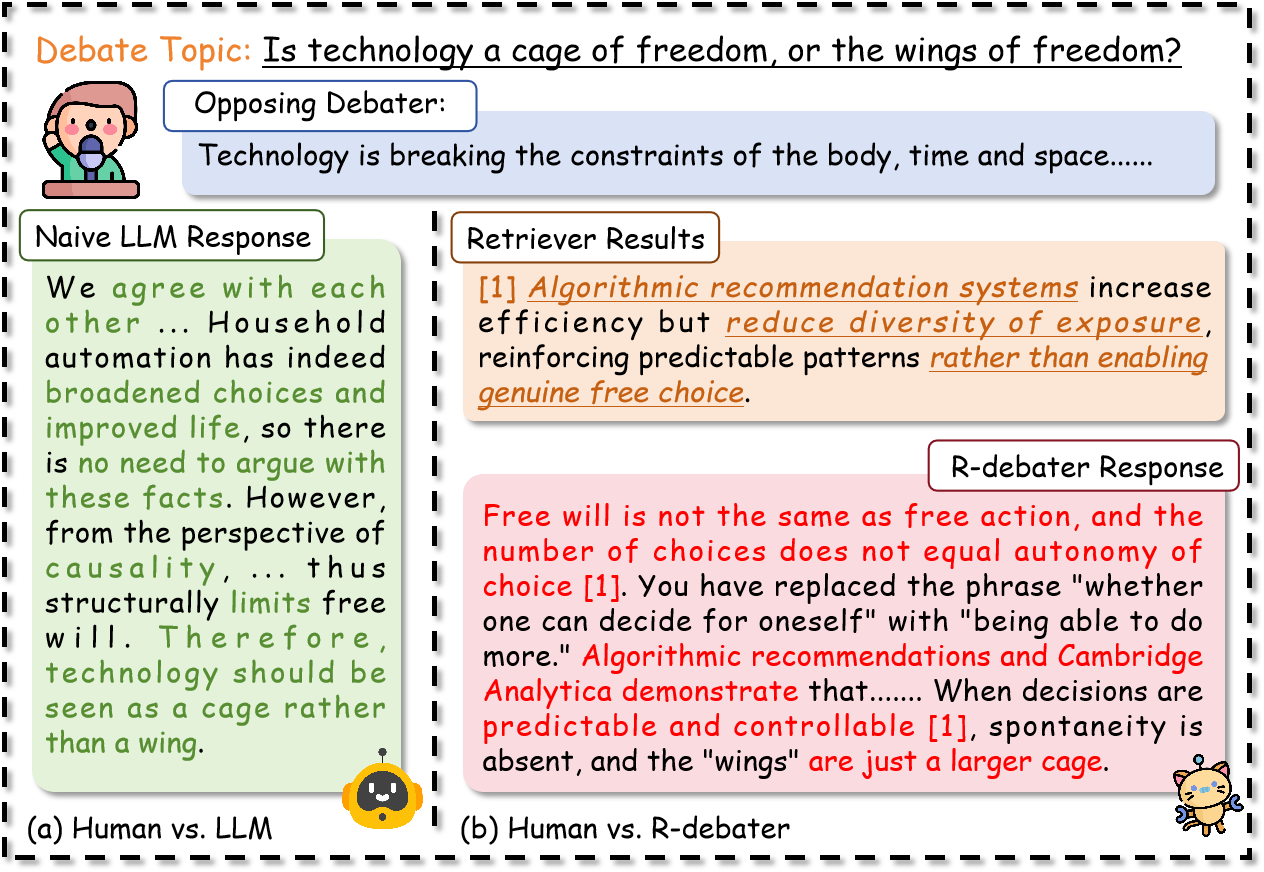

Retrieval-augmented generation (RAG) is a natural mechanism for recalling prior cases and evidence [25,36], and has proved useful in QA and open-domain assistants [20]. However, mainstream RAG stacks optimize for short factual responses and struggle with debate’s structured, adversarial recontextualization, where models must reconcile retrieved material with evolving discourse and opponent claims. Recent analyses also show a persistent “tug-of-war” between a model’s internal priors and retrieved evidence, yielding faithfulness and coverage errors without explicit control [48,52]. Concurrently, agentic AI frameworks provide machinery for planning and tool use [26,49], yet they rarely encode argumentative schemes [46] or dialogic memory needed to preserve stance and reasoning coherence across turns [54].

This paper addresses this gap by conceptualizing argumentative memory in computational terms. Specifically, we treat retrieval as the mechanism for recalling prior discourse and role-based planning as the mechanism for reframing it into new argumentative settings. Doing so raises three central challenges. First, generated statements must incorporate appropriate argumentative schemes and logical structures to be persuasive and coherent. Second, the system must maintain stance fidelity throughout the debate, ensuring that rebuttals are grounded in opponents’ claims while avoiding hallucinations or sycophantic tendencies. Finally, the model must retrieve high-quality, context-relevant debate materials and integrate them strategically into statement generation rather than reproducing retrieved content verbatim.

To address these challenges, we propose R-Debater, a framework that integrates retrieval-augmented reasoning with role-based planning for debate statement generation. Unlike prior systems, R-Debater leverages the entire debate history to generate stance-specific and rhetorically coherent utterances, bridging the gap between rhetorical theory and generative modeling.
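To make the retrieve-then-reframe idea concrete, the following is a minimal sketch and not the R-Debater implementation. It assumes a toy in-memory store, a bag-of-words cosine similarity in place of a learned retriever, and a plain prompt string in place of the role-based agent. All names (`MemoryItem`, `retrieve`, `plan_prompt`) and the example data are hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class MemoryItem:
    """One entry of a hypothetical argumentative memory."""
    stance: str  # "pro" or "con"
    text: str    # a prior argument or piece of case-like evidence


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a real text encoder."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(memory, history, stance, k=2):
    """Recall the k items most similar to the whole debate history.

    Assumption: only items matching the speaker's stance are considered.
    """
    query = bag_of_words(" ".join(utterance for _, utterance in history))
    candidates = [m for m in memory if m.stance == stance]
    candidates.sort(key=lambda m: cosine(query, bag_of_words(m.text)), reverse=True)
    return candidates[:k]


def plan_prompt(role, stance, history, evidence):
    """Reframe recalled material into a role- and stance-specific prompt."""
    lines = [f"Role: {role}", f"Stance: {stance}", "Debate so far:"]
    lines += [f"  [{side}] {utterance}" for side, utterance in history]
    lines.append("Recalled material (adapt, do not copy verbatim):")
    lines += [f"  - {item.text}" for item in evidence]
    lines.append("Respond to the opponent's latest claim while keeping the stance.")
    return "\n".join(lines)


# Hypothetical example data, not drawn from ORCHID.
memory = [
    MemoryItem("pro", "Regulation of AI reduces safety risks for the public"),
    MemoryItem("con", "Regulation of AI slows innovation and harms startups"),
    MemoryItem("pro", "Uniforms reduce bullying in schools"),
]
history = [
    ("con", "Regulation slows innovation in AI."),
    ("pro", "Safety risks justify oversight."),
]
evidence = retrieve(memory, history, "pro", k=1)
print(plan_prompt("rebuttal speaker", "pro", history, evidence))
```

The query is built from every turn so far rather than only the last one, mirroring the use of the entire debate history described above. The resulting prompt would be passed to a language model, a step that is omitted here.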

We conduct extensive experiments on the ORCHID [57] dataset, which comprises over 1,000 formal debates across multiple domains. Evaluation demonstrates that R-Debater achieves substantial gains in both logical fidelity and rhetorical persuasiveness over strong LLM and RAG baselines. In particular, it delivers near-perfect logical coherence and markedly higher factual grounding, while human experts prefer its generated debates in over 75% of pairwise comparisons. These results confirm that R-Debater not only improves measurable debate quality but also aligns closely with expert judgements, validating its reliability and interpretability.

Overall, our main contributions are as follows:

• We present, to the best of our knowledge, the first systematic investigation of debate statement generation with LLMs under realistic multi-turn settings.

• We propose R-Debater, a novel framework that integrates argumentation structures, debate strategies, stance fidelity mechanisms, and retrieval-based case reasoning to address the core challenges of debate generation.

• Extensive empirical validation on debate datasets demonstrates that R-Debater significantly outperforms strong LLM and RAG baselines in terms of factual accuracy, stance consistency, and cross-turn coherence.

Argumentation research has long-standing roots in symbolic reasoning and formal logic [10,11,39,40,41,42,45], with early computational approaches offering transparen
