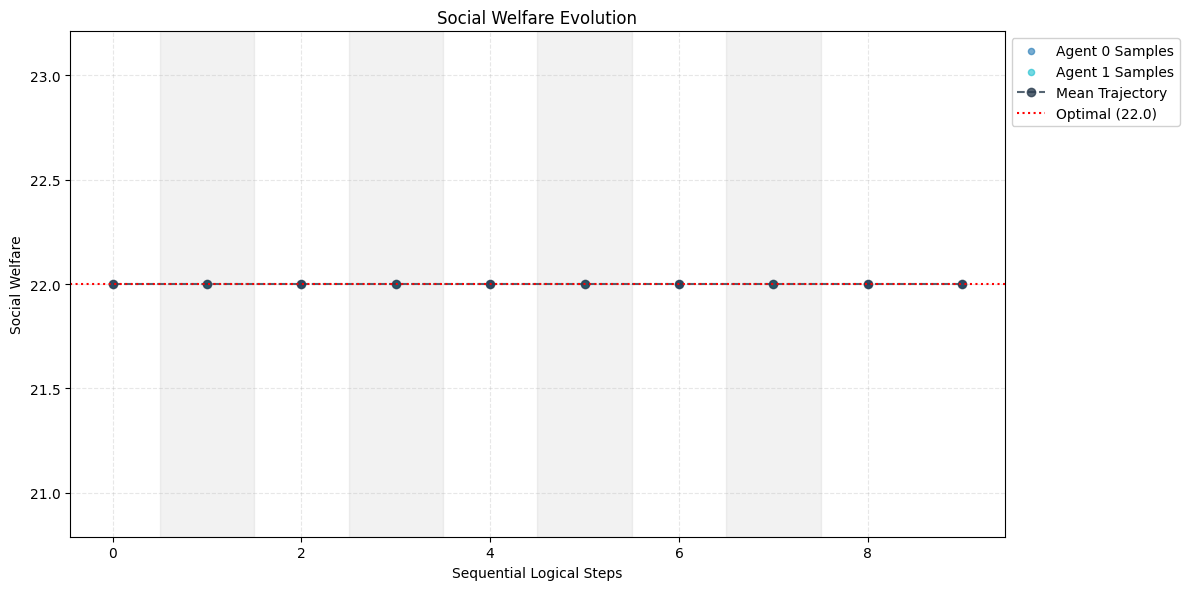

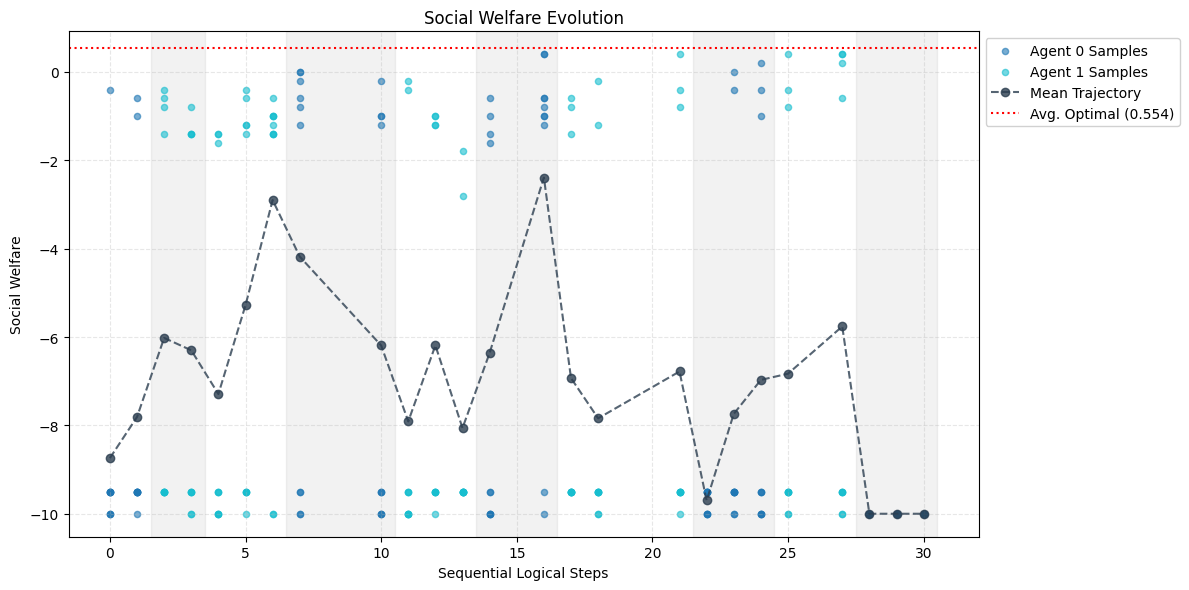

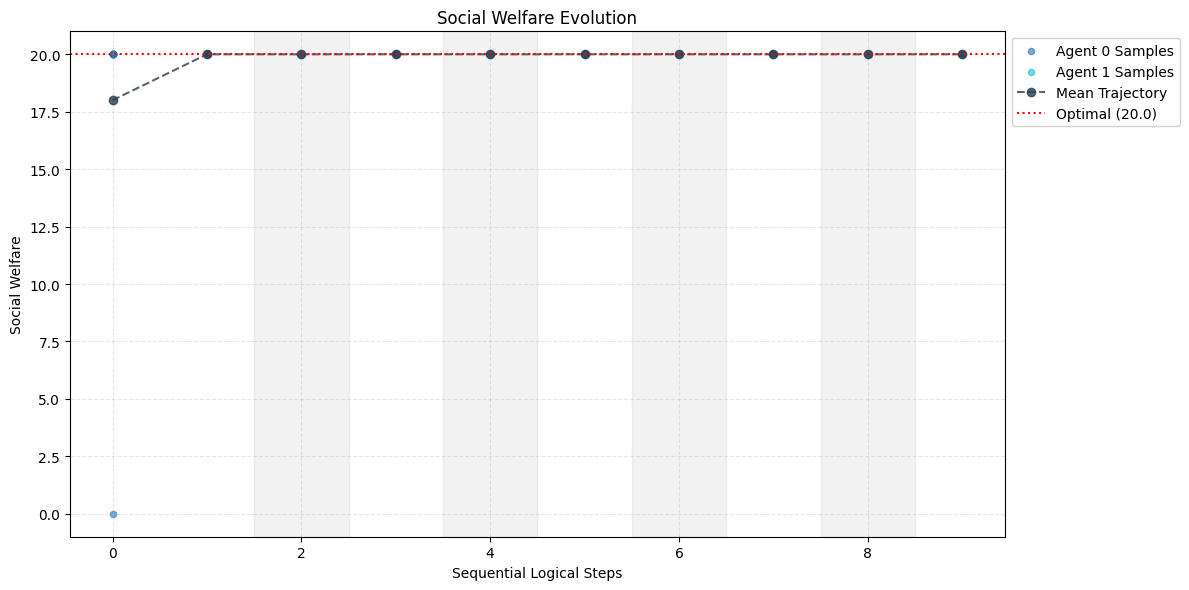

In multi-agent tasks, the central challenge lies in the dynamic adaptation of strategies. However, directly conditioning on opponents' strategies is intractable in the prevalent deep reinforcement learning paradigm due to a fundamental ``representational bottleneck'': neural policies are opaque, high-dimensional parameter vectors that are incomprehensible to other agents. In this work, we propose a paradigm shift that bridges this gap by representing policies as human-interpretable source code and utilizing Large Language Models (LLMs) as approximate interpreters. This programmatic representation allows us to operationalize the game-theoretic concept of \textit{Program Equilibrium}. We reformulate the learning problem by utilizing LLMs to perform optimization directly in the space of programmatic policies. The LLM functions as a point-wise best-response operator that iteratively synthesizes and refines the ego agent's policy code to respond to the opponent's strategy. We formalize this process as \textit{Programmatic Iterated Best Response (PIBR)}, an algorithm where the policy code is optimized by textual gradients, using structured feedback derived from game utility and runtime unit tests. We demonstrate that this approach effectively solves several standard coordination matrix games and a cooperative Level-Based Foraging environment.

The study of multi-agent tasks, typically formalized as Markov games (or stochastic games) (Shapley, 1953), presents a complexity that fundamentally transcends single-agent decision-making. In these settings, the environment is non-stationary from the perspective of any individual agent, as the state transitions and expected rewards are co-determined by the evolving policies of interacting agents. Consequently, there is no single optimal policy in isolation; rather, an agent must continuously compute a best response to the varying strategies of its opponents.

Canonical approaches to this problem, particularly in Multi-Agent Reinforcement Learning (MARL), typically rely on opponent modeling (He et al., 2016). Agents attempt to infer the current policies of their counterparts from the history of interactions. However, inferring complex, co-adapting policies from limited, high-dimensional, and non-stationary historical data remains an exceptionally difficult problem.

A theoretically more direct alternative is to explicitly condition an agent’s policy on the actual policy of the opponent. In the domain of game theory, this ideal is captured by the concept of Program Equilibrium (Tennenholtz, 2004), where agents delegate decision-making to programs that can read and condition on each other’s source code. This framework theoretically enables rich cooperative outcomes (e.g., via “mutual cooperation” proofs in the Prisoner’s Dilemma) that are inaccessible to standard Nash equilibria.

However, Program Equilibrium has remained largely theoretical and disconnected from modern learning approaches. In the prevalent Deep Reinforcement Learning (DRL) paradigm, implementing such policy-conditioning is practically intractable due to a “representational bottleneck”. Policies are represented as deep neural networks defined by millions of opaque parameters. Inputting one neural network’s weights into another is computationally prohibitive and semantically ill-defined, as 1. Motivation from MARL Challenges: While Sistla & Kleiman-Weiner (2025a) focuses on evaluating LLMs within a game-theoretic context, our approach stems fundamentally from the perspective of MARL. We identify programmatic policies specifically as a solution to the “representational bottleneck” and non-stationarity in MARL. We frame this not merely as an evaluation of LLMs, but as a necessary paradigm shift to solve the recursive reasoning challenges inherent in learning co-adapting policies.

- Optimization via Textual Gradients: Sistla & Kleiman-Weiner (2025a) primarily relies on the inherent reasoning capabilities of LLMs via prompting. In contrast, we propose Programmatic Iterated Best Response (PIBR) as a formal optimization algorithm. Our method explicitly treats the LLM as a definable operator optimized via textual gradients (Yuksekgonul et al., 2024). We incorporate a structured feedback loop that combines game utility with runtime unit tests, ensuring that the generated policies are not only strategically sound but also syntactically robust and executable.

We extend the validation of programmatic policies beyond standard matrix games (which are the primary focus of concurrent works) to the Level-Based Foraging environment. This is a complex grid-world task requiring spatial coordination and sequential decision-making, demonstrating the capability of our method to handle higherdimensional state spaces and more intricate coordination dynamics.

Acknowledging the precedence of Sistla & Kleiman-Weiner (2025a), we refrain from submitting this specific version for formal conference publication to adhere to novelty standards. Instead, we release this manuscript as a technical report to establish the timeline of our independent contribution. We plan to incorporate these findings into future submissions that feature significant technical improvements or novel extensions beyond the scope of the current discussion.

This section provides the necessary background for our method. We begin by establishing the formalisms for multi-agent learning, including game settings and solution concepts. Then we discuss the two fundamental challenges that motivate our work: non-stationarity and the complexities of opponent modeling. Finally, we introduce the concept of program equilibrium and provability logic, which provide the theoretical basis for agents that can condition their policies on the source code of their opponents. A detailed discussion of related work is deferred to Appendix A.

In this work, we focus on the multi-agent task which is formulated as a Markov Game (Littman, 1994), also known as a stochastic game (Shapley, 1953). A Markov Game for N agents is defined by a tuple G = ⟨N, S, {A i } N i=1 , T , {r i } N i=1 , γ⟩. S is the set of global states. A i is the action space for agent i, and the joint action space is A = × N i=1 A i . The function T : S × A → ∆(S) is the state transition probability function. Each agent i has a reward function r i : S × A →

This content is AI-processed based on open access ArXiv data.