With recent advances in large language model (LLM) agents, memory systems have become central to storing and retrieving long-term, personalized memories. They typically fall into two groups: 1) Parametric methods (Wang et al., 2024; Fang et al., 2025b) encode memories implicitly into model parameters. They can handle specific knowledge more effectively but struggle with scalability and continual updates, as modifying parameters to incorporate new memories often risks catastrophic forgetting and requires expensive fine-tuning. 2) Non-parametric methods (Xu et al., 2025; LangChain Team, 2025; Chhikara et al., 2025; Rasmussen et al., 2025), in contrast, store explicit external information, enabling flexible retrieval and continual augmentation without altering model parameters. However, they typically rely on heuristic retrieval strategies, which can lead to noisy recall, heavy retrieval, and increasing latency as the memory store grows.

Emails: {Xingbo.Du, Longkang.Li, Duzhen.Zhang, Le.Song}@mbzuai.ac.ae. ¹Mohamed bin Zayed University of Artificial Intelligence. Correspondence to: Le Song.

Preprint. December 24, 2025.

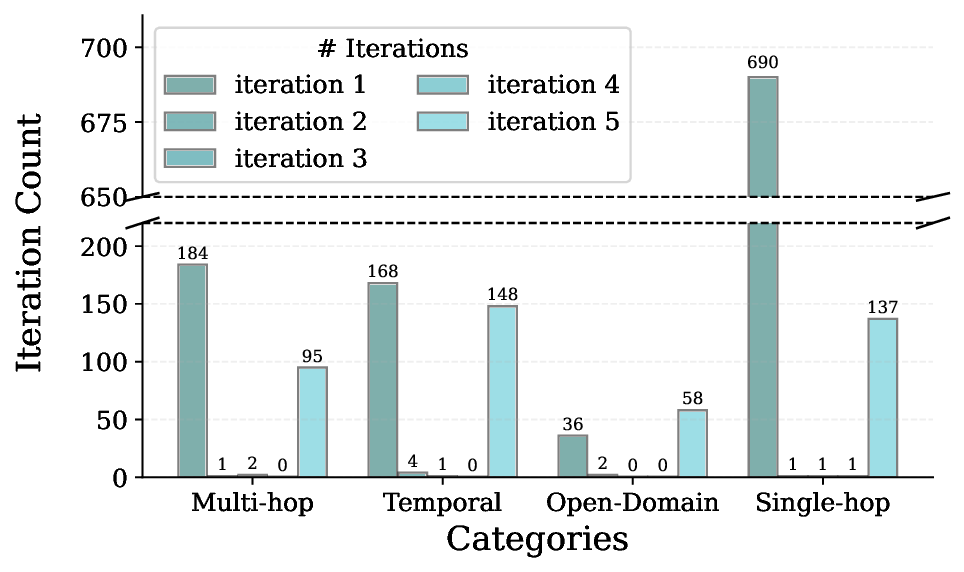

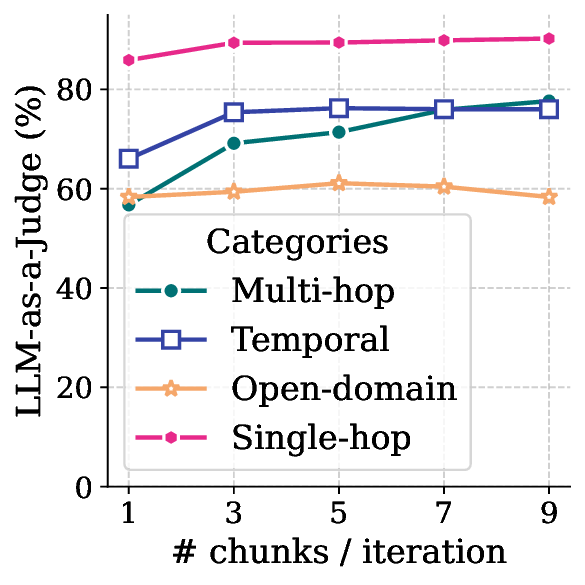

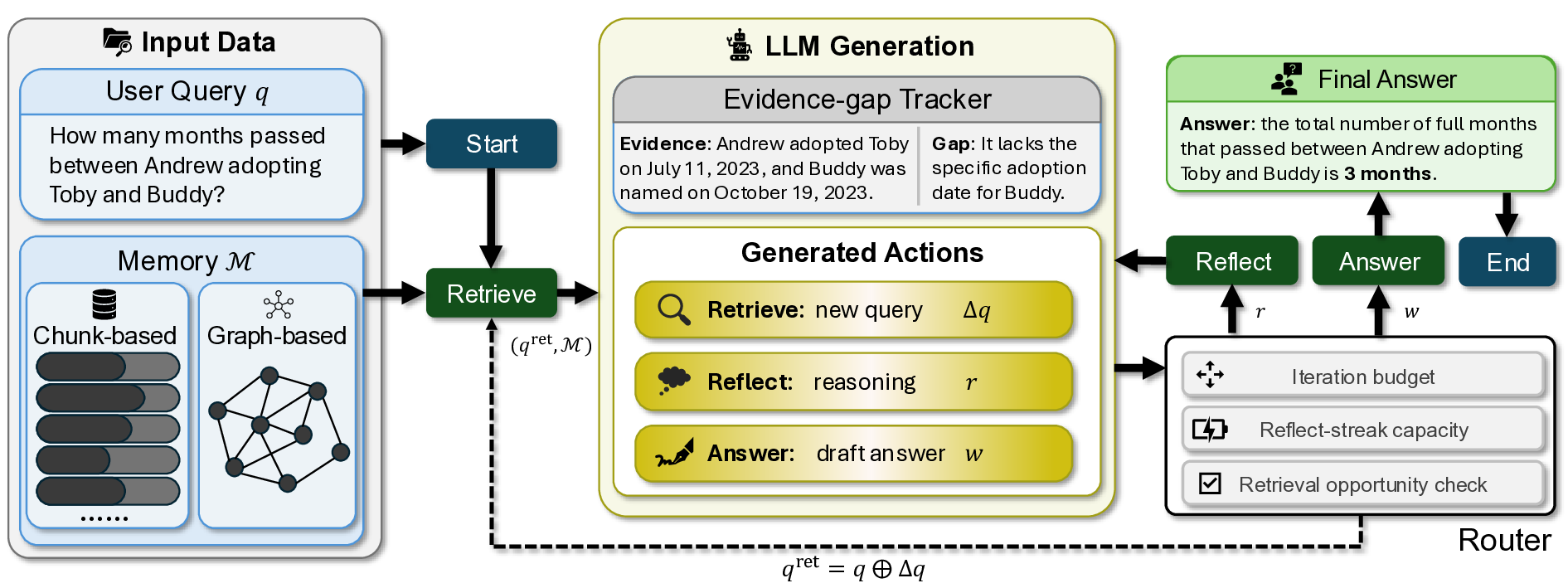

Orthogonal to these works, this paper constructs an agentic memory system, MemR³ (Memory Retrieval system with Reflective Reasoning), to improve retrieval quality and efficiency. Specifically, the system is built with LangGraph (Inc., 2025), where a router node chooses among three nodes: 1) a retrieve node, which builds on existing memory systems and can retrieve multiple times with updated queries; 2) a reflect node, which reasons iteratively over the evidence acquired so far and the gaps between the question and that evidence; and 3) an answer node, which produces the final response from the acquired information. Across all nodes, the system maintains a global evidence-gap tracker that records the acquired (evidence) and missing (gap) information.
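The retrieve / reflect / answer loop described above can be illustrated with a small plain-Python sketch. This is not the authors' LangGraph implementation: the node functions, the keyword-matching "reflection", the alternating router policy, the `max_steps` budget, and the toy retriever are all hypothetical stand-ins for the LLM-driven components. The sketch only shows how a shared evidence-gap tracker lets each retrieval be driven by what is still missing.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set

# Hypothetical retriever interface: any memory backend (e.g., chunk-based RAG
# or a graph store) that maps a query string to a list of memory snippets.
Retriever = Callable[[str], List[str]]


@dataclass
class EvidenceGapTracker:
    """Global state shared by all nodes: acquired evidence and open gaps."""
    evidence: List[str] = field(default_factory=list)
    gaps: Set[str] = field(default_factory=set)


def retrieve_node(state: EvidenceGapTracker, retriever: Retriever) -> None:
    # Build the query from the open gaps rather than reusing the raw question.
    query = " ".join(sorted(state.gaps))
    for snippet in retriever(query):
        if snippet not in state.evidence:
            state.evidence.append(snippet)


def reflect_node(state: EvidenceGapTracker) -> None:
    # Stand-in for LLM reflection: a gap closes once any evidence mentions it.
    state.gaps = {g for g in state.gaps
                  if not any(g in e.lower() for e in state.evidence)}


def answer_node(state: EvidenceGapTracker) -> str:
    # Stand-in for LLM answering: concatenate the supporting evidence.
    return " | ".join(state.evidence)


def run_controller(required: Set[str], retriever: Retriever,
                   max_steps: int = 6) -> Dict[str, object]:
    state = EvidenceGapTracker(gaps=set(required))
    trace: List[str] = []
    last: Optional[str] = None
    for _ in range(max_steps):
        # Router: stop early once no gaps remain; otherwise alternate
        # between retrieving and reflecting until the budget runs out.
        if not state.gaps:
            break
        action = "reflect" if last == "retrieve" else "retrieve"
        if action == "retrieve":
            retrieve_node(state, retriever)
        else:
            reflect_node(state)
        trace.append(action)
        last = action
    trace.append("answer")
    return {"answer": answer_node(state), "trace": trace,
            "missing": sorted(state.gaps)}


# Toy memory store and a top-1 keyword retriever (both hypothetical).
memories = [
    "Alice adopted a dog named Buddy in March.",
    "Alice moved to Boston in June.",
    "Alice likes hiking.",
]


def keyword_retriever(query: str) -> List[str]:
    terms = query.lower().split()
    hits = [m for m in memories if any(t in m.lower() for t in terms)]
    return hits[:1]


result = run_controller({"buddy", "boston"}, keyword_retriever)
print(result["trace"])  # ['retrieve', 'reflect', 'retrieve', 'reflect', 'answer']
```

In this toy run, the first lookup only surfaces the Buddy snippet, so reflection leaves "boston" as an open gap and the second retrieval uses that gap alone as its query. A real deployment would replace the keyword stand-ins with LLM calls and an actual memory backend.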

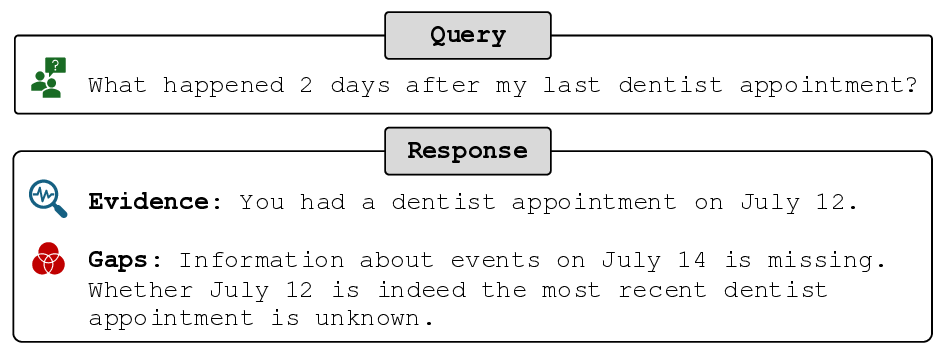

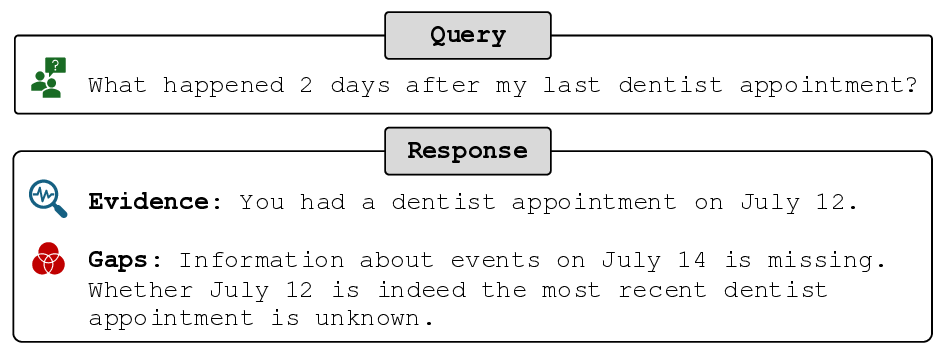

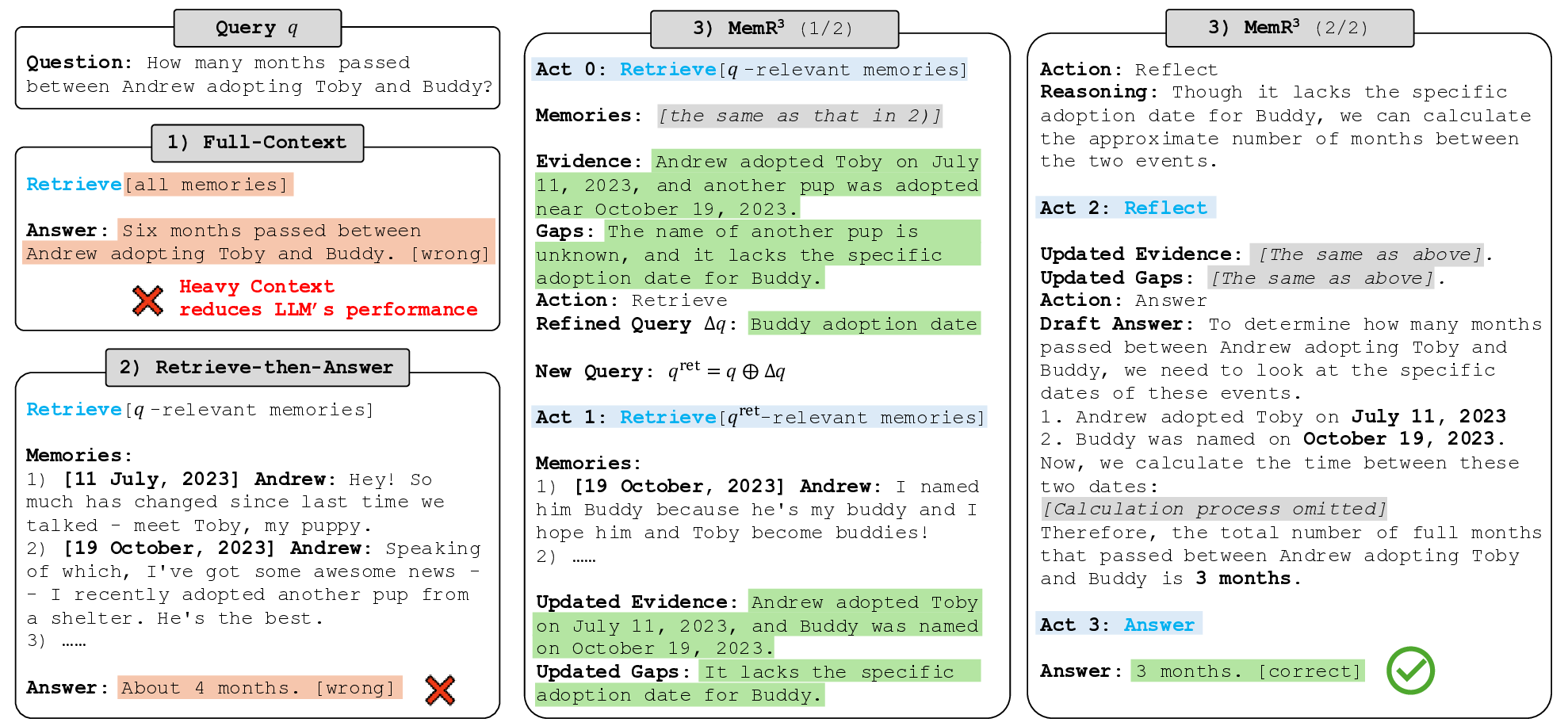

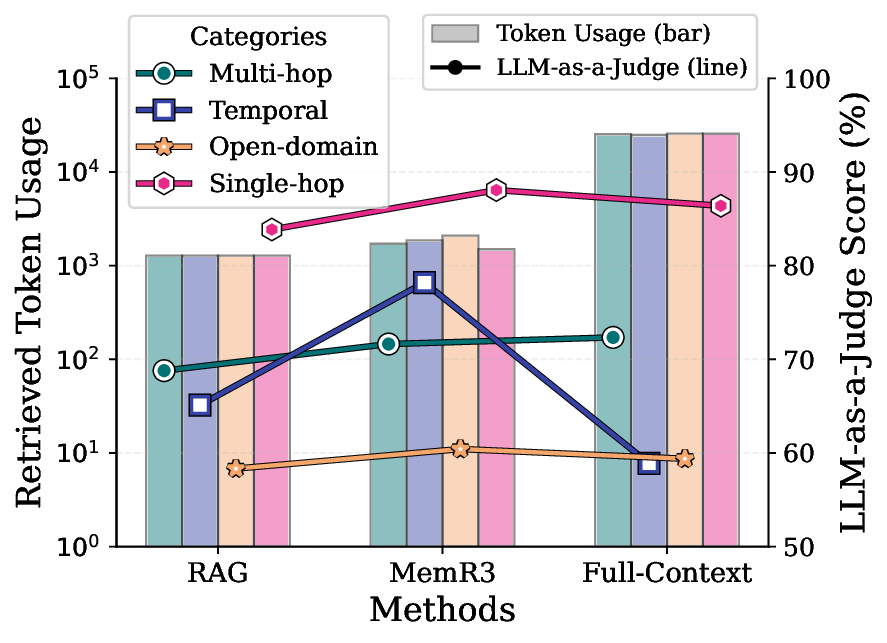

The system has three core advantages: 1) Accuracy and efficiency. By tracking evidence and gaps and dynamically routing between retrieval and reflection, MemR³ reduces unnecessary lookups and noise, yielding faster and more accurate answers. 2) Plug-and-play usage. As a controller independent of the underlying retriever or memory store, MemR³ can be integrated into existing memory systems, improving retrieval quality without architectural changes. 3) Transparency and explainability. Since MemR³ maintains an explicit evidence-gap state over the course of an interaction, it can expose which memories support a given answer and which pieces of information were still missing at each step, providing a human-readable trace of the agent's decision process. Fig. 1 gives a high-level comparison of MemR³, the Full-Context setting (which uses all available memories), and the commonly adopted retrieve-then-answer paradigm. The contributions of this work are threefold:

(1) A specialized closed-loop retrieval controller for long-term conversational memory. We propose MemR³, an autonomous controller that wraps existing memory stores and turns standard retrieve-then-answer pipelines into a closed-loop process with explicit actions (retrieve / reflect / answer) and simple early-stopping rules. This instantiates the general LLM-as-controller idea specifically for non-parametric, long-horizon conversational memory.

Fig. 1 (caption excerpt): Retrieve-then-Answer retrieves relevant snippets but still miscalculates. In contrast, MemR³ iteratively retrieves and reflects using an evidence-gap tracker (Acts 0-3), refines the query about Buddy's adoption date, and produces the correct answer (3 months).

(2) Evidence-gap state abstraction for explainable retrieval. MemR³ maintains a global evidence-gap state (E, G) that summarizes what has been reliably established in memory and what information remains missing. This state drives query refinement and stopping, and can be surfaced as a human-readable trace of the agent's progress. We further formalize this abstraction via an abstract requirement space and prove basic monotonicity and completeness properties, which we later use to interpret empirical behaviors.

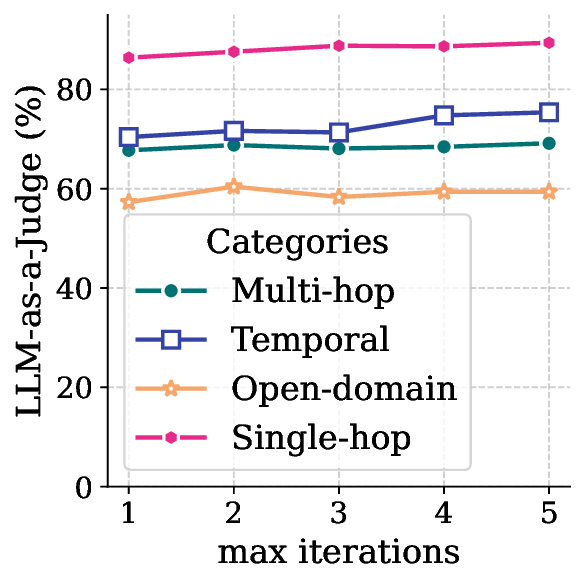

(3) Empirical study across memory systems. We integrate MemR³ with both a chunk-based RAG backend and a graph-based backend (Zep) on the LoCoMo benchmark and compare it with recent memory systems and agentic retrievers. Across backends and question types, MemR³ consistently improves LLM-as-a-Judge scores over its underlying retrievers.
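To give some intuition for the monotonicity and completeness properties mentioned in contribution (2), here is a toy set-based model of the (E, G) state. It rests entirely on our own assumptions and may differ from the paper's abstract requirement space: the question is assumed to induce a finite requirement set R, E holds the requirements established so far, G is defined as R minus E, and the hypothetical `update` rule only ever adds evidence.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class EvidenceGapState:
    """Hypothetical set-based model of the (E, G) state over requirements R."""
    requirements: FrozenSet[str]
    evidence: FrozenSet[str] = frozenset()

    @property
    def gaps(self) -> FrozenSet[str]:
        # G is the complement of E within R, so E and G partition R.
        return self.requirements - self.evidence

    def update(self, established: Iterable[str]) -> "EvidenceGapState":
        # Only requirements in R can be established, and evidence is never
        # dropped, so E can only grow and G can only shrink.
        new = self.evidence | (frozenset(established) & self.requirements)
        return EvidenceGapState(self.requirements, new)

    def is_complete(self) -> bool:
        # Completeness: the gap set is empty exactly when E covers R.
        return not self.gaps


R = frozenset({"adoption_date", "current_date"})
s0 = EvidenceGapState(R)
s1 = s0.update({"adoption_date", "irrelevant"})  # off-requirement items ignored
s2 = s1.update({"current_date"})
print(sorted(s1.gaps), s2.is_complete())  # ['current_date'] True
```

Under this model, monotonicity holds by construction because `update` is a set union, and completeness reduces to checking that the gap set is empty, which is also a natural early-stopping signal for the controller.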

Prior work on non-parametric agent memory systems covers a wide range of topics, including memory management and utilization (Du et al., 2025), and stores either structured (Rasmussen et al., 2025) or unstructured (Zhong et al., 2024) external knowledge. Specifically, production-oriented agents such as MemGPT (Packer et al., 2023) introduce an OS-style hierarchical memory system that allows the model to page information between its context and external storage, and SCM (Wang et al., 2023) provid