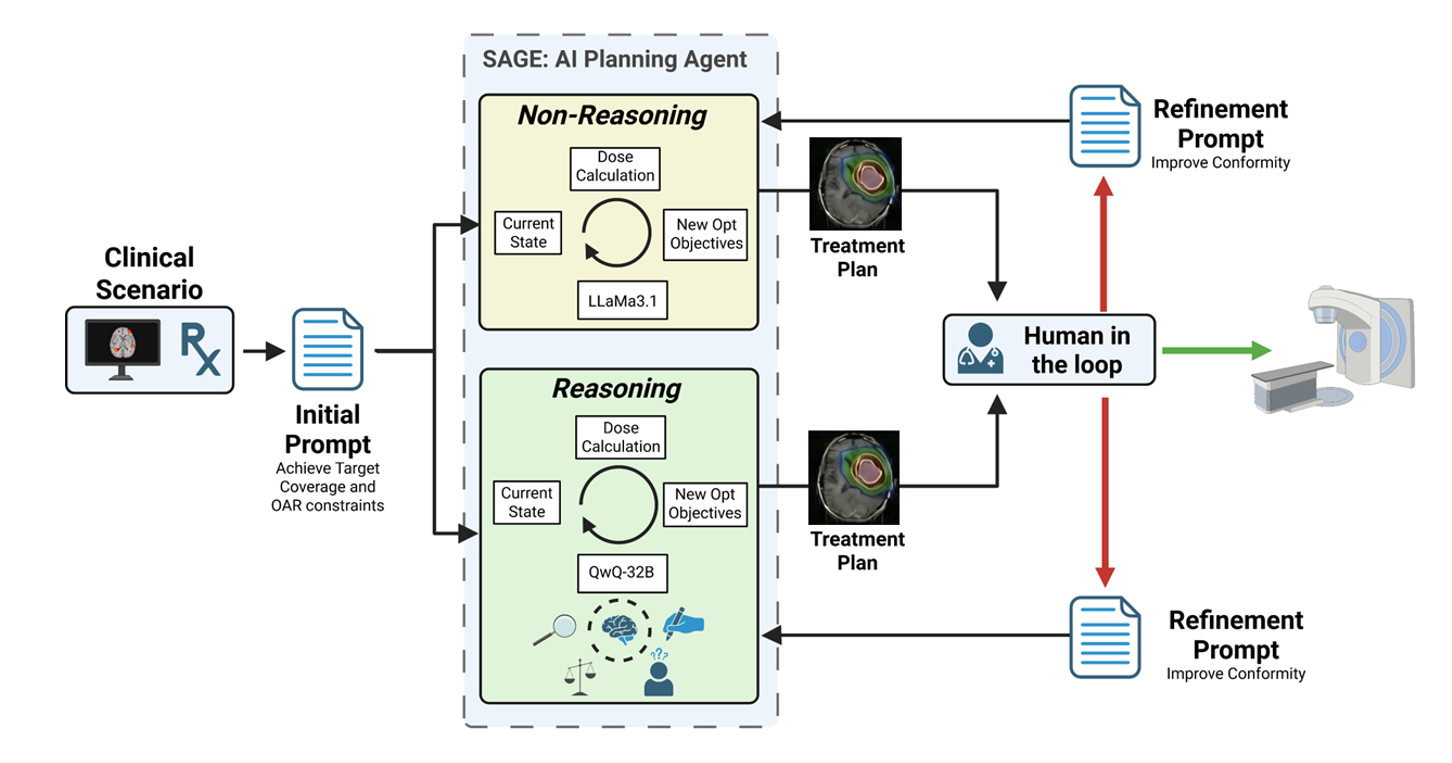

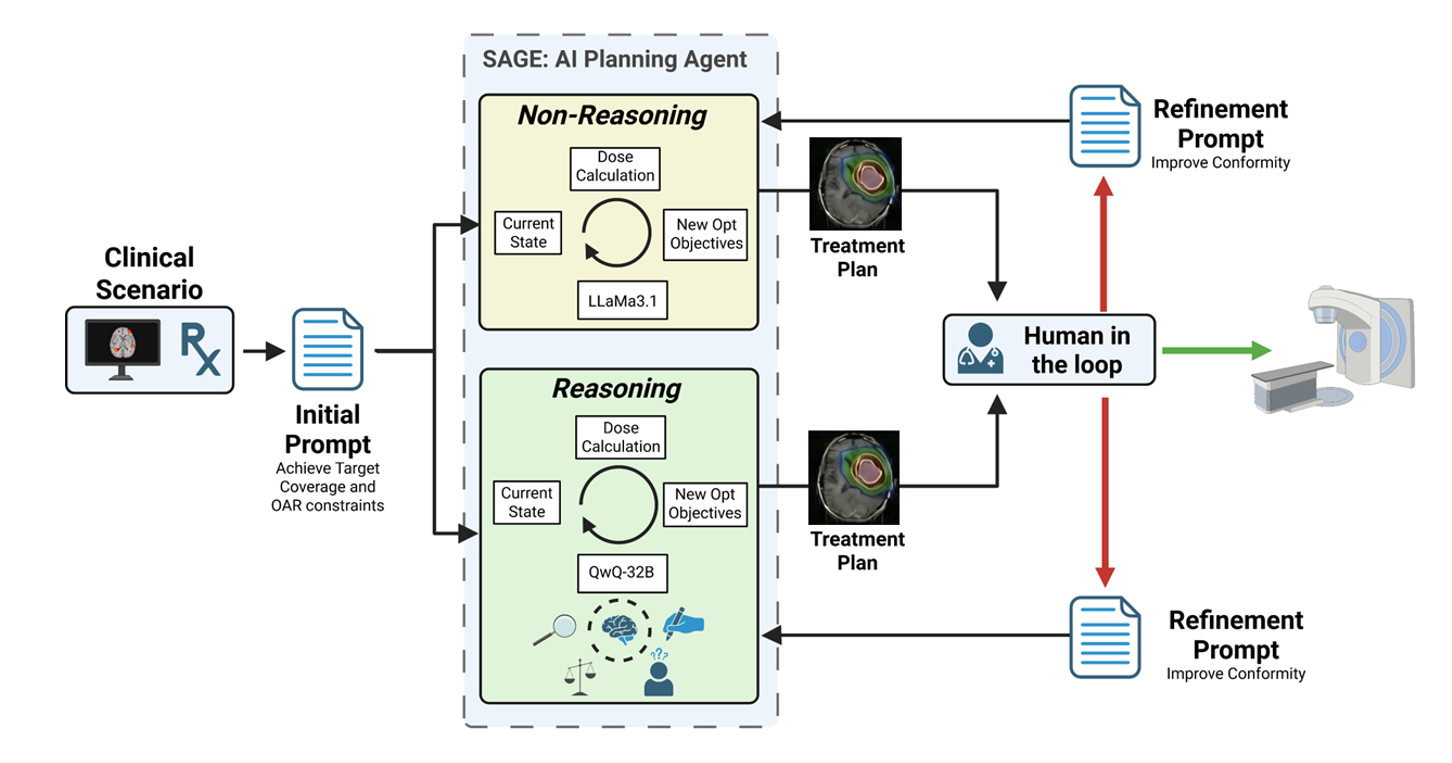

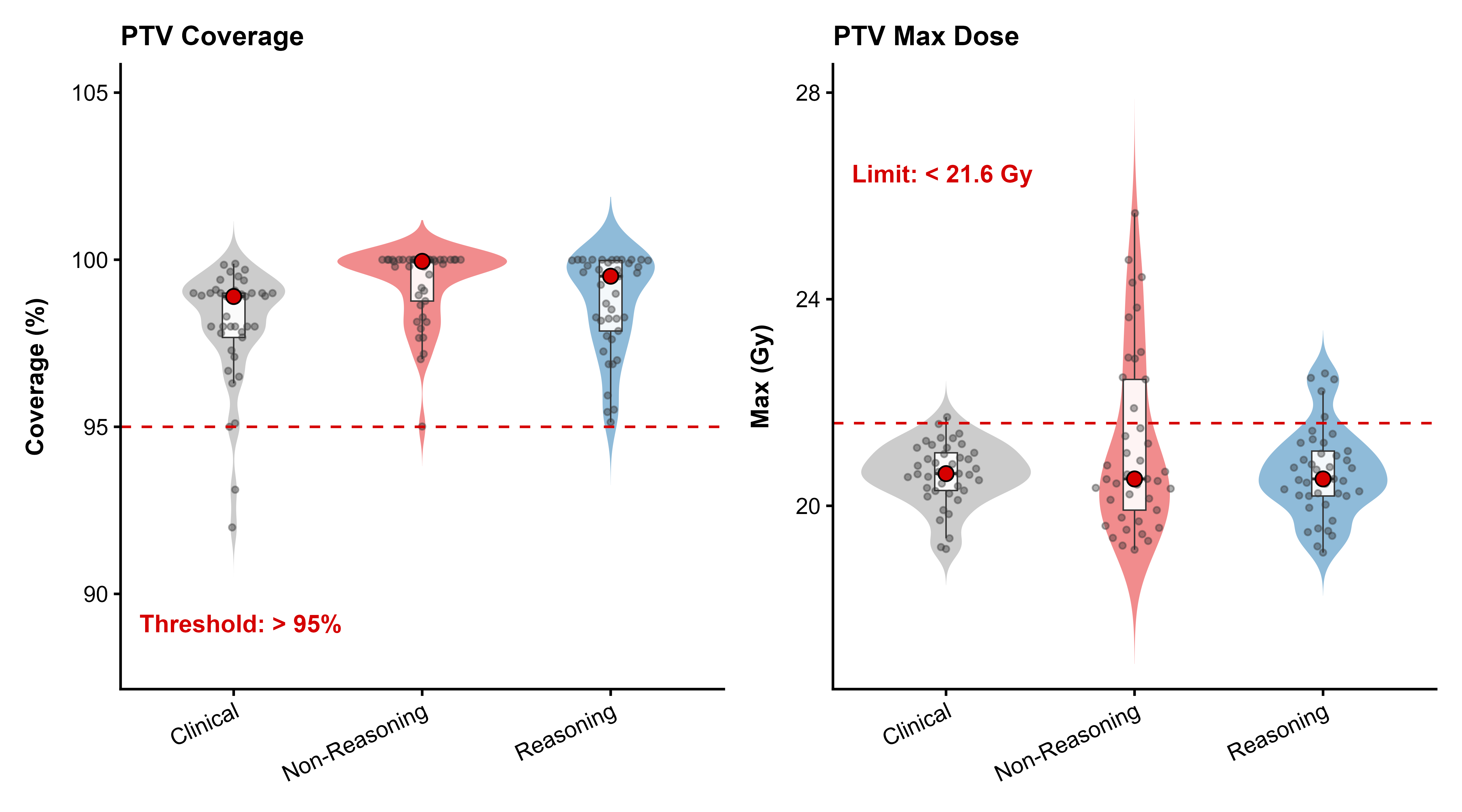

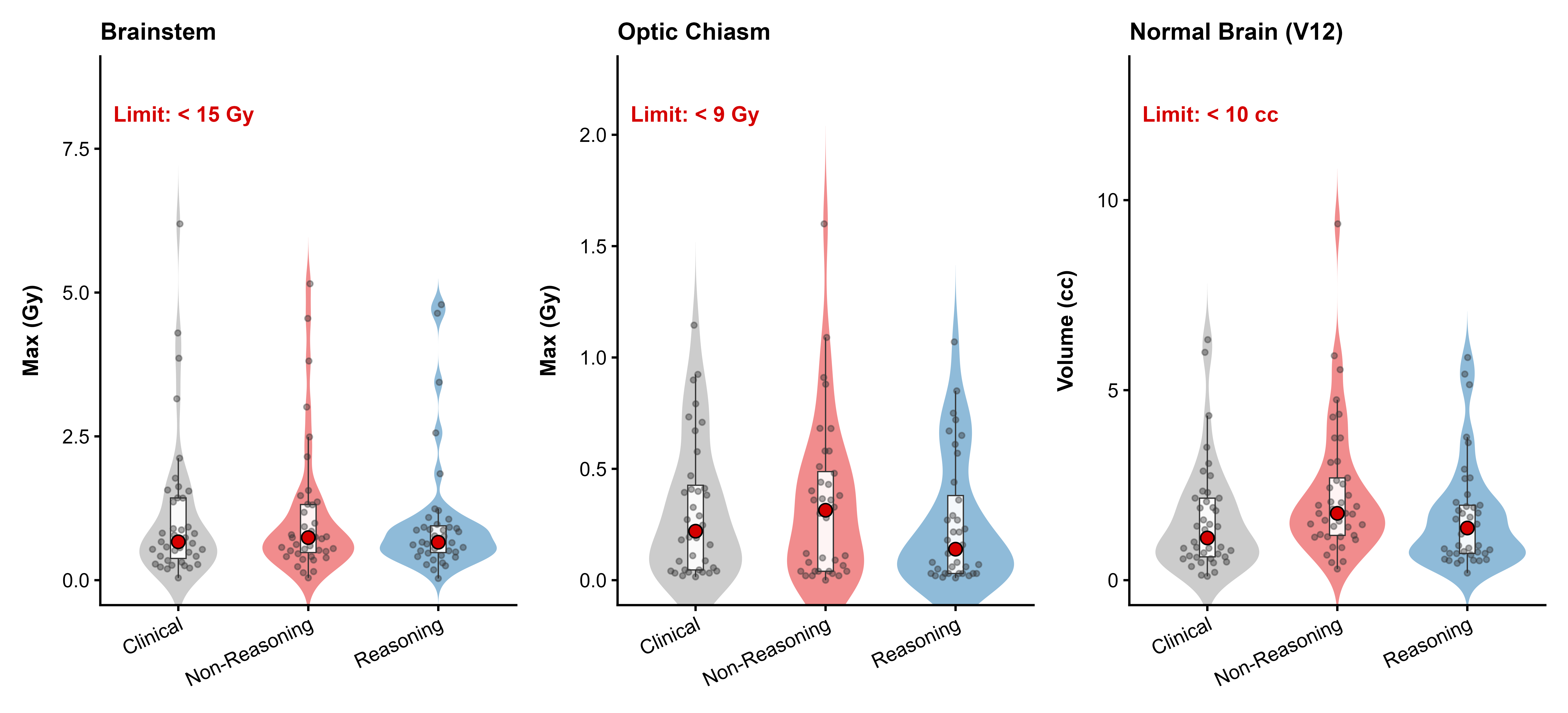

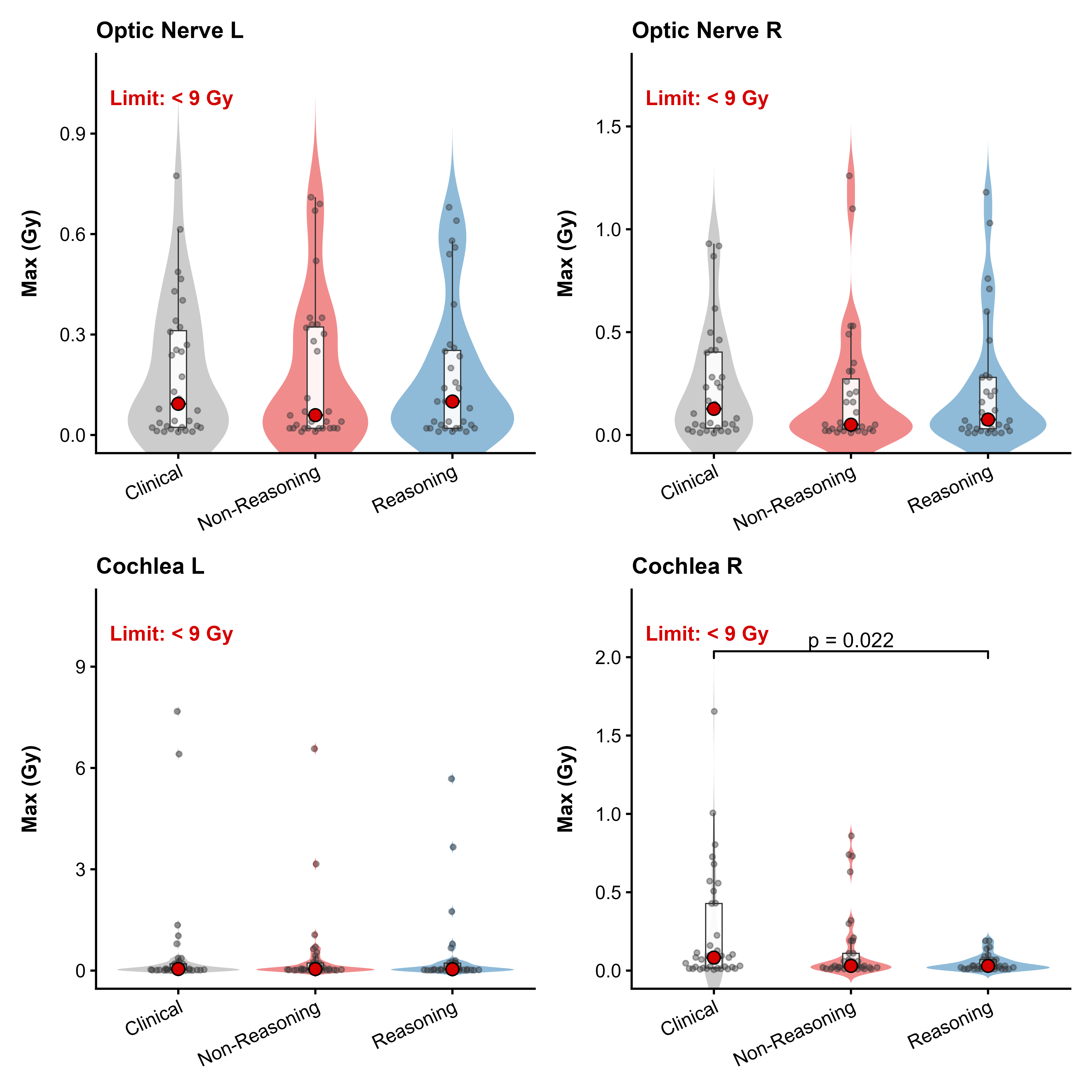

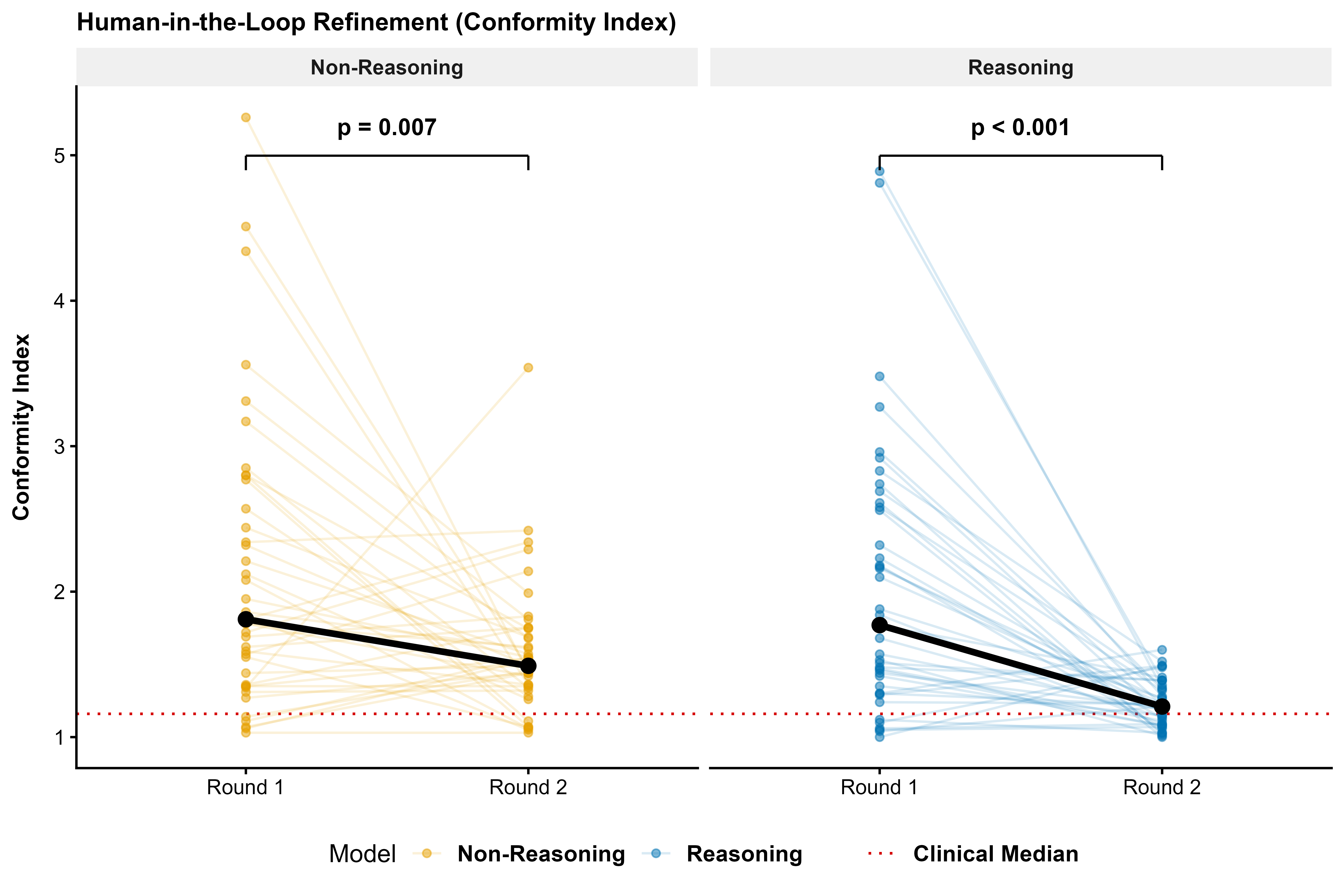

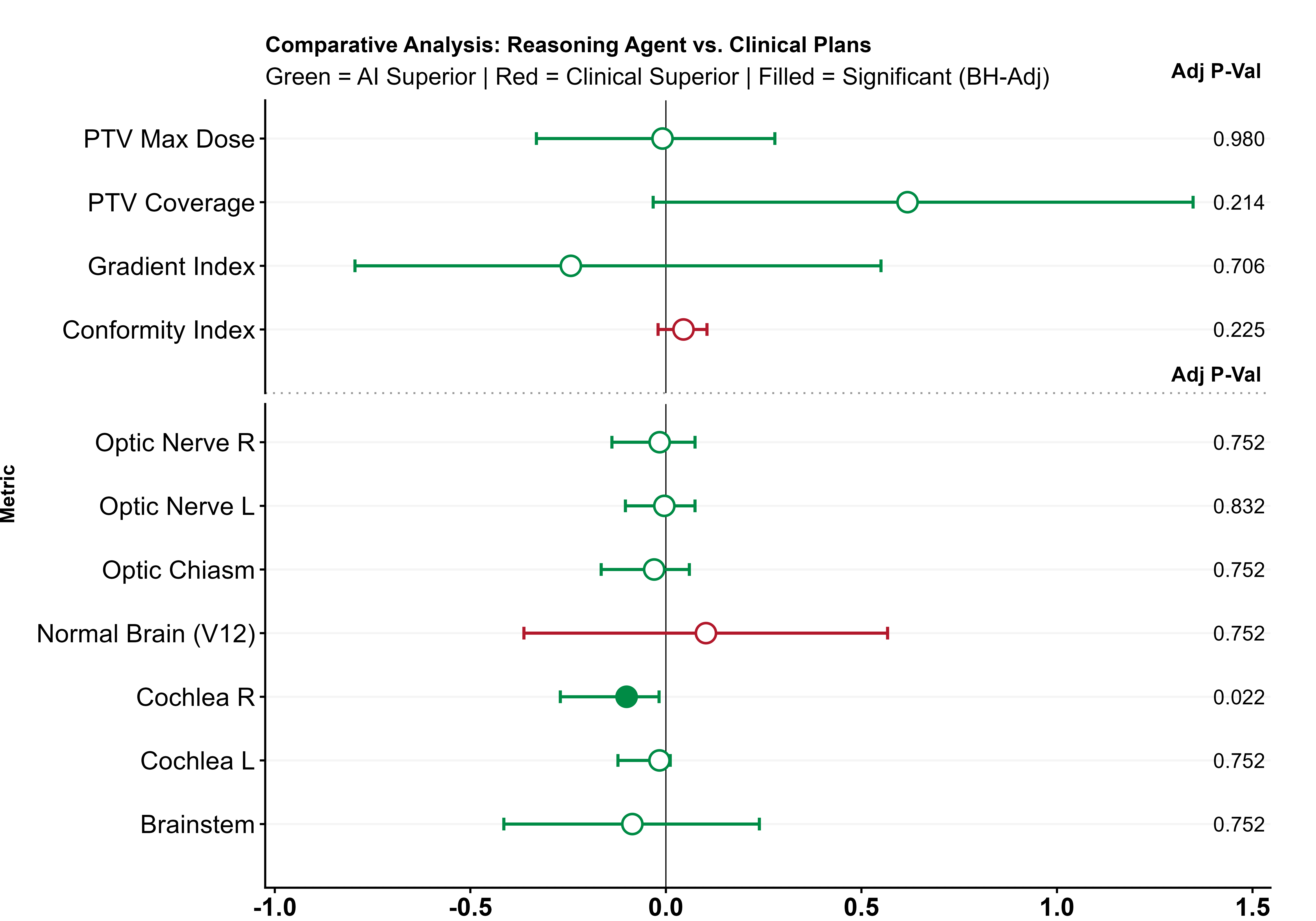

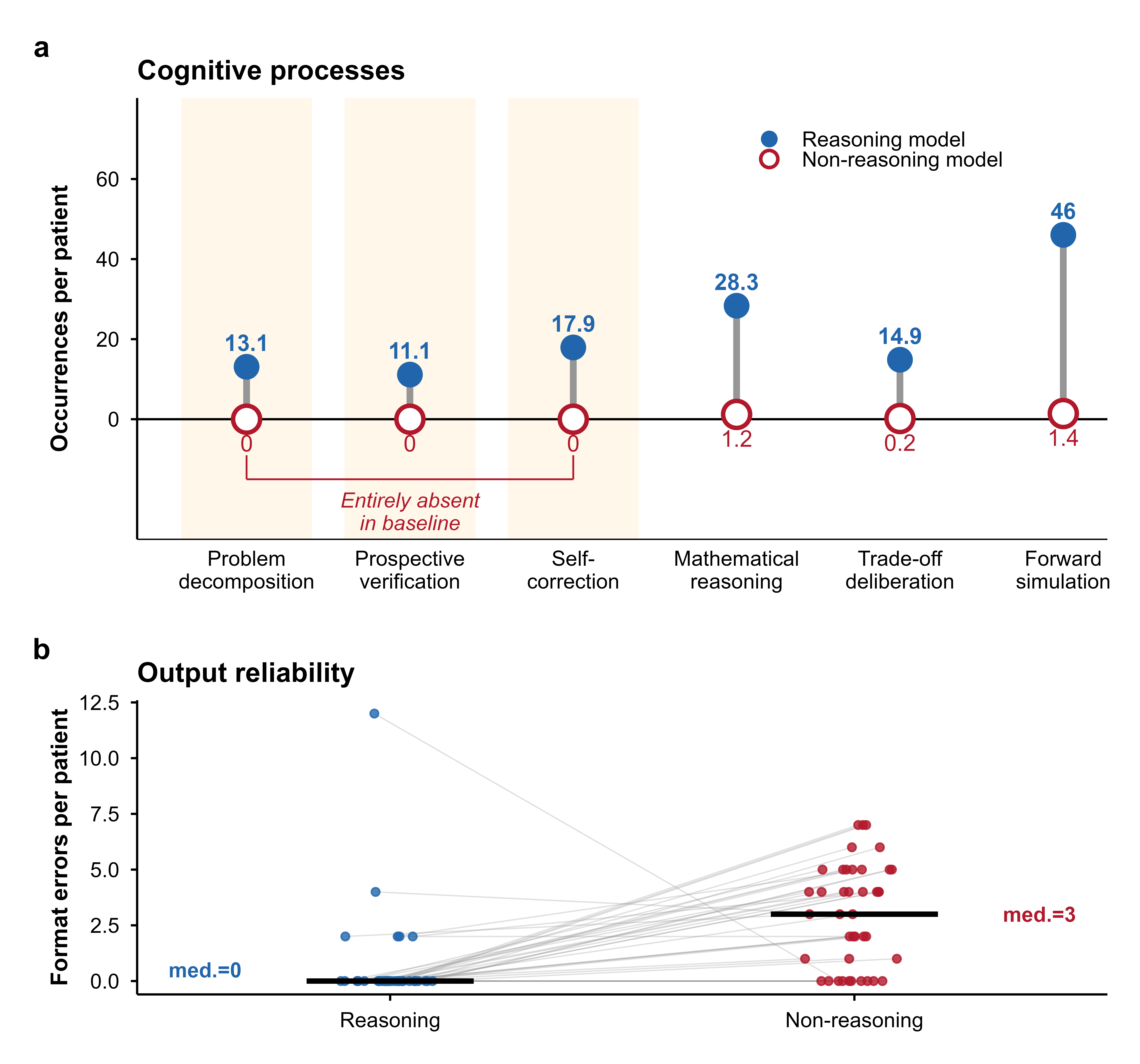

Stereotactic radiosurgery (SRS) demands precise dose shaping around critical structures, yet black-box AI systems have seen limited clinical adoption because of opacity concerns. We tested whether chain-of-thought reasoning improves agentic planning in a retrospective cohort of 41 patients with brain metastases treated with 18 Gy single-fraction SRS. We developed SAGE (Secure Agent for Generative Dose Expertise), an LLM-based planning agent for automated SRS treatment planning. Two variants generated plans for each case: one using a non-reasoning model and one using a reasoning model. The reasoning variant achieved plan dosimetry comparable to that of human planners on the primary endpoints (PTV coverage, maximum dose, conformity index, gradient index; all p > 0.21) while reducing cochlear dose below human baselines (p = 0.022). When prompted to improve conformity, the reasoning model demonstrated systematic planning behaviors, including prospective constraint verification (457 instances) and trade-off deliberation (609 instances), whereas the non-reasoning model exhibited these behaviors rarely or not at all (0 and 7 instances, respectively). Content analysis revealed that constraint verification and causal explanation were concentrated in the reasoning agent. The optimization traces serve as auditable logs, offering a path toward transparent automated planning.
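To make the primary endpoints concrete, the following minimal sketch shows how coverage, maximum dose, conformity index, and gradient index could be computed from a voxelized dose grid. It assumes the Paddick conformity index and a gradient index defined as the ratio of the half-prescription isodose volume to the prescription isodose volume; the text does not state which definitions were used. The function name `plan_metrics`, the flat-list inputs, and the toy values are hypothetical and are not taken from SAGE.

```python
from typing import Dict, Sequence


def plan_metrics(
    dose_gy: Sequence[float],
    in_target: Sequence[bool],
    prescription_gy: float = 18.0,
) -> Dict[str, float]:
    """Compute illustrative SRS plan metrics from per-voxel data.

    Assumes uniform voxel size, so voxel counts stand in for volumes
    (the voxel volume cancels in every ratio below).
    """
    if len(dose_gy) != len(in_target):
        raise ValueError("dose_gy and in_target must have the same length")

    # Target volume (TV), in voxels.
    tv = sum(1 for t in in_target if t)
    # Prescription isodose volume (PIV): all voxels at or above prescription.
    piv = sum(1 for d in dose_gy if d >= prescription_gy)
    # Volume receiving at least half the prescription dose.
    piv_half = sum(1 for d in dose_gy if d >= 0.5 * prescription_gy)
    # Target volume covered by the prescription isodose (TV_PIV).
    tv_piv = sum(
        1 for d, t in zip(dose_gy, in_target) if t and d >= prescription_gy
    )

    if tv == 0 or piv == 0:
        raise ValueError("target and prescription isodose volume must be non-empty")

    return {
        "coverage": tv_piv / tv,                 # fraction of target at prescription
        "max_dose_gy": max(dose_gy),             # hottest voxel anywhere in the grid
        "paddick_ci": tv_piv ** 2 / (tv * piv),  # assumed definition; 1.0 is ideal
        "gradient_index": piv_half / piv,        # assumed definition; lower is steeper
    }


if __name__ == "__main__":
    # Toy grid: five target voxels followed by five normal-tissue voxels.
    toy_dose = [20, 19, 18, 18, 17, 12, 10, 9, 5, 2]
    toy_mask = [True] * 5 + [False] * 5
    for name, value in plan_metrics(toy_dose, toy_mask).items():
        print(f"{name}: {value:.3f}")
```

Counting voxels rather than multiplying by a voxel volume keeps the sketch short; a clinical implementation would work from the TPS dose grid and structure masks and would handle non-uniform voxels explicitly.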

Treatment planning in radiation oncology has grown increasingly complex. A treatment plan consists of a set of instructions generated within a treatment planning system (TPS) that directs the linear accelerator during dose delivery. This task, performed by dosimetrists and medical physicists, requires specialized expertise and substantial time investment, and plan quality varies with individual planner skill. The degree of complexity varies considerably by tumor site and treatment technique. For conventionally fractionated treatments of anatomically stable sites such as prostate cancer, target volumes are relatively homogeneous and organs at risk occupy predictable positions, facilitating more standardized planning approaches [1,2].

Stereotactic radiosurgery (SRS) for brain metastases lies at the opposite end of this spectrum. SRS delivers a large radiation dose to intracranial tumors in a single treatment fraction [3]. The targets are typically brain metastases, which often present with clinical urgency and compressed treatment timelines. Several factors contribute to the technical difficulty of SRS planning: critical organs at risk (OARs) in close proximity to targets, the need for steep dose gradients to minimize normal brain exposure, and the extra precision required when the entire prescribed dose is delivered in one session. Given the current shortage of qualified treatment planners [4,5] and the specialized nature of SRS, these treatments are largely confined to large academic medical centers [6,7].

Automated treatment planning using artificial intelligence (AI) offers a potential solution to improve access and reduce workforce burden. Prior work in AI-driven treatment planning has largely relied on neural networks trained on institutional retrospective data for specific tumor sites. Several groups have reported successful implementations of this approach [8][9][10]. However, these methods have notable limitations. They are constrained to the anatomical site and treatment technique represented in the training data. They function as black boxes that offer limited transparency or explainability regarding their optimization decisions [11][12][13][14][15][16][17][18][19][20][21][22][23]. They also do not scale well across institutions, because each implementation remains siloed to the center that performed the development and training.

Regulatory frameworks for AI-based medical devices increasingly emphasize interpretability and transparency [24][25][26][27][28], while surveys of radiation oncology professionals identify explainability as a key determinant of clinical acceptance [29][30][31]. These concerns around model opacity represent substantial barriers to widespread adoption.

The application of large language models (LLMs) to radiation oncology is an emerging area of investigation. Published work has primarily focused on retrieval-augmented generation (RAG) for clinical question answering, protocol compliance verification, and knowledge-grounded decision support [32][33][34]. These applications leverage LLMs’ ability to retrieve and synthesize information from clinical guidelines. By contrast, the present work employs LLMs for iterative, reasoning-driven treatment plan optimization, a fundamentally different task requiring spatial reasoning, constraint satisfaction, and forward simulation of dosimetric consequences. This distinction is critical: retrieval-based systems improve reliability by grounding responses in validated knowledge sources, whereas reasoning-based planning requires the model to perform multi-step logical inference over complex geometric and dosimetric trade-offs. To date, all reported LLM-based planning studies have employed non-reasoning models, and none have addressed the geometric complexity of SRS planning, where transparent, stepwise reasoning is essential.

The present work addresses these gaps. We take SAGE, a general agentic framework previously validated for prostate cancer planning [35], and apply it to SRS. We directly compare a reasoning LLM against a non-reasoning LLM within the same planning framework and on the same tasks. We also include a mechanistic dialogue analysis that connects model behavior to planning outcomes, providing insight into how reasoning architecture influences optimization strategy.

Recent LLM development has produced models optimized for different computational behaviors. While all LLMs fundamentally operate through next-token prediction, some models are specifically trained to generate extended intermediate reasoning steps during inference before producing final outputs. For practical purposes, we refer to these as “reasoning models” versus “non-reasoning models,” recognizing this as a behavioral distinction rather than a fundamental architectural dichotomy [36][37][38]. This behavioral difference, at a functional level, resembles Kahneman’s distinction between System 1 (fast, automatic) and
