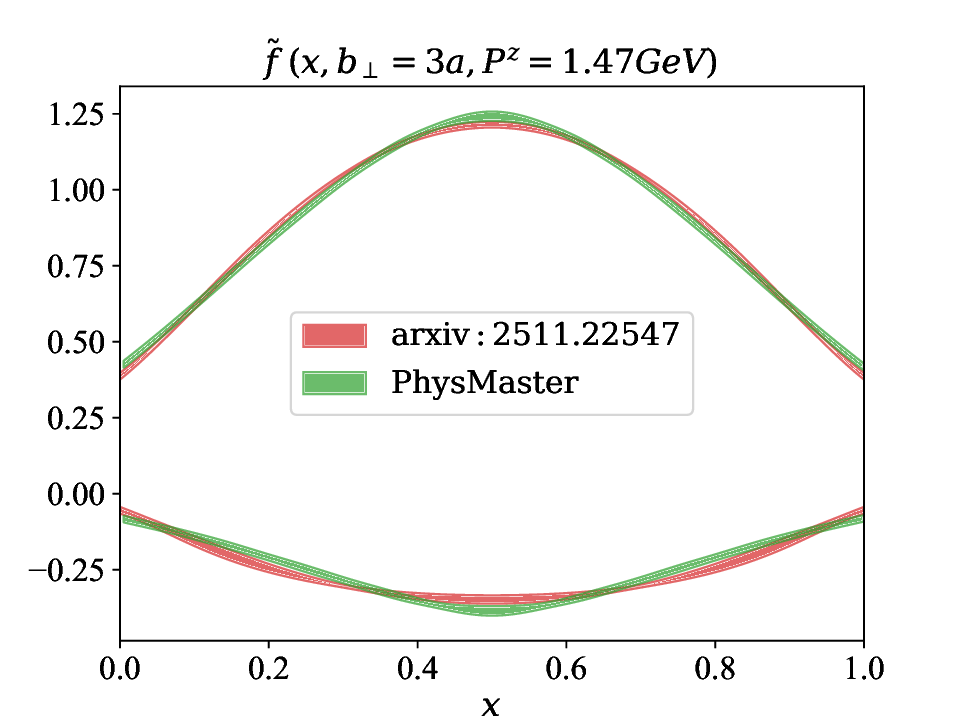

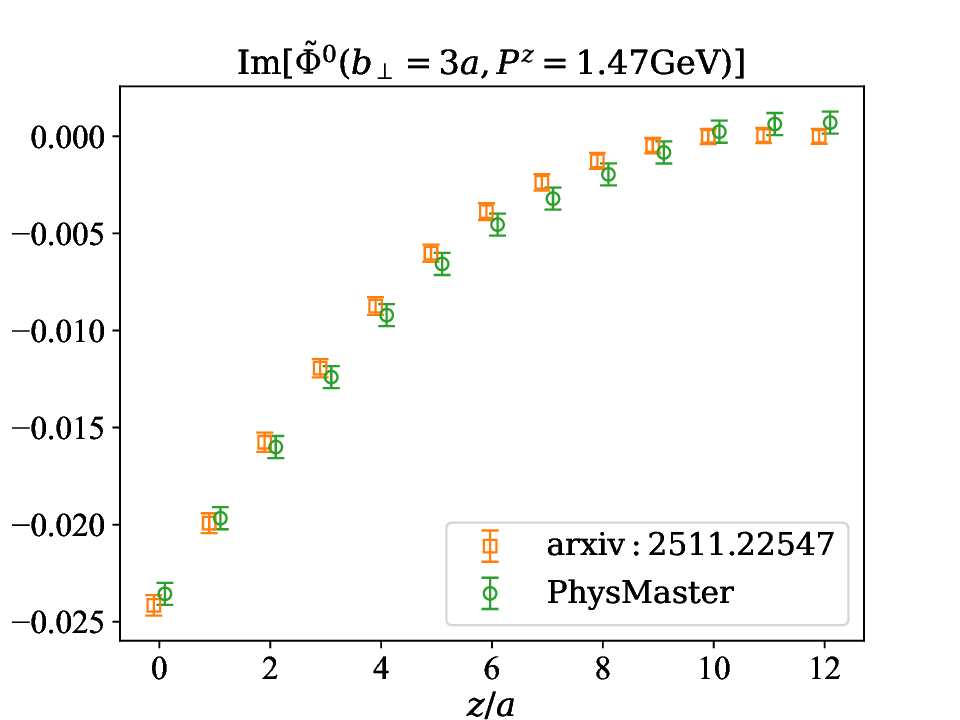

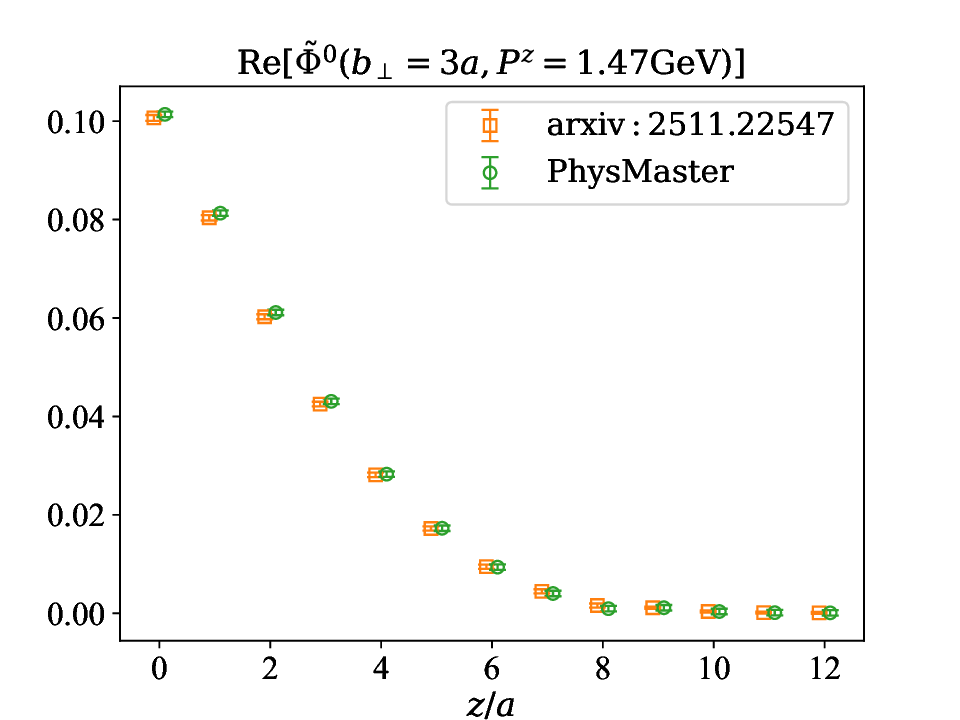

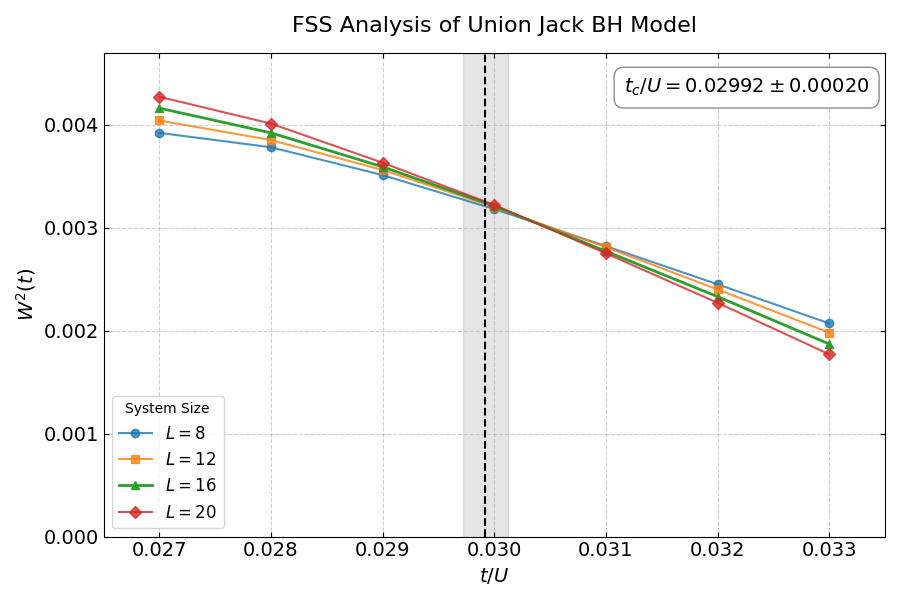

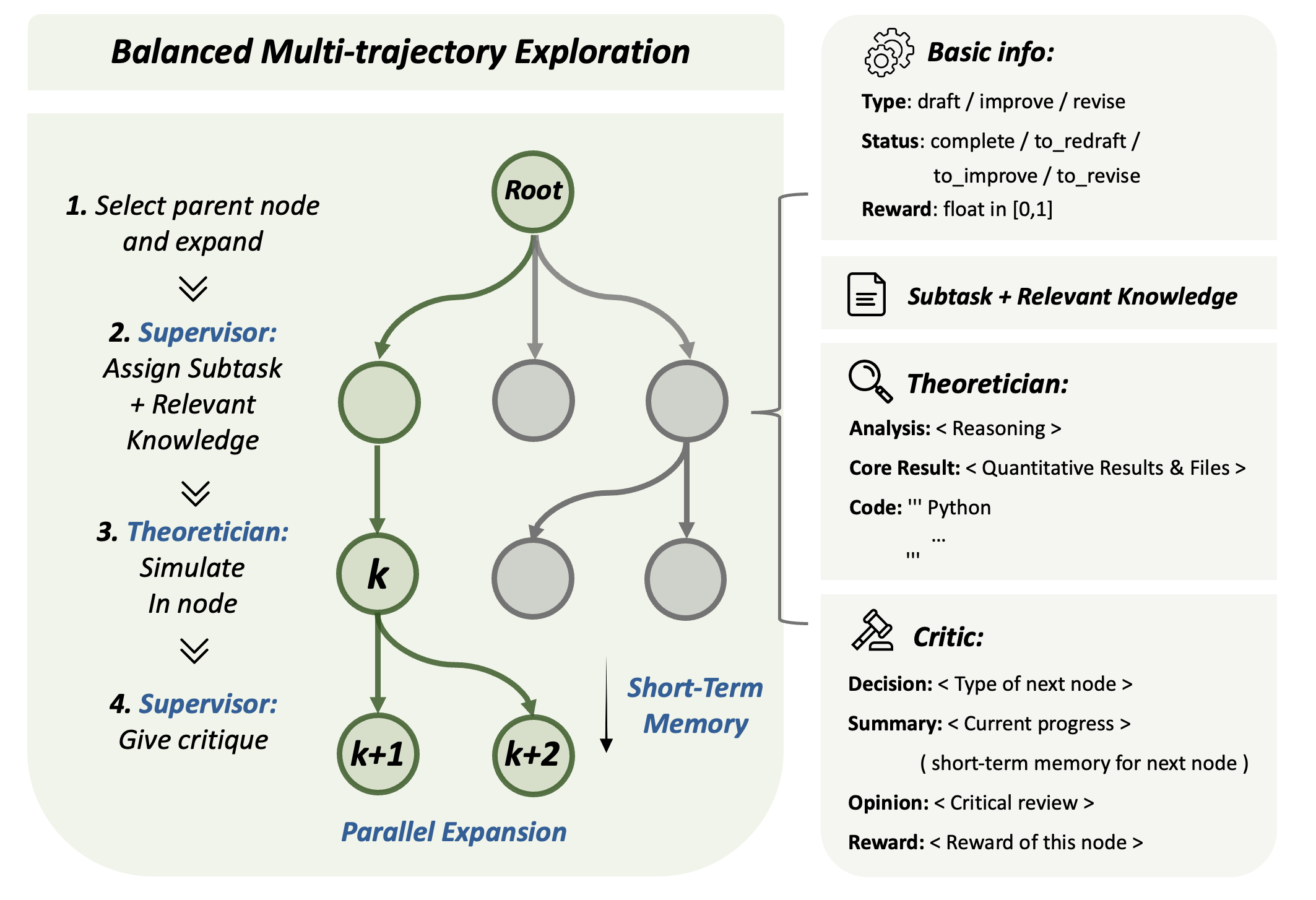

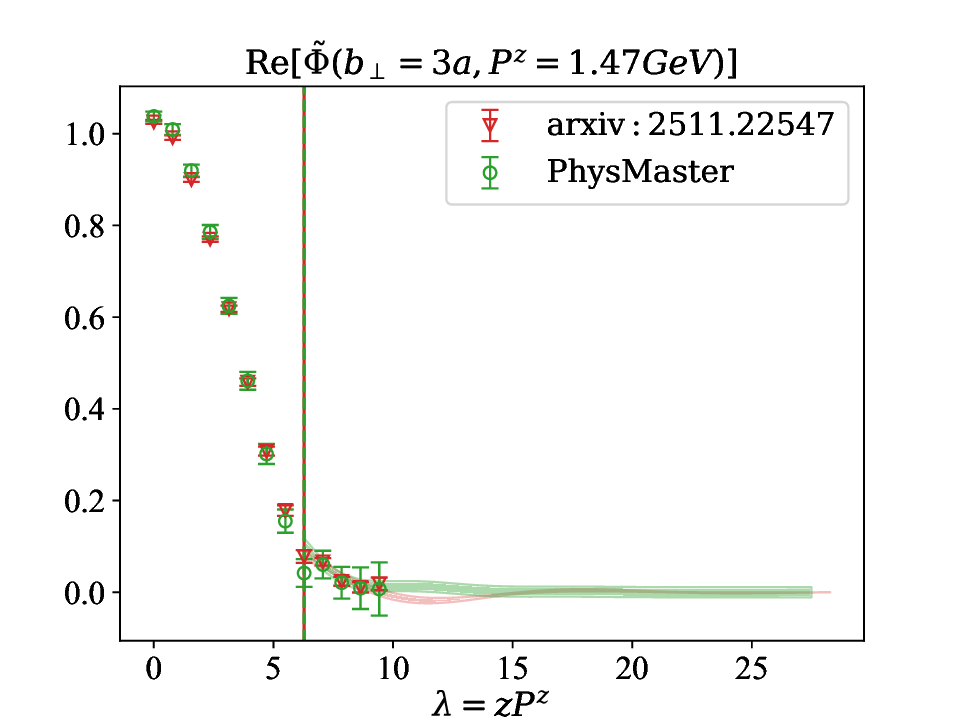

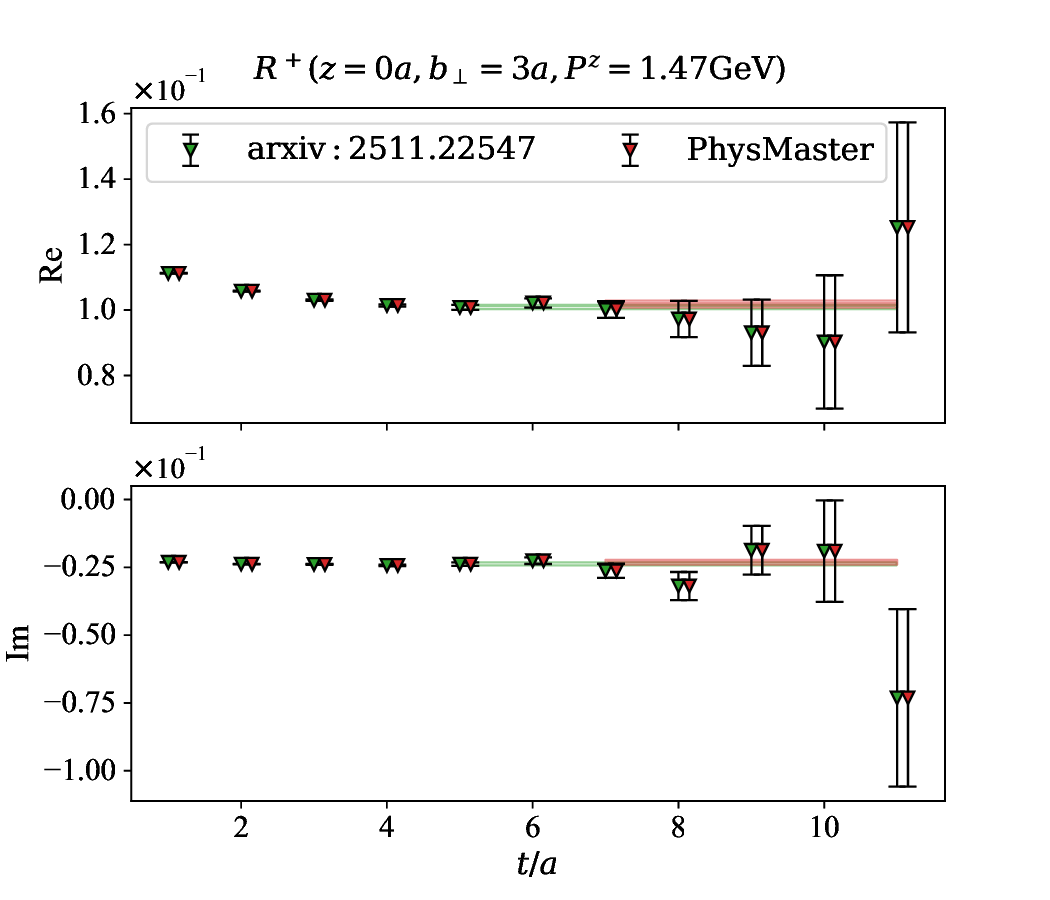

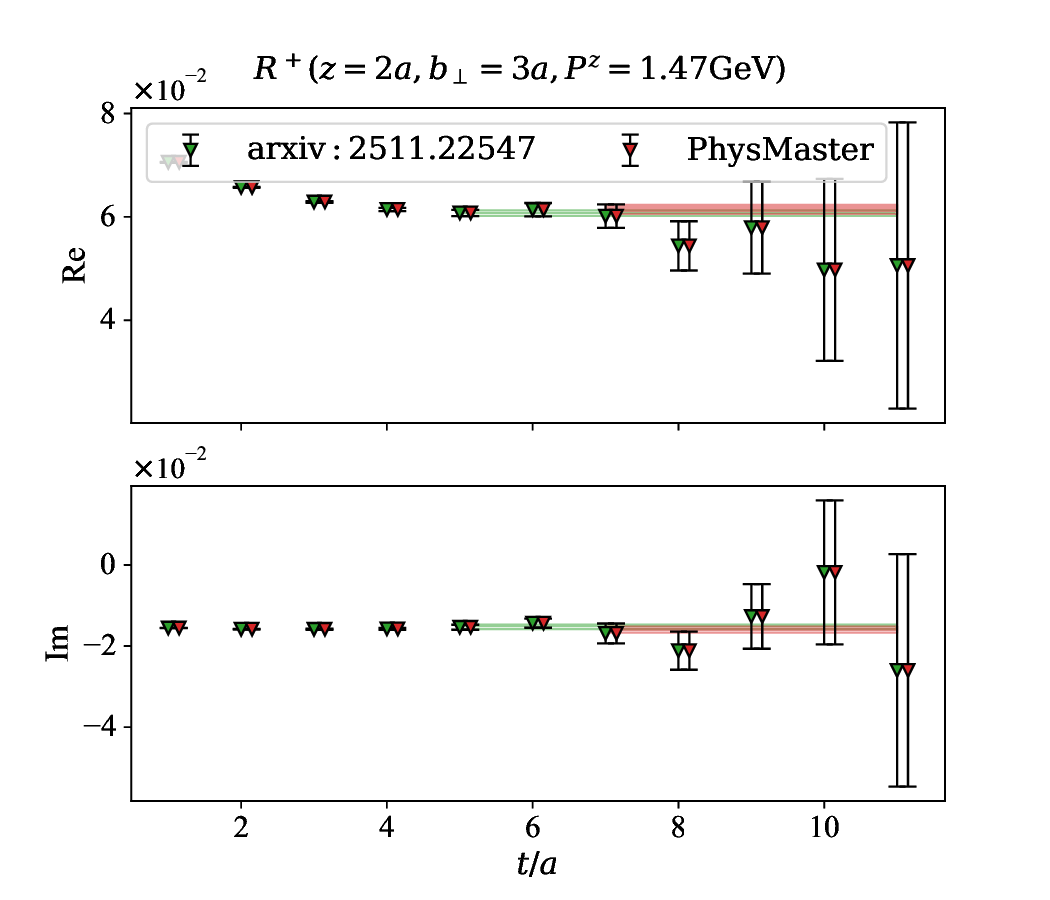

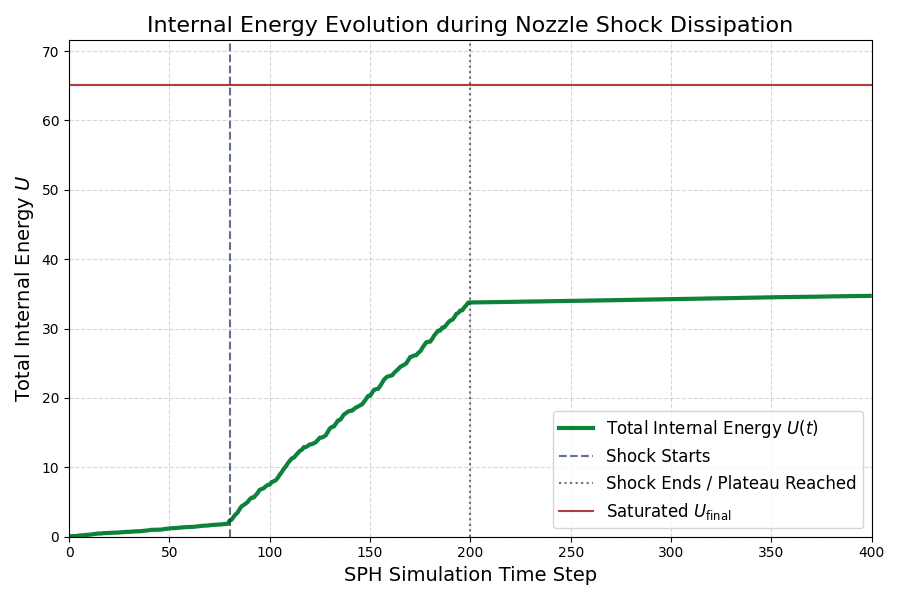

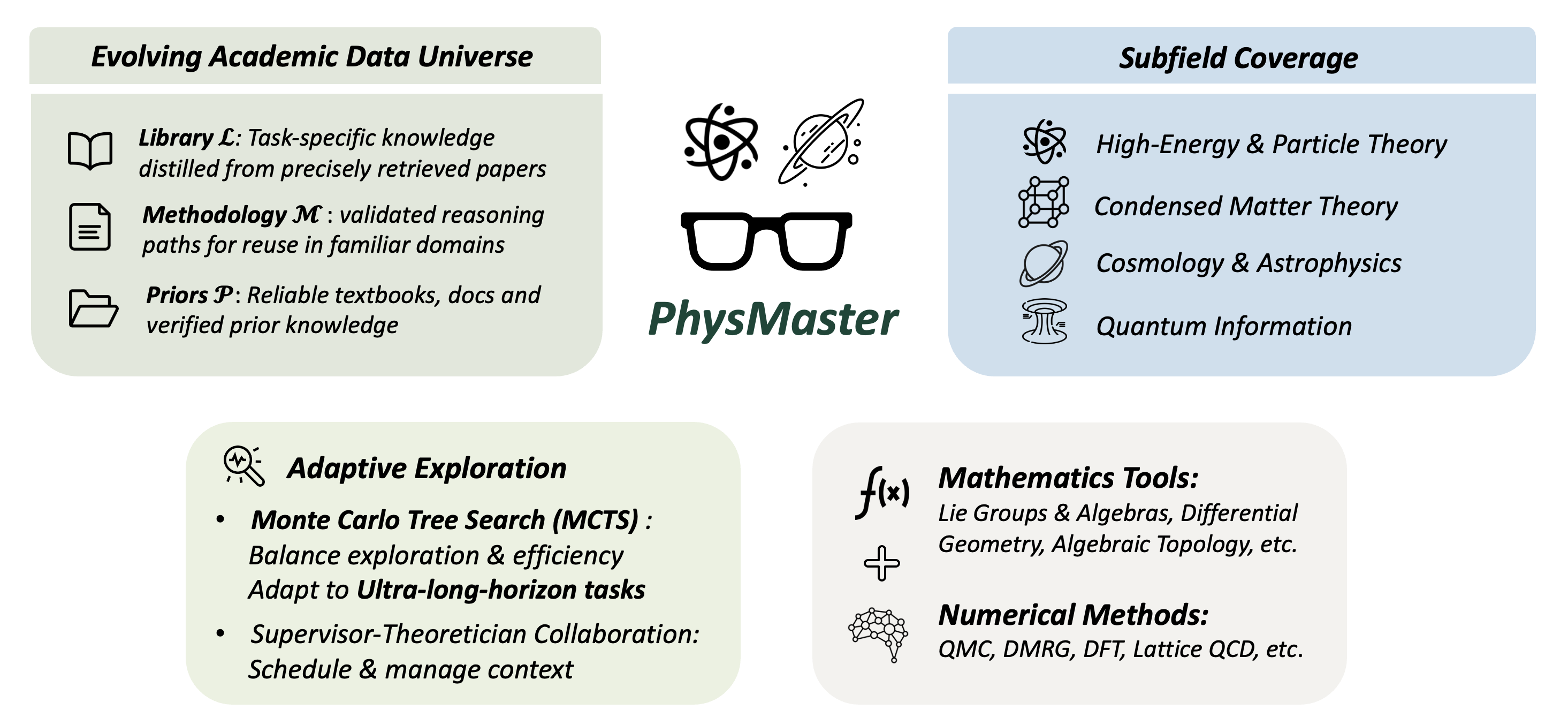

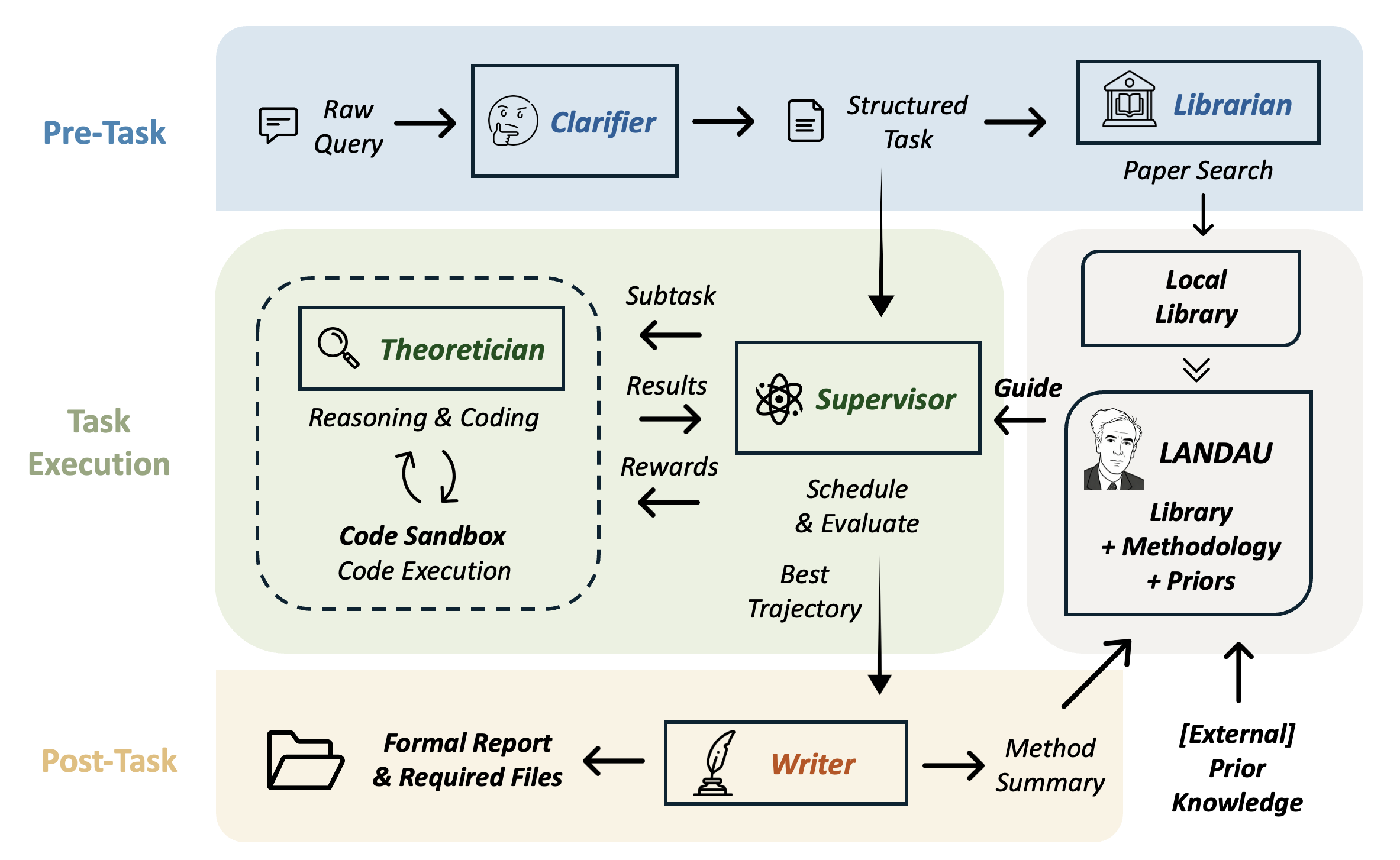

Advances in LLMs have produced agents with knowledge and operational capabilities comparable to human scientists, suggesting potential to assist, accelerate, and automate research. However, existing studies mainly evaluate such systems on well-defined benchmarks or general tasks like literature retrieval, so their end-to-end problem-solving ability in open scientific scenarios remains limited. This is particularly true in physics, which is abstract, mathematically intensive, and requires integrating analytical reasoning with code-based computation. To address this, we propose PhysMaster, an LLM-based agent functioning as an autonomous theoretical and computational physicist. PhysMaster couples abstract reasoning with numerical computation and leverages LANDAU, the Layered Academic Data Universe, which preserves retrieved literature, curated prior knowledge, and validated methodological traces, enhancing decision reliability and stability. It also employs an adaptive exploration strategy that balances efficiency and open-ended exploration, enabling robust performance in ultra-long-horizon tasks. We evaluate PhysMaster on problems ranging from high-energy theory and condensed matter theory to astrophysics, covering: (i) acceleration, compressing labor-intensive research from months to hours; (ii) automation, autonomously executing hypothesis-driven loops; and (iii) autonomous discovery, independently exploring open problems.

Advances in Large Language Models (LLMs) have profoundly reshaped how we live and work, marking a new era in Artificial Intelligence (AI). From early conversational systems such as ChatGPT to more recent models Guo et al. (2025); Jaech et al. (2024); OpenAI (2024b), LLMs have exhibited substantial gains in abstract reasoning, long-horizon planning, and multi-step problem-solving. When augmented with tool-use and action-taking capabilities Schmidgall et al. (2025), these systems increasingly blur the boundary between passive language understanding and active task execution, raising the prospect that AI systems may approach, or in narrowly defined domains surpass, human expert-level performance.

This aligns with the staged framework for Artificial General Intelligence (AGI) articulated by OpenAI OpenAI (2024a), which organizes AI capabilities into five levels:

• Level 1 - Chatbots: AI with conversational language.

• Level 2 - Reasoners: human-level problem-solving.

• Level 3 - Agents: systems that can take actions.

• Level 4 - Innovators: AI that can aid in invention.

• Level 5 - Organizations: AI that can do the work of an organization.

Current frontier systems, augmented with tool-use, planning, and execution capabilities, are generally believed to be at Level 3 (Agents). Meanwhile, the transition from agentic execution (Level 3) to genuine innovation (Level 4), in which AI systems autonomously generate novel hypotheses, conduct verification, and yield novel discoveries, is accelerating. Consequently, the human-AI collaboration paradigm will be fundamentally reshaped: from human-directed AI assistance, towards AI-orchestrated task execution with human oversight, and ultimately to greater autonomy.

It is widely recognized that AI holds the potential to transform scientific research paradigms and accelerate discoveries. In earlier stages, AI primarily functioned as a powerful, tool-like enabler of science, supporting tasks such as prediction, simulation, and data-driven inference. Scientific foundation models have emerged as powerful capability engines, with recent domain-specific models setting or approaching frontiers in key sub-tasks. For instance, AlphaFold3 advances biomolecular structure and interaction prediction Abramson et al. (2024), while GNoME enables large-scale discovery of stable inorganic crystals Merchant et al. (2023). GraphCast demonstrates that learned surrogates can rival numerical simulators for complex dynamical systems with significant speedups Lam et al. (2023), and Uni-Mol provides a universal 3D molecular representation framework unifying diverse downstream tasks Zhou et al. (2023). These breakthroughs furnish strong engines for inference, generation, and simulation, all essential components of scientific cycles, yet they underscore that models alone address only fragments of scientific work and become transformative only when integrated into larger systems.

As general AI capabilities advance, AI is evolving from a mere scientific tool into an active research labor force, enabling the rise of AI scientists that can autonomously participate in research and facilitate discovery, albeit within engineered environments. The AI co-scientist from Google/DeepMind, a multi-agent Gemini-based system, employs generate-debate-evolve cycles to propose biomedical hypotheses, some validated experimentally, while amplifying human scientists in prioritization and leaving final judgment to them Natarajan et al. (2025). In parallel, The AI Scientist and AI Scientist-v2 from Sakana AI automate the research loop in machine learning, from ideation to experiment design and paper writing, achieving peer-reviewed acceptance of fully AI-generated papers Lu et al. (2024); Yamada et al. (2025). Beyond these, Robin offers a multi-agent framework automating literature research, hypothesis generation, experiment planning, and data analysis, applied in lab-in-the-loop settings to discover therapeutic candidates Ghareeb et al. (2025). Further, the recent Kosmos sustains long-horizon cycles of literature search, data analysis, and hypothesis generation to produce traceable scientific reports and cross-domain discoveries Mitchener et al. (2025). Denario presents a modular multi-agent research assistant that covers idea generation, literature review, planning, and code execution across multiple scientific domains; however, its authors also report frequent computational mistakes and occasional unsupported claims, underscoring the continued need for rigorous mathematical and numerical verification Villaescusa-Navarro et al. (2025). Despite these impressive demonstrations, current AI scientist systems are still largely optimized for text-centric domains: they have limited ability to manipulate rigorous mathematical formalisms and to conduct robust numerical computation for verification, and they adapt poorly to long-horizon workflows.

Physics is a uniquely fundamental and comprehensive enterprise: it seeks u
