Large language models are exposed to risks of extraction, distillation, and unauthorized fine-tuning. Existing defenses use watermarking or monitoring, but these act only after leakage. We design AlignDP, a hybrid privacy lock that blocks knowledge transfer at the data interface. The key idea is to separate rare and non-rare fields. Rare fields are shielded by PAC indistinguishability, giving effectively zero-epsilon local DP. Non-rare fields are privatized with RAPPOR, giving unbiased frequency estimates under local DP. A global aggregator enforces composition and the privacy budget. This two-tier design hides rare events and adds controlled noise to frequent events. We prove the limits of extending PAC protection to global aggregation, give bounds for RAPPOR estimates, and analyze the utility trade-off. A toy simulation confirms feasibility: rare categories remain hidden, and frequent categories are recovered with small error.

Large language models are increasingly deployed at scale and face risks of knowledge extraction, distillation, unauthorized fine-tuning, and targeted editing. Current defenses such as watermarking, usage monitoring, or legal policy operate after leakage has occurred. They do not prevent misuse at the point of data release.

We take a different approach. We introduce AlignDP, a hybrid privacy lock that blocks transfer of sensitive knowledge by design. The central idea is to treat rare and non-rare events differently. Rare events are highly identifying and pose the greatest risk if revealed. Non-rare events, by contrast, can be estimated under carefully applied noise.

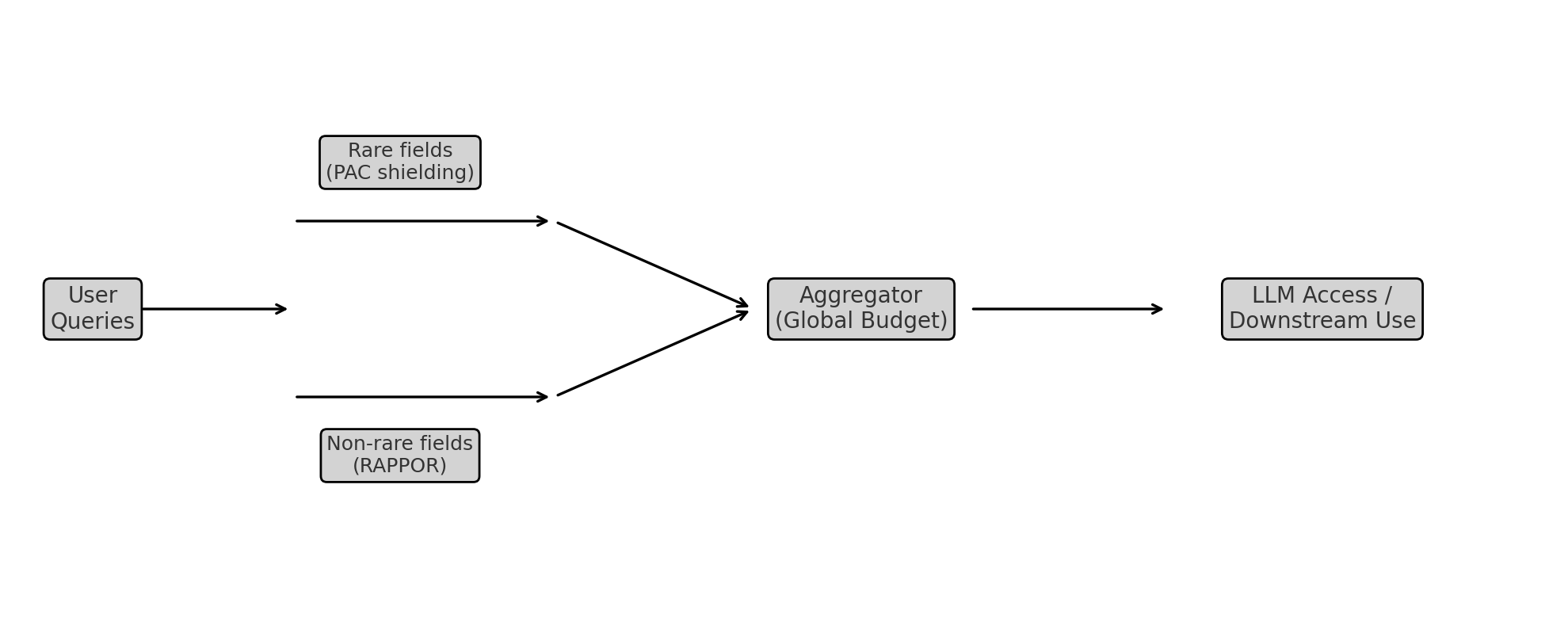

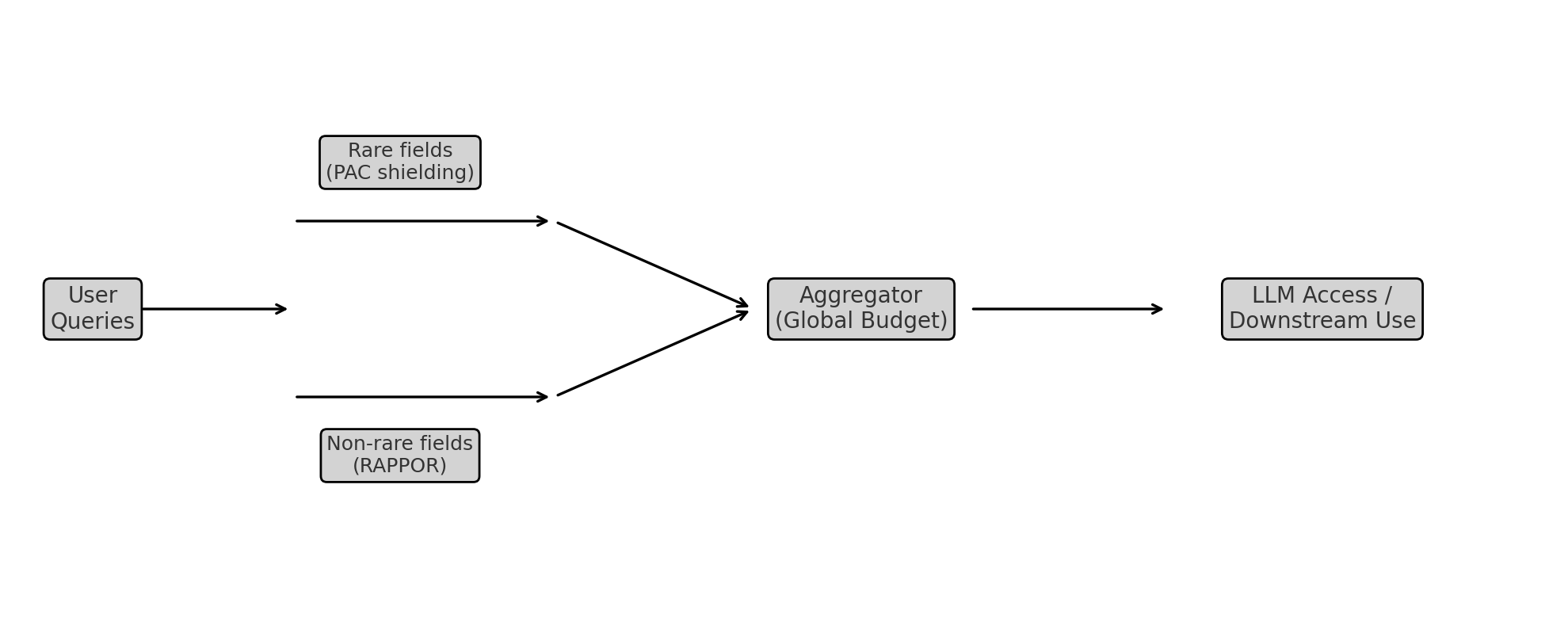

AlignDP implements a two-tier design. Rare events are shielded using PAC indistinguishability, yielding effective zero-ϵ local DP guarantees. Non-rare events are privatized using RAPPOR, which supports unbiased frequency estimation in aggregate while concealing individual values. A global aggregator enforces composition rules and manages adaptive budget allocation.

The result is a hybrid mechanism that enables useful aggregate statistics while preventing extraction of rare signals. It also makes fine-tuning on privatized outputs less effective, since gradients are derived from noisy or absent labels. We provide theoretical analysis, formal bounds, and a small operational illustration.

Our contributions are:

• A rarity-aware two-tier privacy model for LLM telemetry.

• PAC shielding for rare events and local DP guarantees for non-rare events.

• A proof that PAC protection does not extend globally, motivating DP composition.

• A mechanism view of AlignDP as a privacy lock consistent with Lock-LLM goals.

We highlight three main directions of prior work.

The first is differential privacy. Central DP has been applied to model training by adding noise to gradients [1]. Local DP is used in telemetry and frequency estimation [4,3]. Hybrid approaches that combine local and global control are less common.

The second direction is protection for LLMs. Watermarking embeds detectable patterns in model outputs [7]. Detection-based defenses analyze outputs for signs of extraction [2]. These approaches operate after leakage has occurred, rather than preventing it.

The third direction is distillation and fine-tuning. Distillation is widely used for compression [5]. Attacks have shown that even black-box queries can reproduce models [9,6]. Detection of unauthorized fine-tuning has been explored [8], but current guarantees are weak.

Our work takes a different path. We propose a rarity-aware privacy lock. PAC shielding hides rare events, RAPPOR privatizes non-rare events, and an aggregator enforces the privacy budget. This provides a mechanism that prevents leakage by design, rather than by post hoc detection.

We study a data release setting for LLM logs. Each user has a record $X = (X_1, \dots, X_d)$. Each field $X_i$ takes values in a domain $D_i$ with distribution $\mu_i$.

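We fix a threshold $\alpha > 0$. If $\mu_i(x) < \alpha$, we mark the event $x$ as rare; otherwise it is non-rare. The rare set is

$$R_i = \{x \in D_i : \mu_i(x) < \alpha\},$$

and the non-rare set is

$$N_i = \{x \in D_i : \mu_i(x) \ge \alpha\}.$$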

The AlignDP mechanism M works in two parts.

- If $x \in R_i$: the output is the symbol $x$, but the guarantee is PAC indistinguishability. The frequency of such events cannot be distinguished beyond error $\delta(n, \alpha)$.

- If $x \in N_i$: encode $x$ as a bit vector $v \in \{0, 1\}^m$. Each bit flips with probability $p$, and the privatized vector $y$ is sent. This is RAPPOR.
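The two-part routing can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the AlignDP implementation: the helper names (`split_by_rarity`, `one_hot`, `flip_bits`, `mechanism_m`) are hypothetical, the distribution $\mu_i$ is assumed known, and the bit vector is a plain one-hot encoding over $N_i$ (so $m = |N_i|$, an assumption). Full RAPPOR additionally hashes values into a Bloom filter and applies a permanent and an instantaneous randomized response; both stages are omitted here.

```python
import random
from typing import Dict, List, Sequence, Set, Tuple, Union


def split_by_rarity(mu: Dict[str, float], alpha: float) -> Tuple[Set[str], List[str]]:
    """Return (R_i, N_i) with R_i = {x : mu(x) < alpha} and N_i = {x : mu(x) >= alpha} (sorted)."""
    if alpha <= 0:
        raise ValueError("threshold alpha must be positive")
    rare = {x for x, prob in mu.items() if prob < alpha}
    non_rare = sorted(x for x, prob in mu.items() if prob >= alpha)
    return rare, non_rare


def one_hot(x: str, non_rare: Sequence[str]) -> List[int]:
    """Encode x as a bit vector v in {0,1}^m, taking m = |N_i| (an assumption)."""
    return [1 if value == x else 0 for value in non_rare]


def flip_bits(v: List[int], p: float, rng: random.Random) -> List[int]:
    """Flip each bit independently with probability p."""
    return [bit ^ 1 if rng.random() < p else bit for bit in v]


def mechanism_m(x: str, rare: Set[str], non_rare: Sequence[str], p: float,
                rng: random.Random) -> Tuple[str, Union[str, List[int]]]:
    """Hypothetical two-tier routing of a single field value x."""
    if x in rare:
        # Rare path: the symbol goes to the aggregator, which publishes only PAC bounds.
        return ("rare", x)
    # Non-rare path: per-bit randomized response on the one-hot encoding.
    return ("non_rare", flip_bits(one_hot(x, non_rare), p, rng))


# Toy distribution for one field (illustrative numbers only).
mu_i = {"code": 0.55, "chat": 0.40, "medical": 0.04, "legal": 0.01}
R_i, N_i = split_by_rarity(mu_i, alpha=0.05)
rng = random.Random(7)
print(sorted(R_i), N_i)                            # ['legal', 'medical'] ['chat', 'code']
print(mechanism_m("legal", R_i, N_i, 0.25, rng))   # ('rare', 'legal')
# The non-rare output is a 2-bit vector whose bits have been randomly flipped.
print(mechanism_m("code", R_i, N_i, 0.25, rng))
```

With $p = 0$ the non-rare path returns the one-hot vector unchanged, which is a convenient sanity check; raising $p$ trades accuracy for privacy.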

For non-rare events, the mechanism is $\epsilon$-LDP with $\epsilon = \log \frac{1-p}{p}$.
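The relation between the flip probability and the privacy level is easy to tabulate. The small Python sketch below (function names are hypothetical) computes $\epsilon = \log\frac{1-p}{p}$ and its inverse $p = 1/(1 + e^{\epsilon})$, obtained by solving for $p$. It restricts $p$ to $(0, 1/2]$ so that $\epsilon \ge 0$, a convention assumed here.

```python
import math


def epsilon_from_flip(p: float) -> float:
    """Privacy level epsilon = log((1 - p) / p) for bit-flip probability p."""
    if not 0 < p <= 0.5:
        raise ValueError("flip probability p must lie in (0, 1/2]")
    return math.log((1 - p) / p)


def flip_from_epsilon(eps: float) -> float:
    """Inverse map p = 1 / (1 + e^eps), from solving eps = log((1 - p) / p) for p."""
    if eps < 0:
        raise ValueError("epsilon must be non-negative")
    return 1.0 / (1.0 + math.exp(eps))


# p = 1/2 gives epsilon = 0 (pure noise); p = 1/4 gives epsilon = log 3.
for p in (0.5, 0.25, 0.1):
    print(p, round(epsilon_from_flip(p), 4))
# prints: 0.5 0.0 / 0.25 1.0986 / 0.1 2.1972
```

Smaller flip probabilities give larger $\epsilon$, that is, less noise and weaker privacy.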

Let $y_j$ be the fraction of reports equal to category $j$. The unbiased estimator of the frequency is

$$\hat{\mu}_i(j) = \frac{y_j - \frac{1-q}{k-1}}{q - \frac{1-q}{k-1}},$$

where $k = |D_i|$ and $q = 1 - p + \frac{p}{k}$. The aggregator $A$ collects all outputs. For rare events it keeps counts and reports only PAC bounds. For non-rare events it debiases the RAPPOR reports and computes $\hat{\mu}_i(x)$.
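To make the debiasing step concrete, here is a minimal Python sketch that simulates reports and applies the estimator $\hat{\mu}_i(j) = \big(y_j - \frac{1-q}{k-1}\big) / \big(q - \frac{1-q}{k-1}\big)$ with $q = 1 - p + p/k$. It assumes the reporting model under which that $q$ arises, namely k-ary randomized response: each user keeps the true value with probability $1 - p$ and otherwise reports a uniform draw from the $k$ categories. The function names and synthetic frequencies are illustrative only.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence


def randomize(x: str, domain: Sequence[str], p: float, rng: random.Random) -> str:
    """k-ary randomized response: with probability p, replace x by a uniform draw from the domain."""
    return rng.choice(domain) if rng.random() < p else x


def debias(reports: List[str], domain: Sequence[str], p: float) -> Dict[str, float]:
    """Unbiased estimate mu_hat(j) = (y_j - (1-q)/(k-1)) / (q - (1-q)/(k-1)), q = 1 - p + p/k."""
    k = len(domain)
    if k < 2 or not reports or not 0 <= p < 1:
        raise ValueError("need k >= 2, at least one report, and 0 <= p < 1")
    q = 1 - p + p / k           # P(report j | true value j)
    off = (1 - q) / (k - 1)     # P(report j | true value != j), which equals p / k
    counts = Counter(reports)
    n = len(reports)
    return {j: (counts[j] / n - off) / (q - off) for j in domain}


rng = random.Random(42)
domain = ["a", "b", "c", "d"]
true_freq = [0.5, 0.3, 0.15, 0.05]
truth = rng.choices(domain, weights=true_freq, k=200_000)
reports = [randomize(x, domain, p=0.5, rng=rng) for x in truth]
estimates = debias(reports, domain, p=0.5)
for j, f in zip(domain, true_freq):
    print(j, f, round(estimates[j], 3))
```

The estimates always sum to one, but an individual estimate can dip slightly below zero for very small frequencies; clipping such values is a common post-processing step, at the cost of some bias.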

The adversary can issue repeated queries and try to reconstruct training data, but it only sees privatized telemetry. For rare events, the PAC bound hides the signal. For non-rare events, randomized response prevents exact recovery. The aggregator enforces a global budget, so repeated queries cannot accumulate large leakage.
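Global budget enforcement can be illustrated with a simple accountant. This sketch assumes basic sequential composition, under which the $\epsilon$ values of answered queries add up; the composition rule the aggregator actually uses is not specified here, and the class `BudgetAccountant` is hypothetical.

```python
class BudgetAccountant:
    """Hypothetical global privacy-budget tracker using basic sequential composition:
    the epsilons of answered queries add up and may never exceed the total budget."""

    def __init__(self, total_epsilon: float) -> None:
        if total_epsilon <= 0:
            raise ValueError("total budget must be positive")
        self.total = total_epsilon
        self.spent = 0.0

    def request(self, epsilon: float) -> bool:
        """Charge epsilon and return True if it fits in the remaining budget; otherwise refuse."""
        if epsilon < 0:
            raise ValueError("epsilon must be non-negative")
        if self.spent + epsilon > self.total + 1e-12:  # small slack for float round-off
            return False
        self.spent += epsilon
        return True

    @property
    def remaining(self) -> float:
        return self.total - self.spent


accountant = BudgetAccountant(total_epsilon=3.0)
answers = [accountant.request(1.0) for _ in range(5)]
print(answers, accountant.remaining)  # [True, True, True, False, False] 0.0
```

Refusing queries once the budget is exhausted is what prevents leakage from accumulating in this model. Tighter accounting, such as advanced composition, can permit more queries for the same total at the cost of a small failure probability $\delta$.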

Figure 1 shows the AlignDP pipeline. User queries are split by the rarity threshold. Rare events go through PAC shielding; non-rare events go through RAPPOR. The aggregator combines the two streams, applies PAC bounds and debiasing, and updates the global budget. Only aggregated outputs pass to the LLM or downstream system.
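As a rough end-to-end illustration of this pipeline for a single field, the sketch below splits records by rarity, privatizes non-rare values, debiases them in the aggregator, and publishes only a threshold bound for rare categories. Several pieces are assumptions rather than details from the text: the name `run_pipeline`, k-ary randomized response over $N_i$ as the reporting model, publishing frequencies conditional on the non-rare stream, and the string `"< alpha"` standing in for a PAC bound. Budget accounting is omitted.

```python
import random
from collections import Counter
from typing import Dict, List, Sequence, Set, Union


def run_pipeline(records: List[str], rare: Set[str], non_rare: Sequence[str],
                 p: float, alpha: float, rng: random.Random) -> Dict[str, Union[float, str]]:
    """Hypothetical single-field pass: split by rarity, privatize, aggregate, publish."""
    k = len(non_rare)
    if k < 2 or not 0 <= p < 1:
        raise ValueError("need k >= 2 non-rare categories and 0 <= p < 1")
    reports: List[str] = []
    for x in records:
        if x in rare:
            continue  # rare path: excluded from the published estimates
        # Non-rare path: k-ary randomized response over N_i (assumed reporting model).
        reports.append(rng.choice(non_rare) if rng.random() < p else x)
    if not reports:
        raise ValueError("no non-rare reports to aggregate")

    # Aggregator: debias frequencies within the non-rare stream.
    q = 1 - p + p / k
    off = (1 - q) / (k - 1)
    counts = Counter(reports)
    m = len(reports)
    published: Dict[str, Union[float, str]] = {
        x: (counts[x] / m - off) / (q - off) for x in non_rare
    }
    # Rare categories: publish only the threshold bound, never a count.
    for x in rare:
        published[x] = f"< {alpha}"
    return published


mu_i = {"code": 0.55, "chat": 0.40, "medical": 0.04, "legal": 0.01}  # illustrative only
rng = random.Random(1)
records = rng.choices(list(mu_i), weights=list(mu_i.values()), k=100_000)
out = run_pipeline(records, {"medical", "legal"}, ["chat", "code"], p=0.5, alpha=0.05, rng=rng)
print(out)
```

With these toy numbers the non-rare estimates should land near the conditional frequencies 0.55/0.95 and 0.40/0.95, while both rare categories appear only as lying below the threshold. It is an illustration under the assumptions above, not a reproduction of the reported toy simulation.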

We give three main results with short sketches.

Theorem 1. Let $x$ be an event with $\mu(x) < \alpha$. For $n$ i.i.d. samples, the probability of distinguishing $x$ from other rare events is at most $\delta(n, \alpha)$.

Thus rare events satisfy effectively zero-ϵ local DP.

Sketch. Set $t = \alpha - \mu(x) > 0$. Since $\mu(x) < \alpha$, the adversary cannot distinguish $x$ with probability greater than $\delta(n, \alpha)$. This yields the indistinguishability guarantee.
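The sketch does not spell out the form of $\delta(n, \alpha)$. One plausible concrete reading, assumed here rather than taken from the text, is a one-sided Hoeffding bound on the chance that the empirical frequency $\hat{\mu}(x)$ of a rare event reaches the threshold, using the gap $t = \alpha - \mu(x)$: $\Pr[\hat{\mu}(x) \ge \alpha] \le \exp(-2 n t^2)$. The function name below is hypothetical.

```python
import math


def rare_crossing_bound(n: int, mu_x: float, alpha: float) -> float:
    """One-sided Hoeffding bound exp(-2 n t^2), with t = alpha - mu(x), on
    P(empirical frequency of x over n i.i.d. samples >= alpha)."""
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0 <= mu_x < alpha:
        raise ValueError("x must be rare: 0 <= mu(x) < alpha")
    t = alpha - mu_x
    return math.exp(-2 * n * t * t)


for n in (100, 1_000, 10_000):
    print(n, rare_crossing_bound(n, mu_x=0.01, alpha=0.05))
```

Under this reading the bound shrinks exponentially in $n$ and in the squared gap between $\mu(x)$ and the threshold.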

Theorem 2. For $x \in N_i$, RAPPOR with flip probability $p$ is $\epsilon$-LDP with

$$\epsilon = \log \frac{1-p}{p}.$$
Sketch. The ratio of output probabilities is bounded by $(1-p)/p$. The estimator formula follows from solving the expectation equations under randomized response. The variance follows from the Bernoulli variance of each report.
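Both ingredients of this sketch can be checked numerically. The Python below (hypothetical names) evaluates the single-bit likelihood ratio $(1-p)/p$ and compares the variance predicted from the Bernoulli variance of the reports with a Monte Carlo estimate. It assumes the k-ary randomized-response model implied by $q = 1 - p + p/k$, for which the estimator simplifies to $(y_j - p/k)/(1-p)$ and its variance to $P_j(1 - P_j)/\big(n(1-p)^2\big)$ with $P_j = (1-p)\mu_j + p/k$.

```python
import random
import statistics


def bit_likelihood_ratio(p: float) -> float:
    """P(y = 1 | v = 1) / P(y = 1 | v = 0) for a single bit flipped with probability p."""
    return (1 - p) / p


def estimator_variance(mu_j: float, n: int, p: float, k: int) -> float:
    """Predicted Var of mu_hat(j) = (y_j - p/k) / (1 - p) from the Bernoulli variance of reports."""
    prob_j = (1 - p) * mu_j + p / k  # marginal probability that one report equals j
    return prob_j * (1 - prob_j) / (n * (1 - p) ** 2)


def simulated_variance(mu_j: float, n: int, p: float, k: int, trials: int,
                       rng: random.Random) -> float:
    """Monte Carlo variance of mu_hat(j) under k-ary randomized response."""
    estimates = []
    for _ in range(trials):
        hits = 0
        for _ in range(n):
            is_j = rng.random() < mu_j          # does this user's true value equal j?
            if rng.random() < p:
                hits += rng.randrange(k) == 0   # uniform report; index 0 plays the role of j
            else:
                hits += is_j                    # truthful report
        estimates.append((hits / n - p / k) / (1 - p))
    return statistics.variance(estimates)


rng = random.Random(3)
print(bit_likelihood_ratio(0.25))  # 3.0
theory = estimator_variance(0.3, n=1_000, p=0.25, k=4)
empirical = simulated_variance(0.3, n=1_000, p=0.25, k=4, trials=300, rng=rng)
print(theory, empirical)  # the two values should agree to within Monte Carlo error
```

The simulation draws true values and randomized reports directly rather than sampling from $P_j$, so agreement with the closed form checks the variance propagation rather than assuming it.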
