Finding rare but useful solutions in very large candidate spaces is a recurring practical challenge across language generation, planning, and reinforcement learning. We present a practical framework, \emph{Inverted Causality Focusing Algorithm} (ICFA), that treats search as a target-conditioned reweighting process. ICFA reuses an available proposal sampler and a task-specific similarity function to form a focused sampling distribution, while adaptively controlling focusing strength to avoid degeneracy. We provide a clear recipe, a stability diagnostic based on effective sample size, a compact theoretical sketch explaining when ICFA can reduce sample needs, and two reproducible experiments: constrained language generation and sparse-reward navigation. We further show how structured prompts instantiate an approximate, language-level form of ICFA and describe a hybrid architecture combining prompted inference with algorithmic reweighting.

Large candidate spaces are ubiquitous. Producing a sentence that satisfies many constraints, finding a molecular structure with several desired properties, or discovering a long action sequence that yields reward are all instances of the same core difficulty: good solutions are rare, and naive generation wastes computation. Common practical workarounds (sampling many candidates and selecting the best, beam search, or costly policy training) each carry clear limitations. Sampling scales poorly when targets are rare; beam and tree methods depend on brittle local heuristics; and training-based approaches such as reinforcement learning can be prohibitively expensive and slow to adapt.

We propose a different angle: view search as conditioning on a target. If some numeric measure quantifies how well a candidate matches the target, one can use that measure to skew sampling toward promising areas. ICFA implements this idea by reweighting samples drawn from an available proposal distribution, using a Boltzmann-style transform of a similarity score, and by adaptively controlling the strength of the reweighting so that the process remains numerically stable and diverse.

ICFA is not a magic bullet: its benefit depends on the informativeness of the similarity function. Our contribution is practical: (1) a clear and reproducible algorithm for inference-time focusing that avoids common failure modes; (2) a stability control mechanism that is simple to compute; (3) empirical demonstrations showing that focusing can significantly reduce effective sample needs in representative tasks; and (4) a conceptual bridge to prompting: structured prompts can be understood as a lightweight, language-level approximation of ICFA, useful when algorithmic intervention is impractical.

The rest of the paper is organized as follows. Section 2 defines the framework and its diagnostics. Section 3 gives the algorithmic recipe. Section 4 sketches why and when focusing helps. Section 5 shows how prompting approximates focusing. Section 6 reports experiments. Section 7 discusses limitations and deployment guidance. We close with reproducibility notes.

Let $\mathcal{S}$ be a discrete candidate space and let $P_0(s)$ be a proposal sampler that we can draw from (for example, the distribution implicit in a pretrained language model or a baseline policy). Let $v_{\text{target}}$ denote a target specification and let $S(s, v_{\text{target}})$ be a computable similarity function that scores how well candidate $s$ matches $v_{\text{target}}$. Our goal is to concentrate sampling effort on high-quality candidates without retraining $P_0$.
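To make the two ingredients concrete, the sketch below instantiates them on a toy problem. It is an illustration under assumptions, not one of the paper's tasks: candidates are fixed-length bit strings, $P_0$ is uniform, and $S$ is the fraction of positions that agree with the target. The names `make_uniform_proposal`, `hamming_similarity`, and `Candidate` are hypothetical.

```python
import random
from typing import Callable, Tuple

Candidate = Tuple[int, ...]  # hypothetical toy candidate: a fixed-length bit string

def make_uniform_proposal(length: int, rng: random.Random) -> Callable[[], Candidate]:
    """Return a sampler for P_0; here uniform over {0,1}^length (an illustrative assumption)."""
    def sample() -> Candidate:
        return tuple(rng.randint(0, 1) for _ in range(length))
    return sample

def hamming_similarity(s: Candidate, v_target: Candidate) -> float:
    """S(s, v_target): fraction of positions where s agrees with the target, in [0, 1]."""
    if len(s) != len(v_target):
        raise ValueError("candidate and target must have the same length")
    return sum(a == b for a, b in zip(s, v_target)) / len(v_target)

# Usage: draw a few candidates from P_0 and score them against a fixed target.
rng = random.Random(0)
draw = make_uniform_proposal(8, rng)
target = (1, 0, 1, 1, 0, 0, 1, 0)
batch = [draw() for _ in range(4)]
scores = [hamming_similarity(s, target) for s in batch]
```

Any sampler and any scoring function with these two shapes (a zero-argument draw and a real-valued score) could be swapped in; nothing about focusing depends on the bit-string choice.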

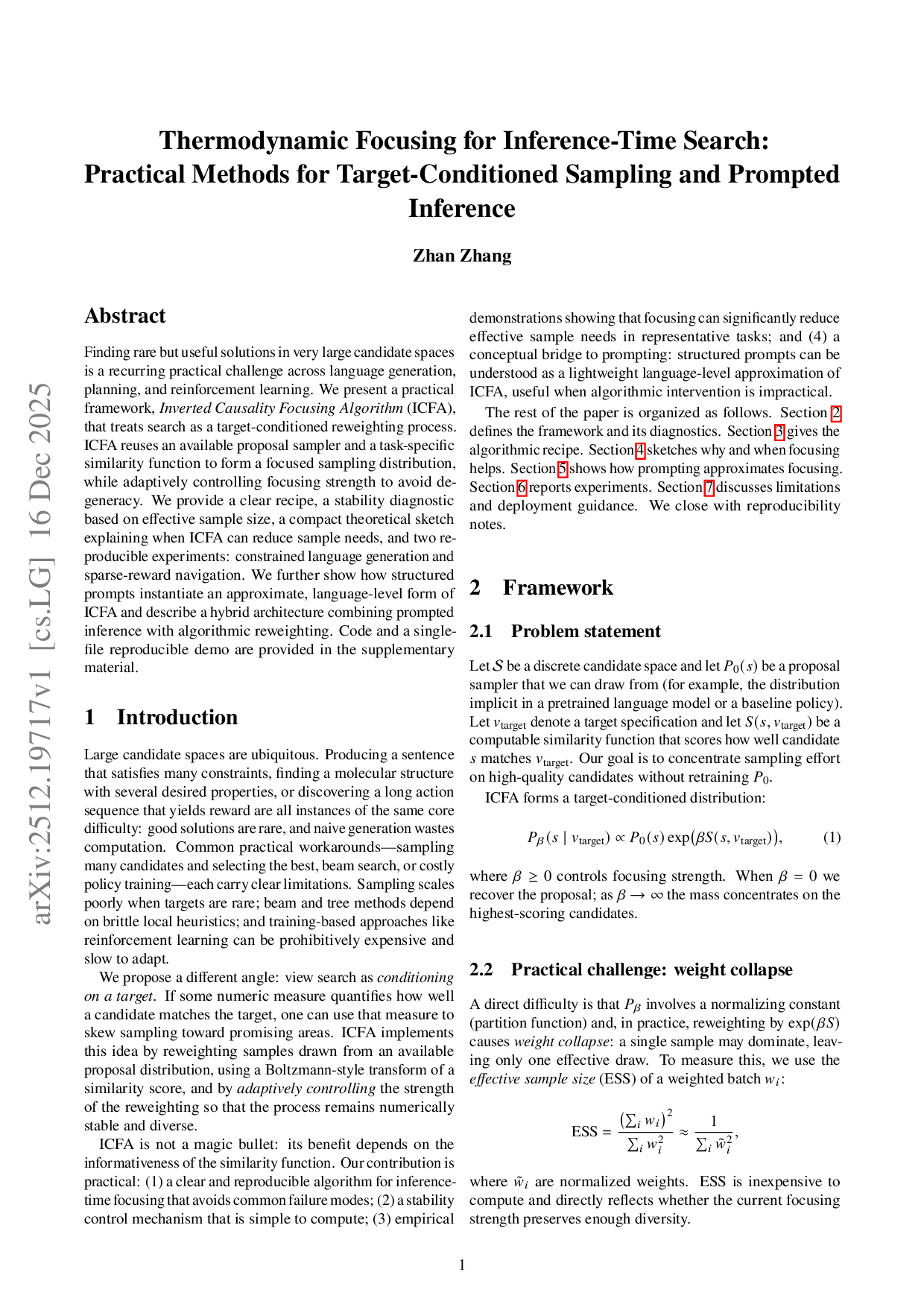

ICFA forms a target-conditioned distribution:

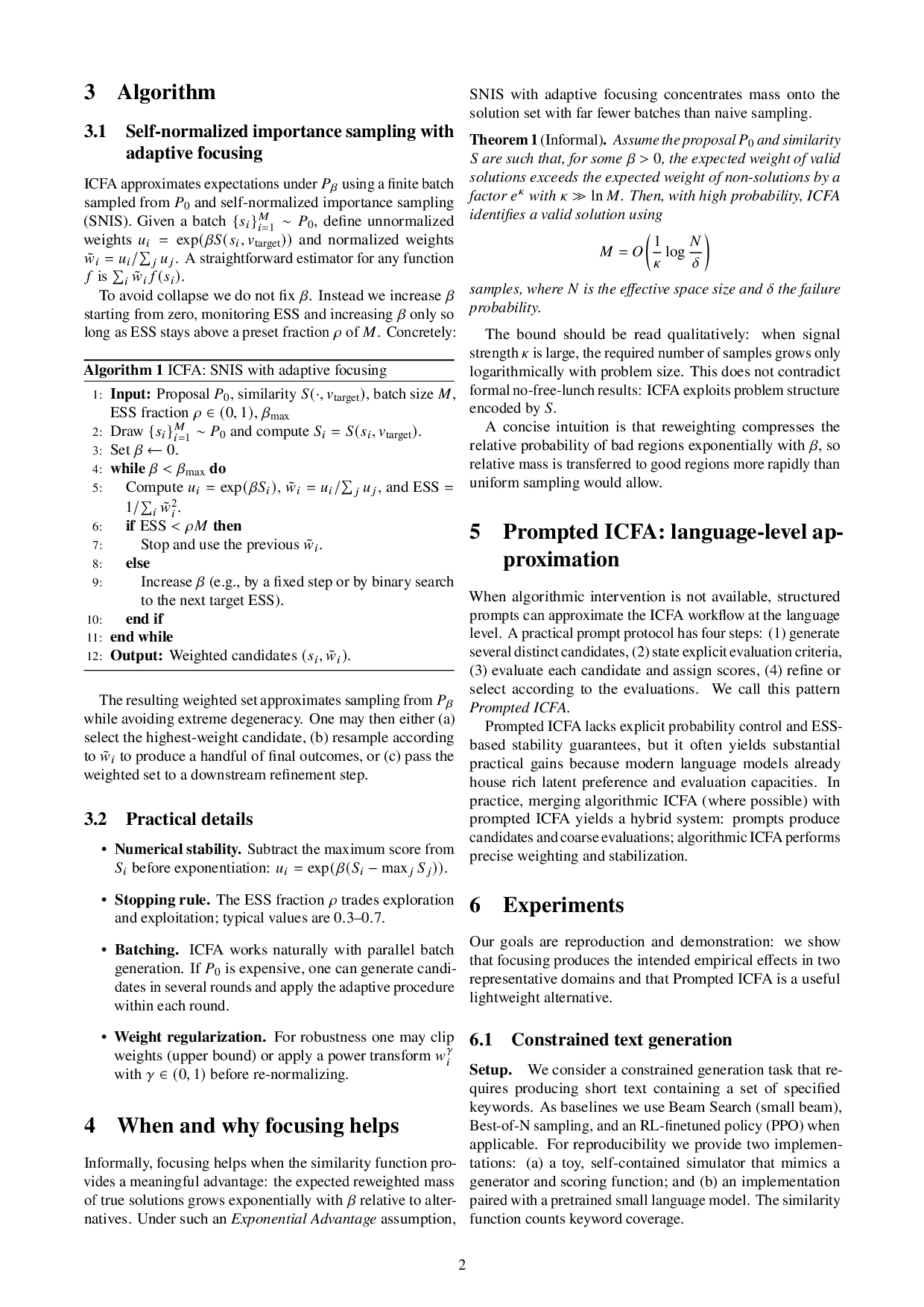

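where $\beta \ge 0$ controls focusing strength. When $\beta = 0$ we recover the proposal; as $\beta \to \infty$ the mass concentrates on the highest-scoring candidates. In practice, ICFA draws a batch $s_1, \dots, s_n \sim P_0$ and assigns self-normalized weights $w_i \propto \exp(\beta\, S(s_i, v_{\text{target}}))$, which equal the importance ratio $P_\beta / P_0$ up to a constant. The resulting weighted set approximates sampling from $P_\beta$, and choosing $\beta$ adaptively keeps the weights from degenerating onto a single sample. One may then either (a) select the highest-weight candidate, (b) resample according to $w_i$ to produce a handful of final outcomes, or (c) pass the weighted set to a downstream refinement step. with $\gamma \in (0, 1)$ before re-normalizing.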
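The weighting, stability check, and selection options can be sketched as follows. This is a minimal sketch under stated assumptions: the batch is drawn from $P_0$, weights are $w_i \propto \exp(\beta\, S(s_i, v_{\text{target}}))$, and the effective sample size is the usual $1/\sum_i w_i^2$ for normalized weights. The rule for adapting $\beta$ (bisection for the largest $\beta$ whose ESS stays above a fixed fraction of the batch size) and the names `snis_weights`, `ess`, `adapt_beta`, `icfa_focus`, `min_ess_frac`, and `beta_max` are our own illustrative choices, not necessarily the paper's exact procedure.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")

def snis_weights(scores: Sequence[float], beta: float) -> List[float]:
    """Self-normalized weights w_i ∝ exp(beta * S_i) for a batch drawn from P_0."""
    m = max(scores)
    raw = [math.exp(beta * (s - m)) for s in scores]  # subtract max score to avoid overflow
    z = sum(raw)
    return [r / z for r in raw]

def ess(weights: Sequence[float]) -> float:
    """Effective sample size of normalized weights, 1 / sum(w_i^2); lies in [1, n]."""
    return 1.0 / sum(w * w for w in weights)

def adapt_beta(scores: Sequence[float], min_ess_frac: float = 0.3,
               beta_max: float = 100.0, iters: int = 50) -> float:
    """Largest beta in [0, beta_max] with ESS >= min_ess_frac * n, found by bisection.

    For fixed scores, ESS is non-increasing in beta >= 0, so bisection is valid.
    """
    target = min_ess_frac * len(scores)
    if ess(snis_weights(scores, beta_max)) >= target:
        return beta_max
    lo, hi = 0.0, beta_max  # invariant: lo meets the ESS floor, hi does not
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ess(snis_weights(scores, mid)) >= target:
            lo = mid
        else:
            hi = mid
    return lo

def icfa_focus(samples: Sequence[T], scores: Sequence[float], k: int = 5,
               min_ess_frac: float = 0.3,
               rng: Optional[random.Random] = None) -> Tuple[float, List[float], T, List[T]]:
    """Reweight a P_0 batch, then return (beta, weights, best, k resampled candidates)."""
    rng = rng or random.Random(0)
    beta = adapt_beta(scores, min_ess_frac)
    w = snis_weights(scores, beta)
    best = samples[max(range(len(samples)), key=lambda i: w[i])]  # option (a): top weight
    resampled = rng.choices(list(samples), weights=w, k=k)        # option (b): resample by w_i
    return beta, w, best, resampled
```

Option (c) corresponds to handing `samples` together with the weights `w` to a refinement stage. Raising `min_ess_frac` trades focusing strength for diversity; lowering it lets $\beta$ grow until only a few candidates carry the mass.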

Informally, focusing helps when the similarity function provides a meaningful advantage: the expected reweighted mass of true solutions grows exponentially with $\beta$ relative to alternatives. Under such an Exponential Advantage assumption, self-normalized importance sampling (SNIS) with adaptive focusing concentrates mass onto the solution set with far fewer batches than naive sampling.
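To see what an exponential advantage buys, consider a deliberately simplified two-level model (our assumption, not the paper's formal condition): solutions hold $P_0$-mass $p$ and all score exactly $\Delta$ above every non-solution. Then the odds of drawing a solution under $P_\beta$ are $\frac{p}{1-p} e^{\beta\Delta}$. The function name `solution_mass` is hypothetical.

```python
import math

def solution_mass(p: float, delta: float, beta: float) -> float:
    """P_beta(solution set) in a hypothetical two-level model: solutions carry
    P_0-mass p and all score exactly `delta` above every non-solution."""
    log_odds = math.log(p / (1.0 - p)) + beta * delta  # odds grow as e^{beta * delta}
    return 1.0 / (1.0 + math.exp(-log_odds))           # logistic of the log-odds

p, delta = 1e-6, 1.0
for beta in (0.0, 5.0, 10.0, 15.0, 20.0):
    print(f"beta={beta:5.1f}  P_beta(solutions)={solution_mass(p, delta, beta):.3e}")
```

This is the mass under the target distribution $P_\beta$ itself. SNIS with a finite batch drawn from $P_0$ can only reweight candidates that actually appear in the batch, so the proposal must still place some mass on solutions for the gain to materialize.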

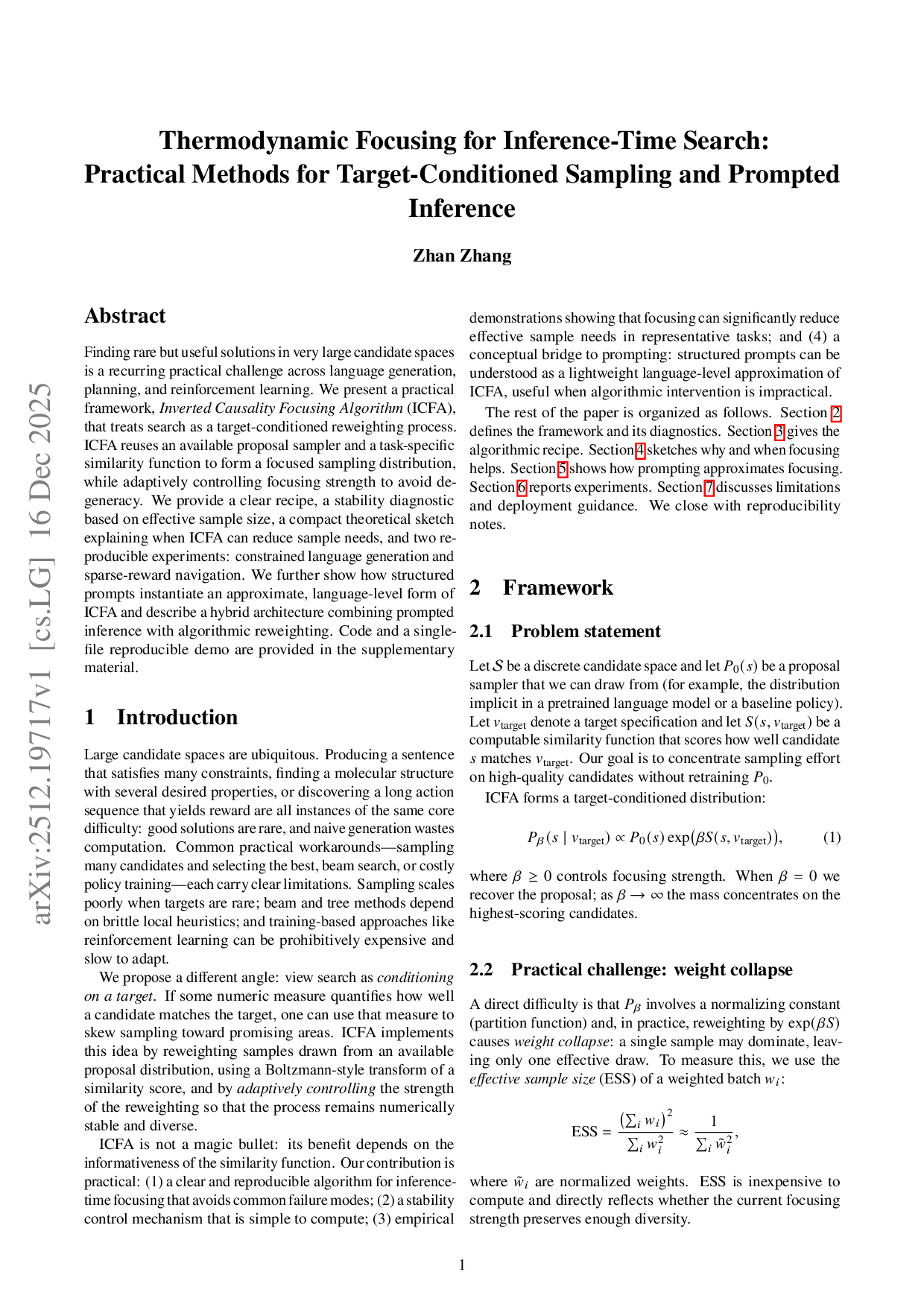

Theorem 1 (Informal). Assume the proposal $P_0$ and similarity $S$ are such that, for some $\beta > 0$, the expected weight of valid solutions exceeds the expected weight of non-solutions by a factor $e^{\kappa}$ with $\kappa \gg \ln M$. Then, with high probability, ICFA identifies a valid solution using

where $N$ is the effective space size and $\delta$ the failure probability.

The bound should be read qualitatively: when the signal strength $\kappa$ is large, the required number of samples grows only logarithmically with problem size. This does not contradict formal no-free-lunch results: ICFA exploits problem structure encoded by $S$.

A concise intuition is that reweighting suppresses the relative probability of bad regions exponentially in $\beta$, so relative mass shifts toward good regions far more rapidly than unweighted sampling from the proposal would allow.
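The intuition follows directly from the definition $P_\beta(s) \propto P_0(s)\exp(\beta\, S(s, v_{\text{target}}))$, because the normalizing constant cancels in any ratio. The second relation below assumes, purely for illustration, a good set $G$ and a bad set $B$ separated by a score margin $\Delta = \min_{s \in G} S(s, v_{\text{target}}) - \max_{s \in B} S(s, v_{\text{target}}) > 0$.

```latex
\frac{P_\beta(s)}{P_\beta(s')}
  = \frac{P_0(s)}{P_0(s')}\,
    \exp\!\big(\beta\,[\,S(s, v_{\text{target}}) - S(s', v_{\text{target}})\,]\big),
\qquad
\frac{P_\beta(G)}{P_\beta(B)} \;\ge\; \frac{P_0(G)}{P_0(B)}\, e^{\beta \Delta}.
```

The odds of the good set relative to the bad set therefore grow at least as $e^{\beta\Delta}$, whereas unweighted sampling leaves them fixed at $P_0(G)/P_0(B)$.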

When algorithmic intervention is not available, structured prompts can approximate the ICFA workflow at the language level. A practical prompt protocol has four steps: (1) generate several distinct candidates, (2) state explicit evaluation criteria, (3) evaluate each candidate and assign scores, (4) refine or select according to the evaluations. We call this pattern Prompted ICFA.
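The four-step protocol can be packaged as a reusable prompt template. The sketch below is a minimal illustration; the function name `prompted_icfa`, the 0-10 scoring scale, and the exact wording are hypothetical choices, not a prompt taken from the paper. The comments map each step to its algorithmic counterpart.

```python
from typing import Sequence

def prompted_icfa(task: str, criteria: Sequence[str], n_candidates: int = 3) -> str:
    """Build one prompt that walks a language model through the four Prompted ICFA steps.

    The wording and the 0-10 scale are hypothetical; adapt them to the task at hand.
    """
    criteria_block = "\n".join(f"  - {c}" for c in criteria)
    return (
        f"Task: {task}\n\n"
        # Step 1: diverse candidates (plays the role of drawing a batch from P_0).
        f"Step 1. Generate {n_candidates} distinct candidate solutions, "
        f"labeled C1..C{n_candidates}.\n"
        # Step 2: explicit criteria (a verbal stand-in for the similarity function S).
        f"Step 2. Use these evaluation criteria:\n{criteria_block}\n"
        # Step 3: per-candidate scores (a verbal analogue of the weights w_i).
        "Step 3. Score each candidate from 0 to 10 on every criterion, with a one-line justification.\n"
        # Step 4: selection and refinement.
        "Step 4. Select the highest-scoring candidate, revise it to fix any criterion it misses, "
        "and output only the final answer."
    )

print(prompted_icfa("Write a product tagline", ["under ten words", "mentions durability"]))
```

Unlike the algorithmic version, nothing here enforces a probability distribution or an ESS floor; the model's own scoring replaces both.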

Prompted ICFA lacks explicit probability control and ESS-based stability guarantees, but it often yields substantial practical gains because modern language models already encode rich latent preference and evaluation capabilities. In practice, merging algorithmic ICFA (where possible) with prompted ICFA yields a hybrid system: prompts produce candidates and c

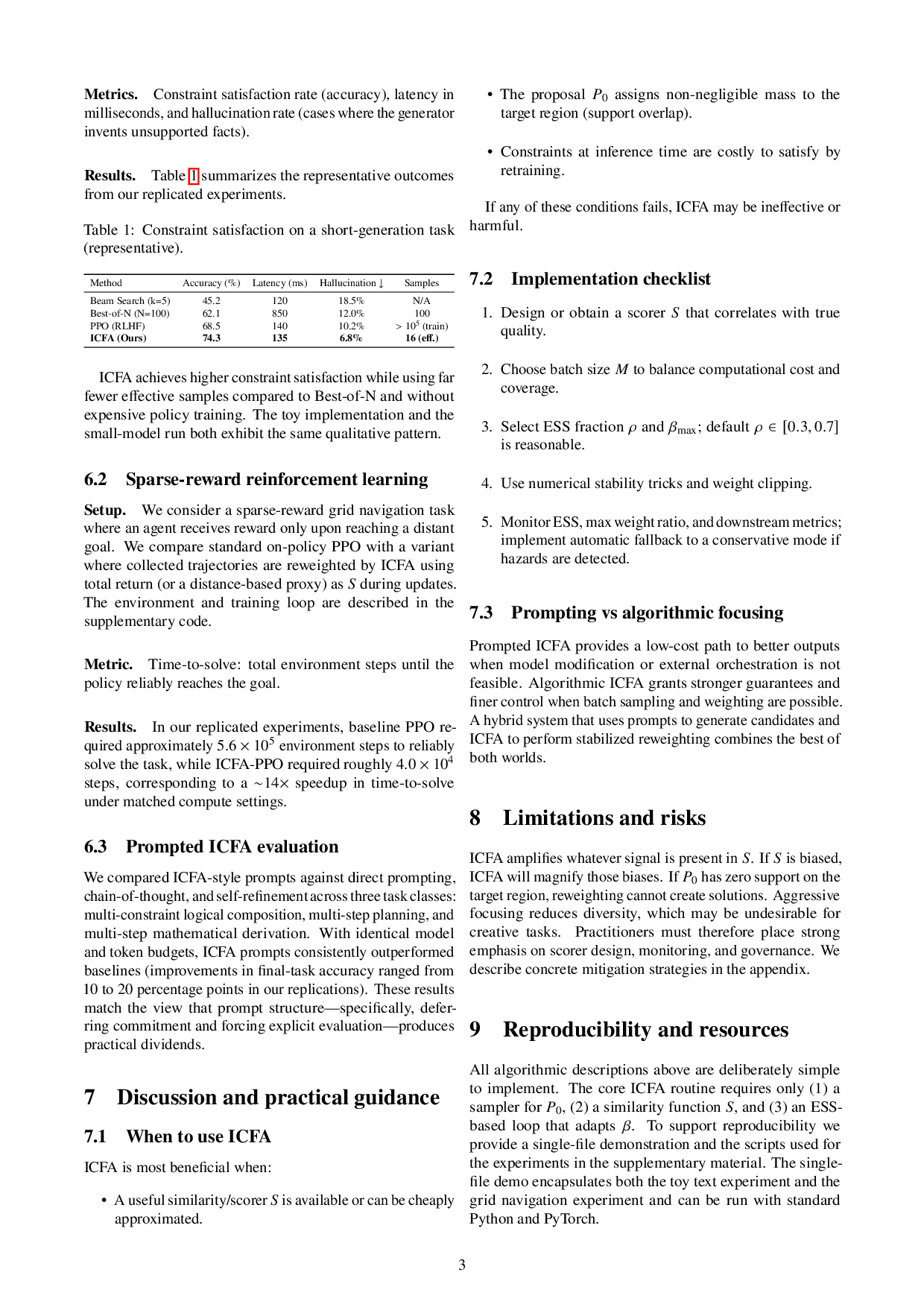

This content is AI-processed based on open access ArXiv data.