Vertical Federated Learning (VFL) enables collaborative model training across organizations that share common user samples but hold disjoint feature spaces. Despite its potential, VFL is susceptible to feature inference attacks, in which adversarial parties exploit confidence scores (i.e., prediction probabilities) shared during inference to reconstruct the private input features of other participants. To counter this threat, we propose PRIVEE (PRIvacy-preserving Vertical fEderated lEarning), a novel defense mechanism named after the French word privée, meaning "private." PRIVEE obfuscates confidence scores while preserving critical properties such as relative ranking and inter-score distances. Rather than exposing raw scores, PRIVEE shares only the transformed representations, mitigating the risk of reconstruction attacks without degrading model prediction accuracy. Extensive experiments against advanced feature inference attacks show that PRIVEE achieves a threefold improvement in privacy protection over state-of-the-art defenses while fully preserving predictive performance.
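The exact PRIVEE transformation is not specified in this section. As a minimal sketch of the general idea only, the snippet below applies a random positive affine map s -> a*s + b to one confidence vector. This is a hypothetical stand-in, not the PRIVEE mechanism. Because a > 0, the ranking (and therefore the top-1 prediction) is unchanged, and every pairwise gap is scaled by the same factor a, so the ratios between inter-score distances are preserved. The function name `obfuscate_scores` and the sampling ranges for a and b are assumptions.

```python
import numpy as np


def obfuscate_scores(scores: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical order-preserving obfuscation of one confidence vector.

    Applies a random positive affine map s -> a * s + b with a > 0. This keeps
    the ranking of the scores and the ratios between pairwise gaps, but hides
    the raw probability values. Illustrative only, not the PRIVEE transform.
    """
    a = rng.uniform(0.5, 2.0)   # random positive scale (assumed range)
    b = rng.uniform(-1.0, 1.0)  # random offset (assumed range)
    return a * scores + b


rng = np.random.default_rng(0)
p = np.array([0.70, 0.20, 0.10])   # raw confidence scores
q = obfuscate_scores(p, rng)       # released, transformed scores

# Ranking is unchanged, so the predicted class is unchanged.
assert np.array_equal(np.argsort(p), np.argsort(q))
# Ratios of pairwise gaps are unchanged (both equal 5 here).
assert np.isclose((q[0] - q[1]) / (q[1] - q[2]), (p[0] - p[1]) / (p[1] - p[2]))
```

Note that absolute gaps are preserved only up to the common scale factor a; a design that must keep absolute distances exactly would fix a = 1.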

Why Is Privacy Still a Challenge in Federated Learning? To mitigate privacy concerns inherent in centralized machine learning systems, federated learning (FL) [28] was introduced. FL enables multiple distributed parties to collaboratively train a shared model without exchanging their raw private data. This paradigm has been successfully applied in privacy-sensitive domains such as mobile keyboard prediction, healthcare, and personalized recommendation systems [24]. However, despite its privacy-preserving design, FL remains susceptible to privacy attacks. For example, in vertical federated learning (VFL), adversarial clients can exploit shared confidence scores during inference to reconstruct other clients' private feature representations [6,19,26,42].

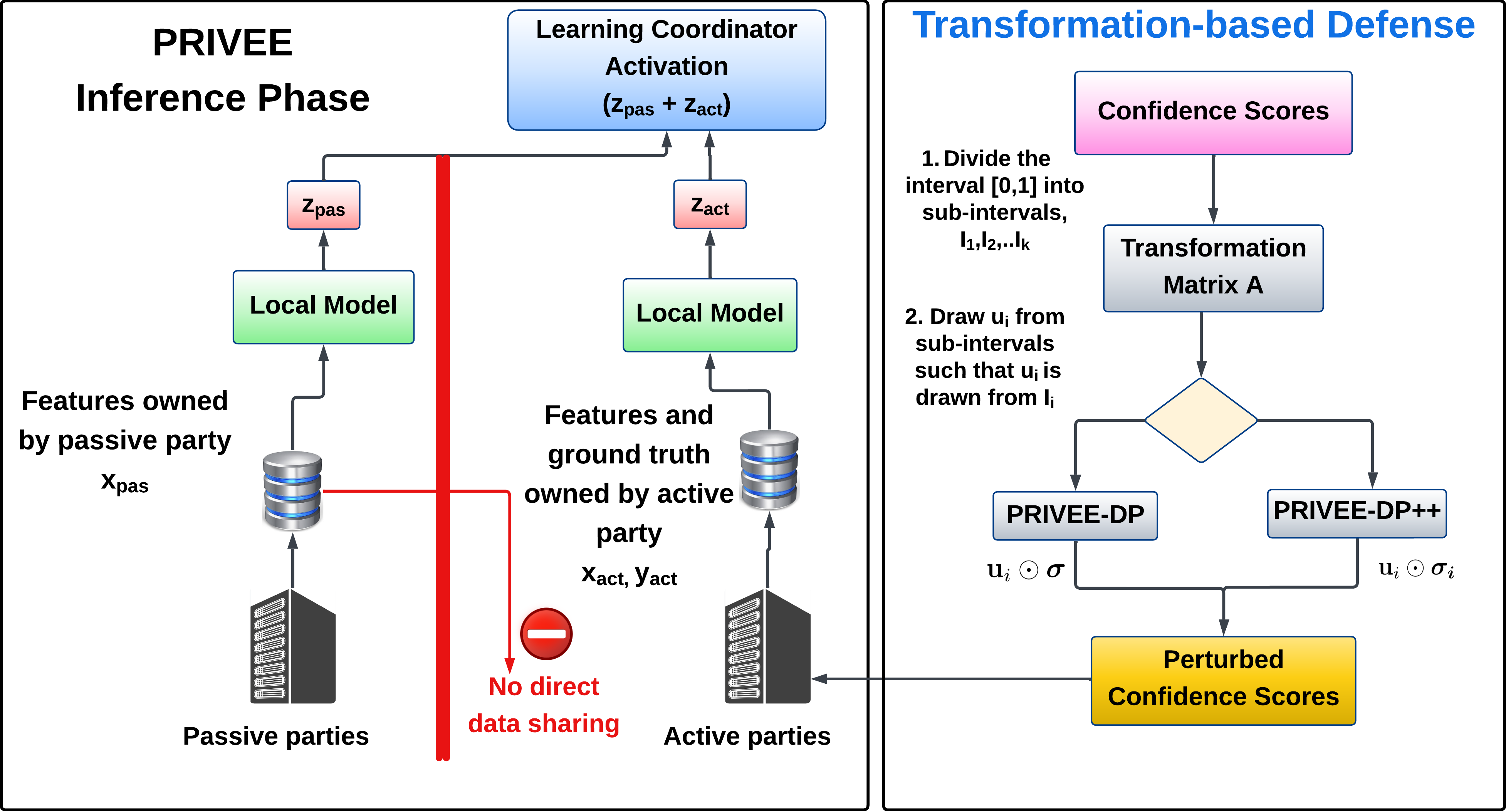

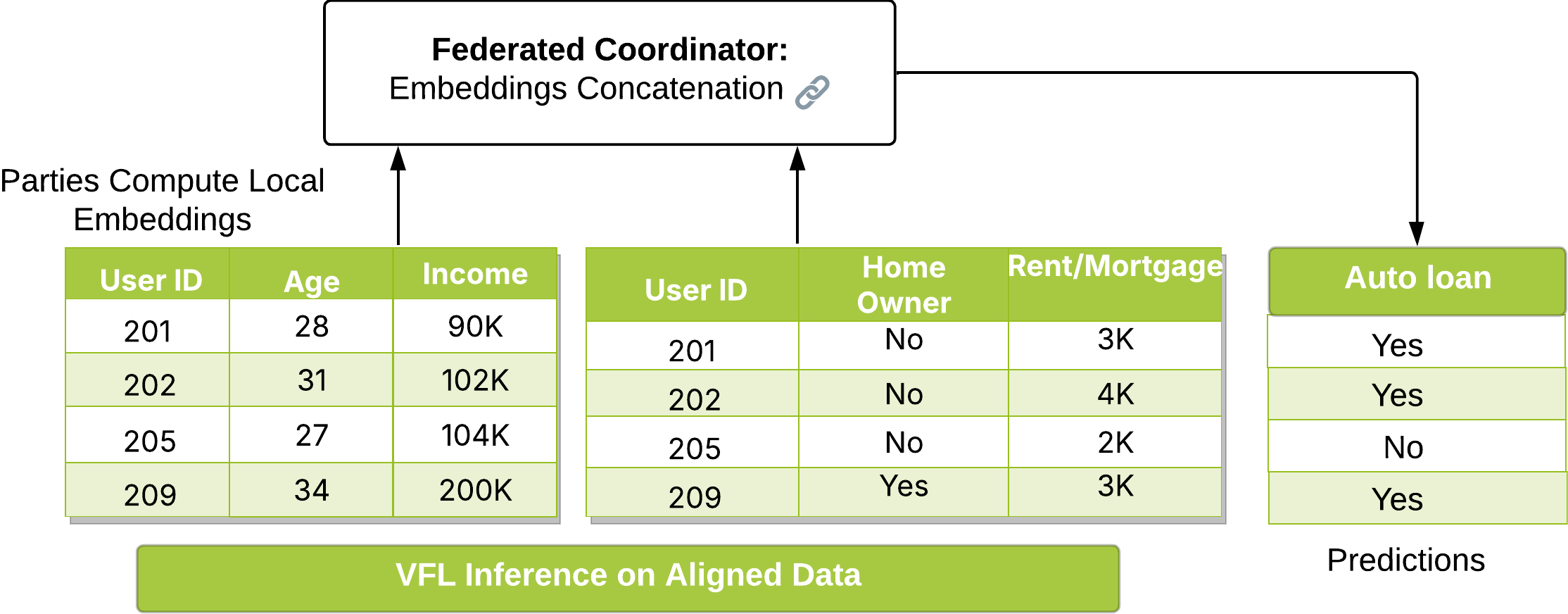

How Does VFL Differ from HFL in Inference Workflow? In horizontal federated learning (HFL), participating parties share the same feature space but possess different data samples. In contrast, vertical federated learning (VFL) involves parties that share the same sample space but hold different subsets of features. VFL is particularly relevant in domains such as banking or healthcare, where institutions possess partial, private information about common entities and must collaborate without exposing raw data.
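To make the distinction concrete, the toy example below partitions one data matrix (rows are users, columns are features) in both ways: HFL splits by rows, while VFL splits by columns over the same users. The matrix, the two-party split and the bank/hospital labels are illustrative assumptions; a real VFL deployment must also align sample IDs across parties, which this sketch takes as given.

```python
import numpy as np

# Toy dataset: 6 users (rows) x 4 features (columns).
X = np.arange(24, dtype=float).reshape(6, 4)

# Horizontal FL: same feature space, different samples -> split by rows.
hfl_party_a, hfl_party_b = X[:3, :], X[3:, :]

# Vertical FL: same samples, different features -> split by columns.
# E.g. a bank holds features 0-1 and a hospital holds features 2-3 (hypothetical).
vfl_party_a, vfl_party_b = X[:, :2], X[:, 2:]

print(hfl_party_a.shape, hfl_party_b.shape)  # (3, 4) (3, 4)
print(vfl_party_a.shape, vfl_party_b.shape)  # (6, 2) (6, 2)
```

In the vertical case no single party can reconstruct a full row of X, which is why prediction requires the collaborative inference described next.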

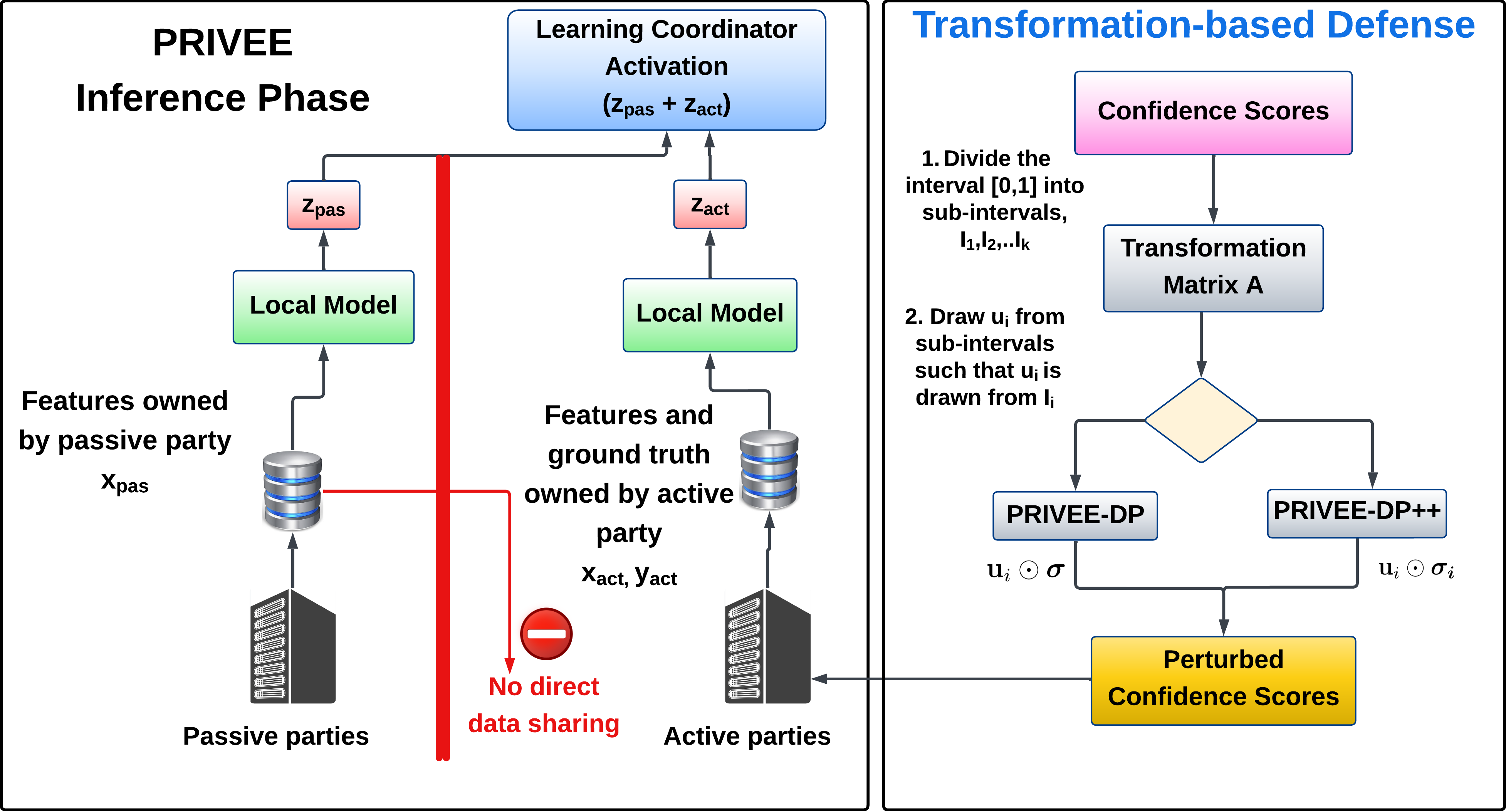

As illustrated in Fig. 1, VFL requires collaborative inference, since accurate predictions depend on the aggregation of distributed features. During inference, both active and passive parties process their local features using private sub-models to generate intermediate representations. These representations are securely transmitted to a trusted coordinator, which aggregates them and applies a final non-linear activation (e.g., softmax or sigmoid). The resulting confidence scores are then broadcast to all participating clients as the final output. This broadcast mechanism, while necessary for prediction, introduces potential avenues for privacy leakage.
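The inference workflow can be sketched for a single sample, assuming two parties whose private sub-models are simple linear maps to class logits and a coordinator that aggregates by summing those logits before applying softmax. The text only says the coordinator "aggregates" the representations; summation is an assumption here, and concatenation followed by a top model is an equally common choice.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(42)
num_classes = 3

# Each party holds a disjoint slice of the features of the same sample.
x_active = rng.normal(size=4)   # active party's local features
x_passive = rng.normal(size=2)  # passive party's local features

# Private sub-models, assumed here to be linear maps to class logits.
W_active = rng.normal(size=(num_classes, 4))
W_passive = rng.normal(size=(num_classes, 2))

# Step 1: each party computes its intermediate representation locally.
h_active = W_active @ x_active
h_passive = W_passive @ x_passive

# Step 2: the coordinator aggregates (here: sums) and applies softmax.
confidence = softmax(h_active + h_passive)

# Step 3: the same confidence vector is broadcast to every party.
# This broadcast output is what feature inference attacks exploit.
received = {"active": confidence.copy(), "passive": confidence.copy()}
print(received["passive"])
```

The passive party ends up holding both its own features and the joint output, which is exactly the combination an adversarial client uses to reason about the other party's features.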

Why Are Existing Inference-Time Defenses Insufficient? Feature inference attacks typically occur during the inference phase and are not mitigated by traditional training-time privacy-preserving methods such as Differential Privacy (DP) or Homomorphic Encryption (HE). These attacks exploit shared confidence scores, combined with the adversary’s local features, to reconstruct private attributes of benign clients. As a result, protecting confidence vectors during inference becomes critical. Prior defenses proposed in both centralized [29,38,43] and VFL [18,21,23,45] contexts provide varying levels of protection but often suffer from a severe accuracy-privacy trade-off. Even lightweight defenses such as rounding, noise injection, or applying DP to confidence scores [19,26] tend to reduce model accuracy significantly. To overcome these limitations, we propose PRIVEE, a novel inference-time defense that increases the reconstruction error (mean squared error, MSE) of inference attacks while maintaining model accuracy and computational efficiency.
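The accuracy side of this trade-off can be illustrated with a small simulation under assumed settings: synthetic Dirichlet-distributed 5-class scores, additive Gaussian noise with standard deviation 0.1, and top-1 agreement with the undefended prediction as a proxy for accuracy. Additive noise flips the predicted class whenever it outweighs the gap between the top two scores, whereas any strictly increasing per-vector map (here a hypothetical random positive affine map, not PRIVEE itself) leaves the argmax unchanged by construction. The sketch measures only accuracy, not privacy.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_classes = 1000, 5

# Synthetic confidence vectors; each row sums to 1.
scores = rng.dirichlet(alpha=np.ones(n_classes), size=n_samples)
top1 = scores.argmax(axis=1)

# Baseline defense: additive Gaussian noise on the released scores.
noisy = scores + rng.normal(scale=0.1, size=scores.shape)
noisy_agreement = np.mean(noisy.argmax(axis=1) == top1)

# Order-preserving alternative: a random positive affine map per row.
a = rng.uniform(0.5, 2.0, size=(n_samples, 1))   # positive scales (assumed)
b = rng.uniform(-1.0, 1.0, size=(n_samples, 1))  # offsets (assumed)
affine = a * scores + b
affine_agreement = np.mean(affine.argmax(axis=1) == top1)

print(f"top-1 agreement with noise:  {noisy_agreement:.3f}")
print(f"top-1 agreement with affine: {affine_agreement:.3f}")
```

Raising the noise scale strengthens obfuscation but lowers agreement further, which is the trade-off described above; the order-preserving map stays at full agreement regardless of how a and b are drawn, as long as a > 0.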

To address the aforementioned challenges and limitations in existing approaches, we propose PRIVEE, a privacy-enhancing inference framework for vertical federated learning (VFL), which introduces the following key contributions:

• PRIVEE enhances client-side privacy by transforming confidence scores through a lightweight, order-preserving perturbation mechanism that incurs negligible computational cost while maintaining inference accuracy. Two adaptive variants, PRIVEE-DP and PRIVEE-DP++, are introduced to support different levels of privacy guarantees and deployment constraints.
• PRIVEE demonstrates strong resilience against state-of-the-art feature inference attacks, including the Generative Regressive Network Attack (GRNA) and the Gradient Inversion Attack (GIA) [19], which exploit confidence vectors to reconstruct sensitive features. Experimental results show that both PRIVEE-DP and PRIVEE-DP++ significantly increase reconstruction error (MSE) across all tested datasets and model architectures.
• PRIVEE-VFL consistently outperforms existing defenses, providing up to 30× higher privacy protection (measured as MSE improvement) while preserving near-identical model accuracy (within 0.3% of the baseline). Inference latency remains in the millisecond range, confirming its practicality for real-time applications such as financial analytics and IoT systems.

Each party then computes local feature embeddings using its private data and forwards them to the federated coordinator, which concatenates the embeddings and performs centralized operations such as loss computation and gradient aggregation. The computed gradients are securely distributed back to the parties for local parameter updates, enabling iterative model refinement under privacy constraints. During the inference phase, each party performs a local forward pass to produce embeddings that are aggregated by the coordinator to generate the final prediction output. This workflow exemplifies how VFL achieves col
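The training workflow described above can be sketched as a single-process simulation. All modelling choices here are assumptions rather than details from the paper: two parties with linear bottom models, a linear top model held by the coordinator, labels available to the coordinator for loss computation, softmax cross-entropy, and plain gradient descent. A real deployment would exchange embeddings and gradients over secure channels instead of shared memory.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d_a, d_b, num_classes, emb = 8, 4, 3, 3, 2

X_a = rng.normal(size=(n, d_a))            # party A's private features
X_b = rng.normal(size=(n, d_b))            # party B's private features
y = rng.integers(0, num_classes, size=n)   # labels (assumed known to coordinator)

params = {
    "W_a": rng.normal(scale=0.5, size=(d_a, emb)),                # A's bottom model
    "W_b": rng.normal(scale=0.5, size=(d_b, emb)),                # B's bottom model
    "W_top": rng.normal(scale=0.5, size=(2 * emb, num_classes)),  # coordinator
}
lr = 0.1


def train_step(params: dict) -> float:
    W_a, W_b, W_top = params["W_a"], params["W_b"], params["W_top"]
    # 1. Each party computes embeddings locally and forwards them.
    e_a, e_b = X_a @ W_a, X_b @ W_b
    # 2. The coordinator concatenates the embeddings and computes the loss.
    h = np.concatenate([e_a, e_b], axis=1)
    logits = h @ W_top
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    loss = float(-np.mean(np.log(p[np.arange(n), y])))
    # 3. Gradient of mean cross-entropy w.r.t. logits: softmax minus one-hot.
    d_logits = p.copy()
    d_logits[np.arange(n), y] -= 1.0
    d_logits /= n
    d_top = h.T @ d_logits
    d_h = d_logits @ W_top.T  # computed before W_top is updated
    # 4. Each party receives the gradient slice for its own embedding
    #    and updates its bottom model in place.
    W_a -= lr * (X_a.T @ d_h[:, :emb])
    W_b -= lr * (X_b.T @ d_h[:, emb:])
    W_top -= lr * d_top
    return loss


losses = [train_step(params) for _ in range(100)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Only embeddings travel from parties to the coordinator and only embedding gradients travel back, so no party sees another party's raw features or bottom-model weights during training.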
