Implicit neural representations (INRs) have become a powerful paradigm for continuous signal modeling and 3D scene reconstruction, yet classical networks suffer from a well-known spectral bias that limits their ability to capture high-frequency details. Quantum Implicit Representation Networks (QIREN) mitigate this limitation by employing parameterized quantum circuits with inherent Fourier structures, enabling compact and expressive frequency modeling beyond classical MLPs. In this paper, we present Quantum Neural Radiance Fields (Q-NeRF), the first hybrid quantum-classical framework for neural radiance field rendering. Q-NeRF integrates QIREN modules into the Nerfacto backbone, preserving its efficient sampling, pose refinement, and volumetric rendering strategies while replacing selected density and radiance prediction components with quantum-enhanced counterparts. We systematically evaluate three hybrid configurations on standard multi-view indoor datasets, comparing them to classical baselines using PSNR, SSIM, and LPIPS metrics. Results show that hybrid quantum-classical models achieve competitive reconstruction quality under limited computational resources, with quantum modules particularly effective in representing fine-scale, view-dependent appearance. Although current implementations rely on quantum circuit simulators constrained to few-qubit regimes, the results highlight the potential of quantum encodings to alleviate spectral bias in implicit representations. Q-NeRF provides a foundational step toward scalable quantum-enabled 3D scene reconstruction and a baseline for future quantum neural rendering research.

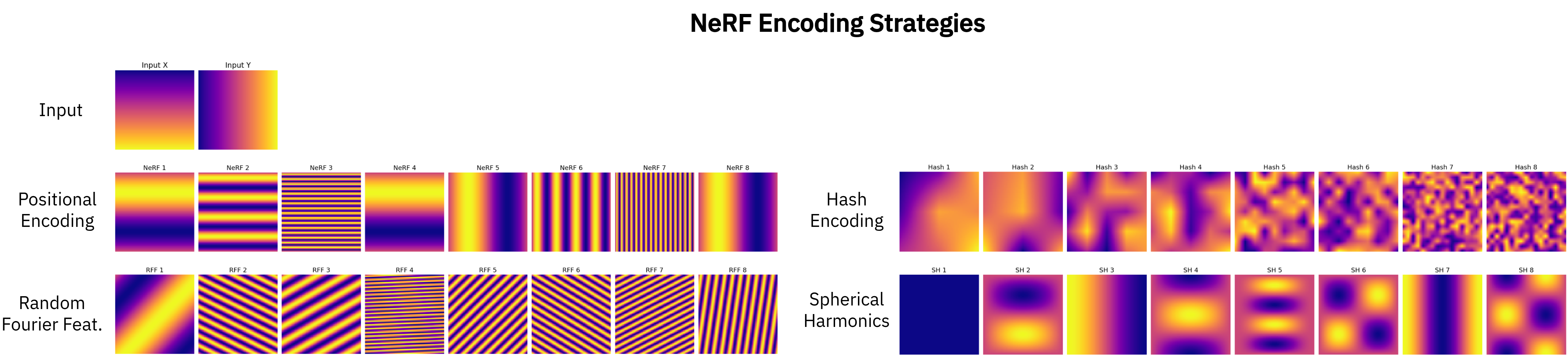

Novel view synthesis, the generation of realistic images of a scene from unseen viewpoints, is a central challenge in computer vision with applications in virtual reality [16], autonomous navigation [45], and digital content creation [52]. Traditional 3D reconstruction methods based on explicit geometry such as meshes or point clouds [34] often fail to capture fine detail and view-dependent effects. Neural Radiance Fields (NeRF) [23] addressed these limitations by representing scenes as continuous volumetric functions that map 3D spatial locations and viewing directions to emitted color and density. By optimizing this representation through differentiable volume rendering, NeRF achieves photorealistic synthesis from sparse input views and has rapidly become a cornerstone in neural rendering. Subsequent variants improve scalability and reconstruction fidelity via multiscale representations [3,4], hash-grid encodings [25], and efficient sampling strategies [33]. Yet, despite their success, classical NeRFs remain computationally intensive and exhibit a pronounced spectral bias, a tendency of MLP-based implicit networks to underfit high-frequency details [42]. This bias limits their ability to reproduce fine geometric structures and complex appearance variations that are essential for realistic rendering.
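The volume rendering step mentioned above can be made concrete with a small numerical sketch. The code below illustrates the standard quadrature commonly used to composite per-sample density and color along a single ray. It is not taken from the Q-NeRF or Nerfacto code; the function name `composite_ray` and the toy inputs are assumptions chosen for illustration.

```python
import numpy as np

def composite_ray(sigmas, colors, deltas):
    """Composite samples along one ray (illustrative sketch).

    sigmas: (N,) non-negative densities at the N samples
    colors: (N, 3) RGB values predicted at the samples
    deltas: (N,) distances between adjacent samples
    Returns the rendered RGB value and the per-sample weights.
    """
    # Opacity contributed by each ray segment.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: probability that the ray reaches sample i unoccluded,
    # i.e. the product of (1 - alpha_j) over all earlier samples j < i.
    trans = np.concatenate([[1.0], np.cumprod(1.0 - alphas)[:-1]])
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights

# Toy ray with three samples (hypothetical values).
sigmas = np.array([0.1, 2.0, 0.5])
colors = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.0, 0.0, 1.0]])
deltas = np.full(3, 0.5)
rgb, weights = composite_ray(sigmas, colors, deltas)
```

Every operation here is differentiable with respect to `sigmas` and `colors`, which is what allows the density and color predictors to be trained from image supervision alone.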

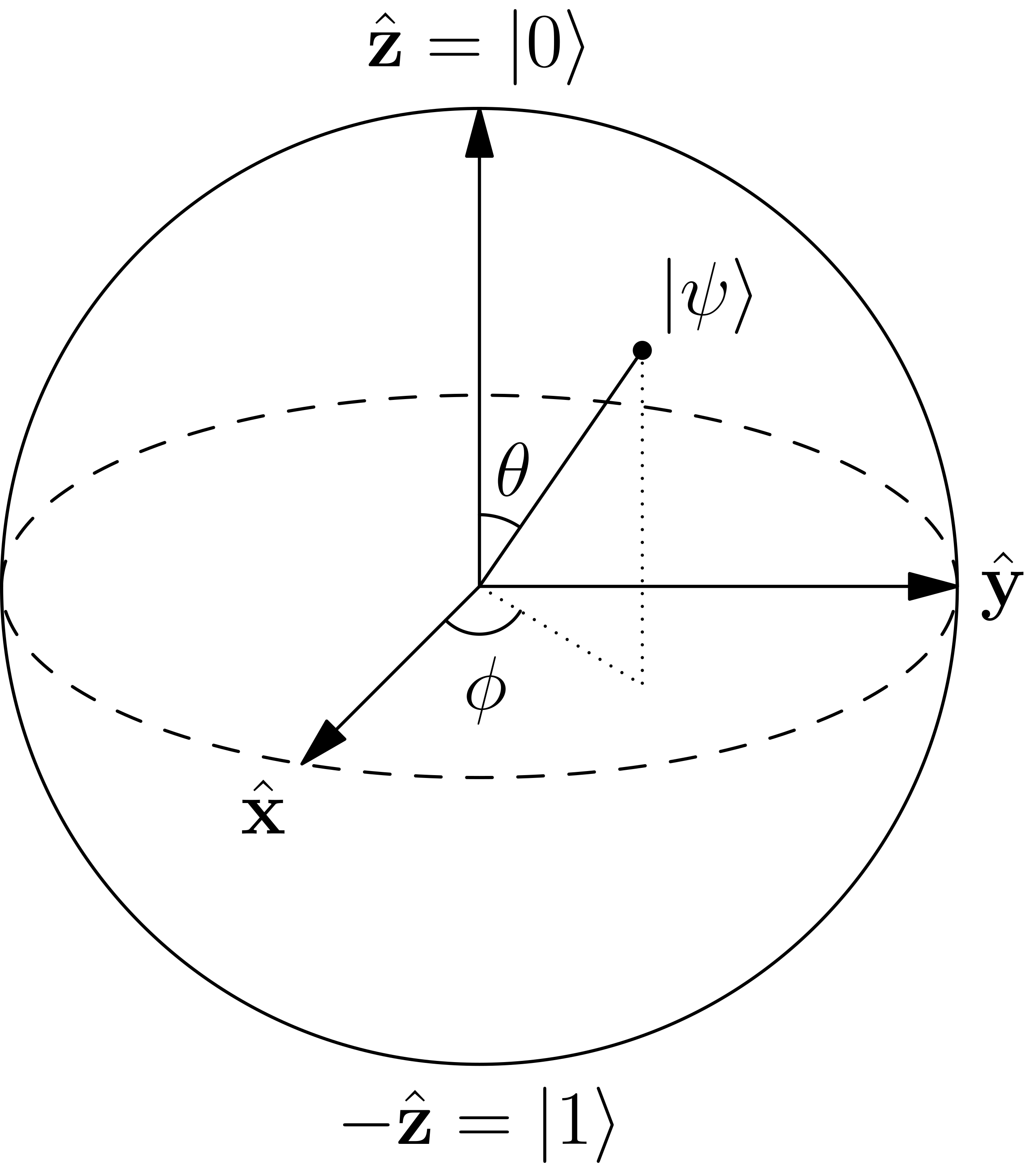

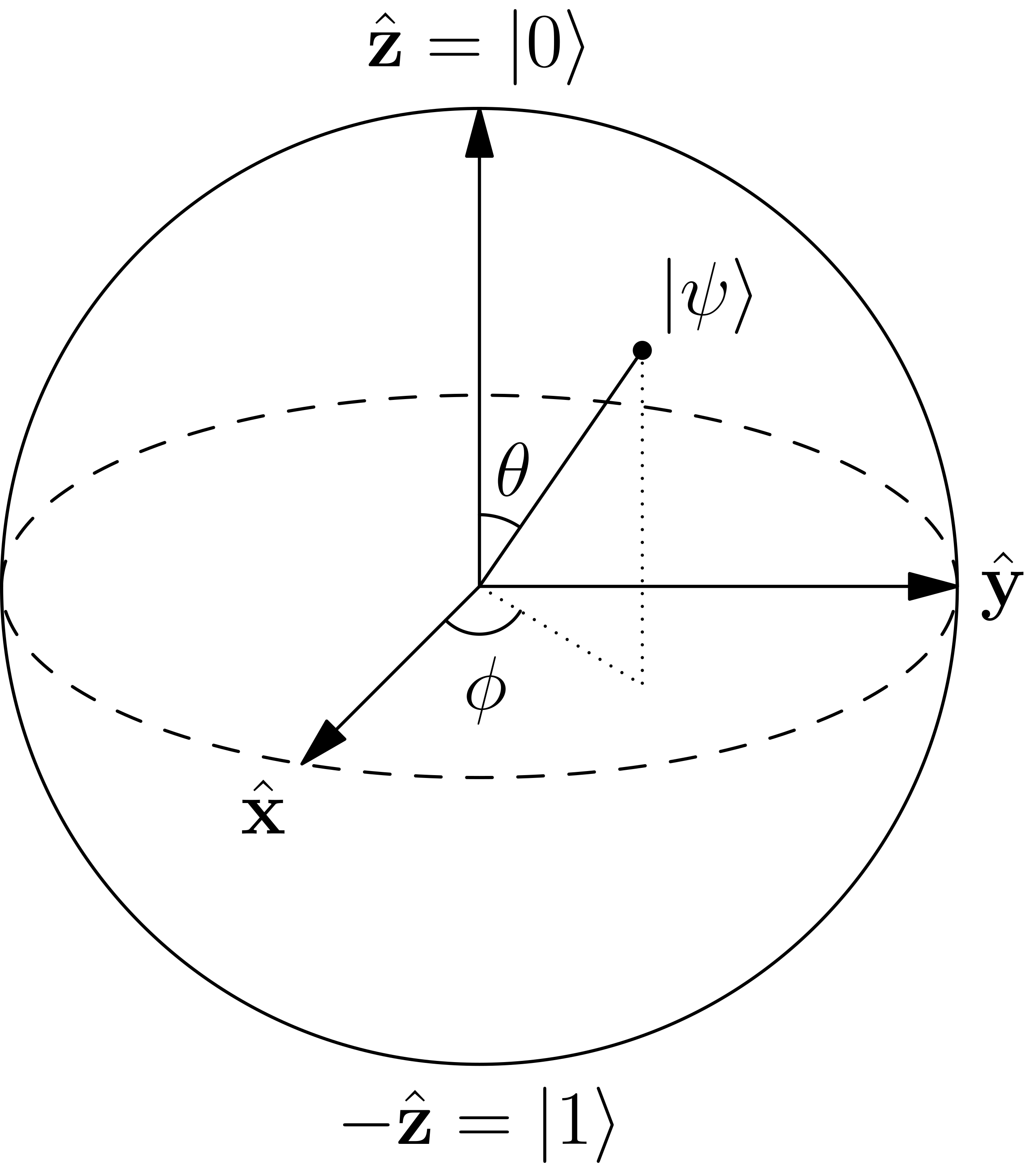

Quantum computing offers a complementary direction to overcome such representational bottlenecks. Quantum Neural Networks (QNNs) leverage superposition and entanglement to process information in exponentially large Hilbert spaces [5,7], potentially achieving greater expressivity per parameter than their classical counterparts. However, the training of QNNs is often hindered by the phenomenon of barren plateaus, regions in the optimization landscape where gradients vanish exponentially with system size, posing significant challenges to scalability and efficient learning [9,22]. Parameterized quantum circuits (PQCs) naturally implement Fourier-like function decompositions [13,35], making them particularly suitable for modeling high-frequency components, precisely the regime where classical networks struggle. Recent studies in quantum implicit representations suggest that these circuits can compactly encode fine spectral details while maintaining trainability on noisy intermediate-scale quantum (NISQ) devices [51]. Such characteristics motivate exploring quantum-enhanced methods for continuous scene representations, bridging advances in quantum machine learning and neural rendering.
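The Fourier structure of PQCs can be checked numerically on a toy case. The sketch below simulates a single-qubit data re-uploading circuit in plain NumPy: trainable rotations alternate with `RZ(x)` encoding gates, and the output is the expectation value of Pauli-Z. This is a generic illustration, not the QIREN architecture; the gate layout, the helper names (`rot`, `rz`, `circuit_output`), and the random parameters are all assumptions. For such a circuit with `L` encoding layers, the output as a function of `x` should contain only integer frequencies up to `L`.

```python
import numpy as np

def rot(theta, phi, lam):
    """General single-qubit unitary used as a trainable block."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -np.exp(1j * lam) * s],
                     [np.exp(1j * phi) * s, np.exp(1j * (phi + lam)) * c]])

def rz(x):
    """Data-encoding gate: rotation about Z by the input x."""
    return np.array([[np.exp(-0.5j * x), 0.0],
                     [0.0, np.exp(0.5j * x)]])

def circuit_output(x, params):
    """Expectation of Pauli-Z after L = len(params) - 1 encoding layers."""
    state = rot(*params[0]) @ np.array([1.0 + 0.0j, 0.0 + 0.0j])
    for p in params[1:]:
        state = rot(*p) @ (rz(x) @ state)
    return float(np.abs(state[0]) ** 2 - np.abs(state[1]) ** 2)

L = 3  # number of encoding layers (hypothetical choice)
rng = np.random.default_rng(0)
params = rng.uniform(0.0, 2.0 * np.pi, size=(L + 1, 3))

# Sample one period of the output and inspect its discrete Fourier spectrum.
xs = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
fx = np.array([circuit_output(x, params) for x in xs])
coeffs = np.fft.rfft(fx) / len(xs)

# Largest Fourier magnitude above frequency L; expected to be numerically zero.
high_freq_max = np.max(np.abs(coeffs[L + 1:]))
```

In this toy setting the accessible frequencies are fixed by the number of encoding gates, while the trainable rotations only shape the Fourier coefficients. Adding encoding layers therefore widens the spectrum the circuit can represent.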

This work introduces Quantum Neural Radiance Fields (Q-NeRF), a hybrid quantum-classical framework that integrates Quantum Implicit Representation Networks (QIREN) [51] within the modular NeRF pipeline. Building on QIREN, Q-NeRF substitutes selected classical encoding or regression components with quantum modules while preserving the volumetric rendering formulation. This design enables controlled comparisons between classical and quantum components under identical rendering conditions. The framework is implemented in the NISQ regime through simulators that account for hardware constraints, shallow circuit depths, and hybrid optimization loops [11,29].

The main contributions of this paper are:
• Q-NeRF, a modular hybrid NeRF architecture that incorporates QIREN-based quantum modules into a classical radiance field pipeline.
• A systematic comparison of three hybrid configurations, isolating the roles of quantum processing in density and color prediction.
• Empirical validation demonstrating the feasibility of integrating quantum circuits for high-frequency radiance modeling under current simulation constraints.

To our knowledge, Q-NeRF represents the first attempt to extend neural radiance fields into the quantum domain. This work establishes a foundation for studying how quantum computation can enhance implicit 3D representations, providing insights into the opportunities and limitations of hybrid quantum-classical models for future photorealistic scene reconstruction.

This work is structured as follows. Section 2 reviews prior research on Neural Radiance Fields, Implicit Neural Representations, and Quantum Machine Learning, emphasizing advances in frequency modeling and hybrid quantum-classical training. Section 3 details the proposed Q-NeRF architecture, describing its integration of QIREN modules into the NeRF pipeline and the three hybrid configurations explored. Section 4 presents the experimental setup, baselines, and training procedures used to evaluate Q-NeRF under realistic simulation constraints. Section 5 reports quantitative and qualitative results, analyzing reconstruction performance and the effects of quantum components. Finally, Section 6 concludes the paper and outlines future research directions.

Neural Radiance Fields (NeRF) formulate novel-view synthesis as learning a continuous 5D radiance field with volumetric rendering [23]. Subsequent work improves antialiasing and robustness to scale wi
