We present Particulate, a feed-forward approach that, given a single static 3D mesh of an everyday object, directly infers all attributes of the underlying articulated structure, including its 3D parts, kinematic structure, and motion constraints. At its core is a transformer network, Part Articulation Transformer, which processes a point cloud of the input mesh using a flexible and scalable architecture to predict all the aforementioned attributes with native multi-joint support. We train the network end-to-end on a diverse collection of articulated 3D assets from public datasets. During inference, Particulate lifts the network's feed-forward prediction to the input mesh, yielding a fully articulated 3D model in seconds, much faster than prior approaches that require per-object optimization. Particulate can also accurately infer the articulated structure of AI-generated 3D assets, enabling full-fledged extraction of articulated 3D objects from a single (real or synthetic) image when combined with an off-the-shelf image-to-3D generator. We further introduce a new challenging benchmark for 3D articulation estimation curated from high-quality public 3D assets, and redesign the evaluation protocol to be more consistent with human preferences. Quantitative and qualitative results show that Particulate significantly outperforms state-of-the-art approaches.

Our everyday environment is populated with objects whose functionality arises not only from their shape, but also from the motion of multiple interconnected parts. Cabinets, for example, have doors and drawers that open through rotational and translational motion, constrained by hinges and sliding tracks. Humans can effortlessly perceive the articulated structure of such objects. Developing similar capabilities for machines is crucial for robots that must manipulate these articulated objects in daily tasks [21,44,59], and can simplify the creation of interactive digital twins for gaming and simulation [16,56].

In this paper, we consider the problem of estimating the articulated structure from a single static 3D mesh. Prior work has attempted to model the rich variety of everyday articulated objects via procedural generation [26,41]. However, scaling such rule-based approaches for the long tail of real-world objects remains extremely challenging. We posit that learning-based approaches that capture articulation priors from large collections of 3D assets offer greater potential for generality.

Several authors have already proposed learning-based approaches for closely related tasks. [31][32][33]54] focus on learning part segmentation on 3D point clouds. These methods, however, primarily predict semantic part segmentation on static objects, without modeling the underlying articulations between parts. Other learning-based methods [11,20,29,30] aim to generate 3D articulated objects directly. Despite great promise, these models are typically trained on only a few object categories, assume key attributes such as the kinematic structure are known a priori, and often rely on part retrieval to assemble fully articulated 3D assets, which limits their ability to produce accurate and diverse articulated objects.

Seeking to address these limitations, we introduce PAR-TICULATE, a unified learning-based framework that takes an input 3D mesh of an everyday object and outputs the underlying articulated structure. PARTICULATE predicts a full set of articulation attributes, including articulated 3D part segmentation, kinematic structure, and parameters of articulated motion. It does so in a single forward pass, taking only seconds. In particular, our design of tasking PARTICU-LATE to analyze existing 3D assets rather than synthesizing them from scratch is motivated by the rapid advances in 3D generative models [7,[48][49][50]61]: by focusing on the orthogonal task of articulation estimation, PARTICULATE can leverage increasingly high-fidelity 3D generators to enable one-stop creation of articulated 3D objects with realistic geometry and appearance (Fig. 1). The resulting assets can be seamlessly imported into physics engines for simulation purposes.

At the core of PARTICULATE is an end-to-end trained Part Articulation Transformer, designed to be flexible and scalable. To this end, the network operates on point clouds, which is an explicit and highly structured representation of the input mesh and is applicable to virtually all 3D shapes. It consists of standard attention blocks paired with multiple decoder heads to predict different articulation attributes. This flexible design allows us to train this network on a diverse collection of articulated 3D assets from public datasets, including PartNet-Mobility [59] and GRScenes [52]. To further enhance generalization to novel objects, we augment the raw point cloud with 3D semantic part features obtained from PartField [32] as input to the network. The resulting model predicts the articulated structure of input meshes of new objects in arbitrary articulated poses, including those generated by off-the-shelf 3D generators (e.g., [50]), in a feed-forward manner.

To evaluate our model, we introduce a new challenging benchmark dataset of 220 high-quality 3D assets with accurate articulation annotations, crafted by Lightwheel under the CC-BY-NC license [27]. We also establish a comprehensive evaluation protocol for the 3D articulation estimation task, with new metrics that more faithfully reflect pre-

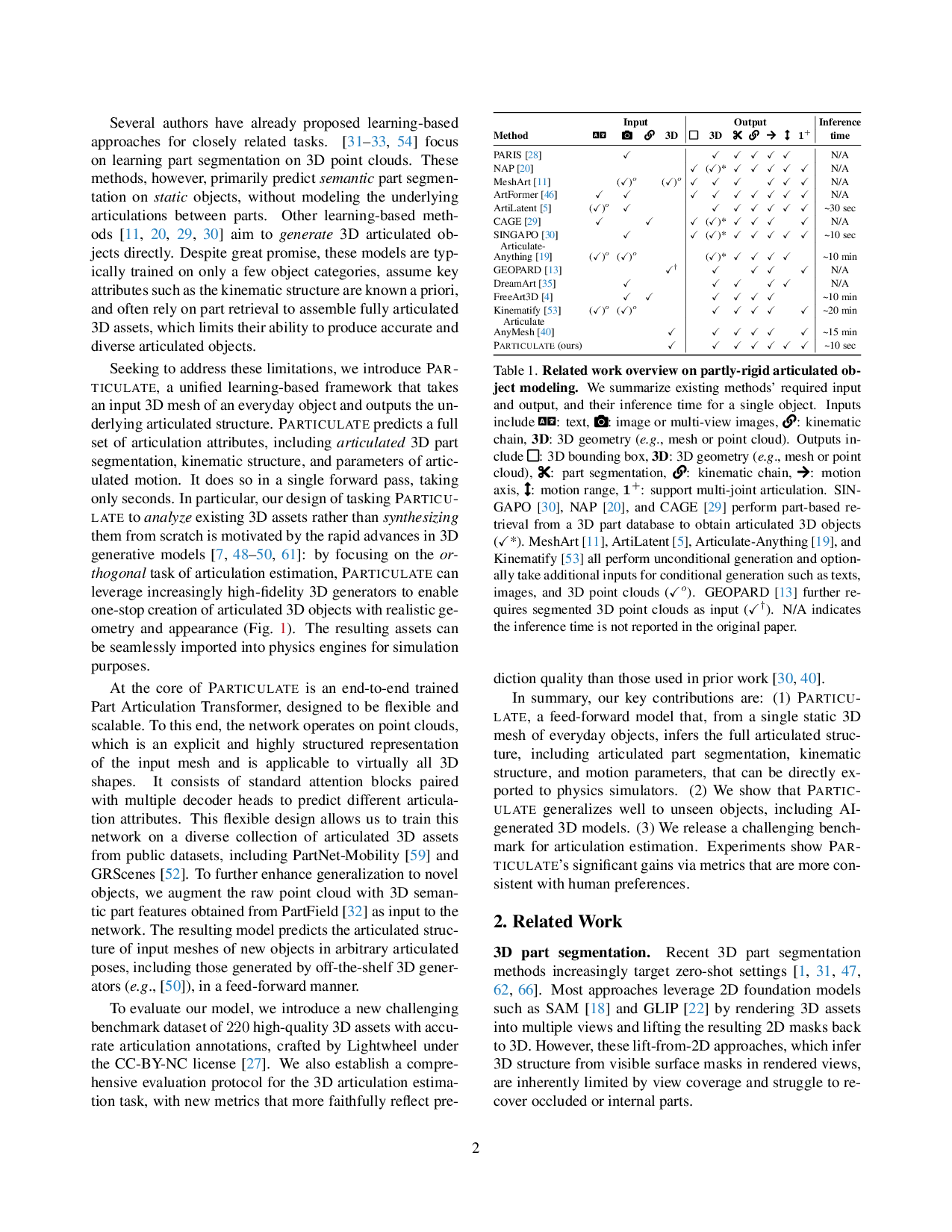

Table 1. Related work overview on partly-rigid articulated object modeling. We summarize existing methods’ required input and output, and their inference time for a single object. Inputs include : text, : image or multi-view images, : kinematic chain, 3D: 3D geometry (e.g., mesh or point cloud). Outputs include □: 3D bounding box, 3D: 3D geometry (e.g., mesh or point cloud), : part segmentation, : kinematic chain, : motion axis, : motion range, 1 + : support multi-joint articulation. SIN-GAPO [30], NAP [20], and CAGE [29] perform part-based retrieval from a 3D part database to obtain articulated 3D objects (✓*). MeshArt [11], ArtiLatent [5], Articulate-Anything [19], and Kinematify [53] all perform unconditional generation and optionally take additional inputs for conditional generation such as texts, images, and 3D point clouds (✓ o ). GEOPARD [13] further requires segmented 3D poin

This content is AI-processed based on open access ArXiv data.